While Vignette works great for having large-scale conversations about a whole event’s worth of photos, it requires a significant amount of time and a desktop computer. I decided that a shorter and more focused conversation would be a good way to make this reflective interaction more accessible and approachable. So, I stripped Vignette down to its core: asking questions about a single photo.

You can try out this brief experience by tweeting or DMing a photo at the Twitter account @SnapshotReflect. A few dozen lucky people also got to visit a physical version of this, the "Reverse Photo Booth," at the 2017 BuzzFeed Open Lab Showcase.

The first version

Snapshot Reflect began a few weeks ago when I found Cheap Bots Done Quick, a website by George Buckenham. Using a super approachable text expansion language called Tracery (created by Kate Compton), Cheap Bots Done Quick makes it very easy to create Twitter bots that tweet or respond to @s automatically. When I discovered this tool, I immediately wanted to get my hands dirty and explore one idea for what a micro-scale Vignette interaction might look like.

Tracery works by defining a “grammar” of possible sentences and variations. Special terms are defined within this grammar and expanded randomly to generate unique output. For example, part of the grammar of @SnapshotReflect looked like this:

Each term sandwiched in #s will be expanded to one of several alternate phrases, as defined later in the grammar. For example, #took# could become “took,” “captured,” “photographed,” “shot,” or “recorded.” #origin# is a special term that defines the default expansion point. Therefore, this example grammar could generate both “What did you feel just before you captured that photograph?” and “How did you feel after you took that snapshot?” They are clearly very similar questions, but by encouraging you to create a variety of possible text expansions, Tracery makes it easy to create interesting, diverse output for a bot.
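To make the expansion process concrete, here is a rough sketch in Python. The #took# alternatives come from the description above; the other rule names (#question#, #when#, #photo#) and their options are my assumptions, chosen so that both example questions can be generated. The `expand` function is a deliberately tiny stand-in for Tracery, not the real library, and it ignores Tracery features such as modifiers.

```python
import random
import re

# Hypothetical grammar: only the "took" alternatives are taken from the text;
# every other rule name and option is an assumption for illustration.
RULES = {
    "origin": ["#question# did you feel #when# you #took# that #photo#?"],
    "question": ["What", "How"],
    "when": ["just before", "after", "while"],
    "took": ["took", "captured", "photographed", "shot", "recorded"],
    "photo": ["photo", "photograph", "snapshot", "picture"],
}

TERM = re.compile(r"#(\w+)#")


def expand(rules, text="#origin#", rng=random):
    """Replace every #term# in text with a randomly chosen, fully expanded option."""

    def pick(match):
        option = rng.choice(rules[match.group(1)])
        # Options may themselves contain #terms#, so expand them recursively.
        return expand(rules, option, rng)

    return TERM.sub(pick, text)


print(expand(RULES))
```

Each call starts from #origin# and makes an independent random choice for every term, which is why even a small grammar produces a lot of variety.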

The first version of Snapshot Reflect generated a random response using a grammar like the one above (but longer) to any message it received. This meant that the bot asked questions about a photo even if the message didn’t contain an image, and it didn’t have any way of keeping track of who it was talking to or what it was talking about.

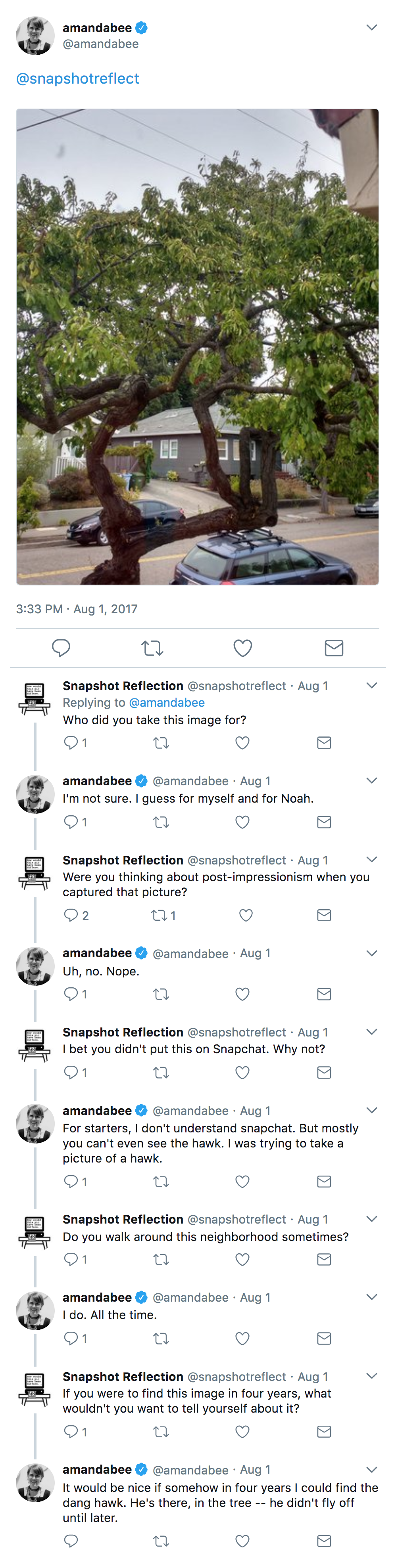

Sometimes these interactions went well...

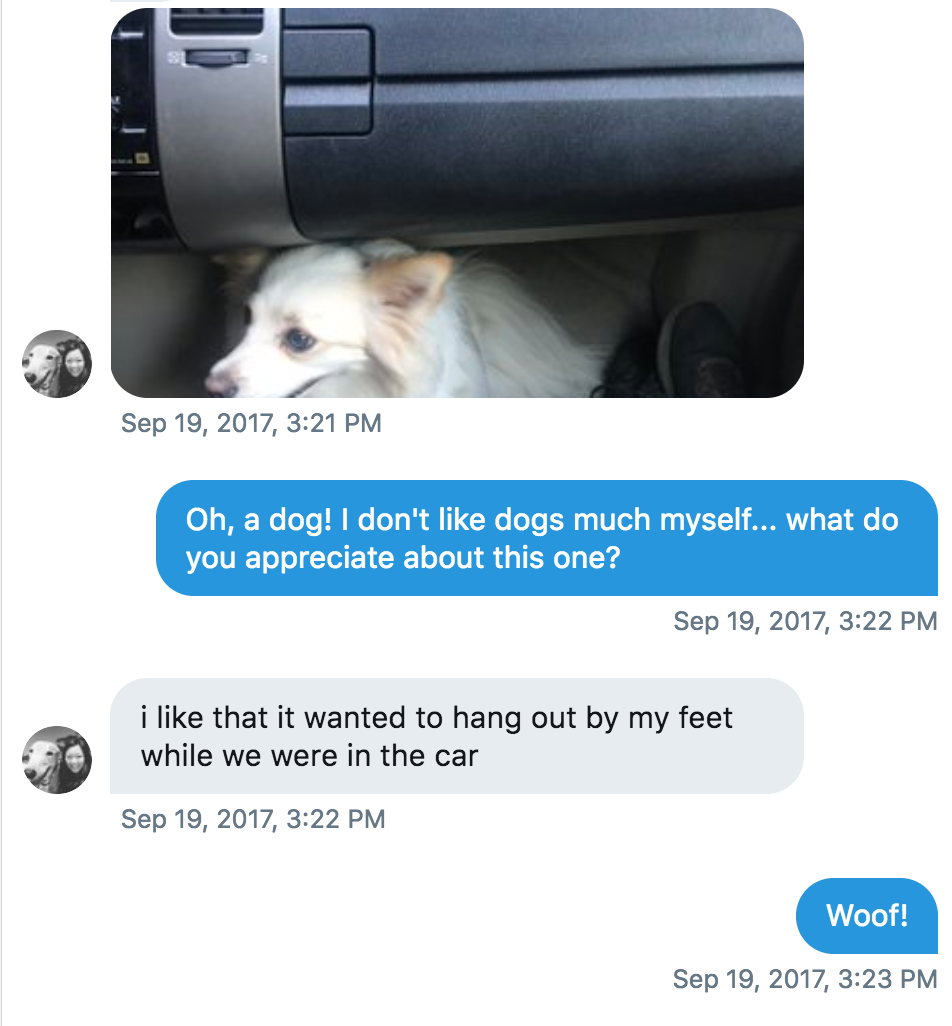

But not always.

I highly recommend developing your Tracery code for Cheap Bots Done Quick in a local Git repository, or at least saving a copy of it now and then. It’s nice to look back at old versions of your text generation, and it can be shockingly easy to lose track of what changes you’ve made. Unfortunately, I learned this the hard way, so the initial version of Snapshot Reflect has been lost in the mists of time.

Avoiding repetition

The second thread above illustrates a particular failure case — Snapshot Reflect had a tendency to repeat itself. This was because I baked in several different ways to ask the important question, “why did you take this photo?”, and Cheap Bots Done Quick generated a random reply from the top each time. In order to avoid repeating itself, the bot needed a way to keep track of conversations and exclude phrases that it had previously used. This meant moving away from Cheap Bots Done Quick.

I rewrote the bot in Python, using a port of Tracery, so that I could keep building off of the same grammar. When a new conversation begins, the bot stores a record in a MongoDB database on its server. It uses this record to keep track of what particular question categories it has already used, and will only use each type of question once during a conversation. This produces much more dynamic conversations.
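The bookkeeping can be sketched roughly as follows. To keep the example self-contained, a plain dictionary stands in for the MongoDB record, and the function name, the record layout, and all question wordings except “why did you take this photo?” are assumptions rather than the bot's actual code.

```python
import random

# Hypothetical question categories, each with a few alternate phrasings.
CATEGORIES = {
    "Why": ["Why did you take this photo?", "What made you want to capture this?"],
    "Feel": ["How did you feel when you took it?"],
    "Who": ["Who were you with?"],
}


def next_question(record, categories, rng=random):
    """Pick a question from a category this conversation has not used yet.

    `record` stands in for the per-conversation database document and is
    assumed to hold a "used" list of category names.
    """
    unused = [name for name in categories if name not in record["used"]]
    if not unused:
        return None  # every category has been asked; the conversation is over
    category = rng.choice(unused)
    record["used"].append(category)  # remember it so it is never picked again
    return rng.choice(categories[category])


conversation = {"used": []}
for _ in range(4):
    print(next_question(conversation, CATEGORIES))
```

With three categories, the first three calls each return a question from a different category and the fourth returns None, so the bot can no longer ask “why” twice in different words.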

Asking better questions

The other issue with the first iteration of Snapshot Reflect was that the questions were not related to the provided image at all. It would be fun if they had some relevance to the image content!

While Vignette runs a DIY version of Google’s open source Inception network to identify image content, Snapshot Reflect takes a simpler (though slightly pricier) approach: the Microsoft Computer Vision API. This API tags each image with a list of keywords, and Snapshot Reflect prioritizes the tags that come back to choose what to talk about. Though many pictures tagged with “dog” might also be tagged with “outdoors,” Snapshot Reflect cares more about “dog” because it’s probably the more relevant part of the image. An image tagged with “dog” would expand a custom list of questions: "dog": ["#Awwname#", "#Dog#", "#Dogappreciate#", "#Dogsound#"]. Each of the terms in #s corresponds to a particular category of questions that will be asked only once, for example: "Awwname": ["Aww, what's their name?", "Cute dog, what do you call them?"].
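One simple way to express this kind of prioritization is an ordered list of the tags the grammar knows about. The sketch below is only an illustration of the idea: the priority order, the tag names other than “dog” and “outdoors,” and the function name are all assumptions, and the input is taken to be a plain list of tag names rather than the API's actual response format.

```python
# Hypothetical priority order: more specific subjects come before generic settings.
TAG_PRIORITY = ["dog", "cat", "person", "food", "outdoors"]


def choose_topic(tags, priority=TAG_PRIORITY):
    """Return the highest-priority known tag for an image, or None if none match."""
    present = set(tags)
    for tag in priority:
        if tag in present:
            return tag
    return None  # no known tag: fall back to generic questions


print(choose_topic(["outdoors", "grass", "dog"]))
print(choose_topic(["sky", "cloud"]))
```

The chosen tag can then be used as the starting rule for question generation, with generic questions as the fallback when nothing matches.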

The Reverse Photo Booth

For the end-of-fellowship BuzzFeed Open Lab Showcase, I wanted to encourage other people to interact with Snapshot Reflect while also making the chatbot more tangible. So I built a "Reverse Photo Booth" — it’s "exactly like a normal photo booth," except instead of taking a photo with you when you leave, you provide a photo when you enter.

Users text a photo to the Reverse Photo Booth's phone number. The same question generator that powers the Twitter bot generates a short dialog with the person inside the booth.

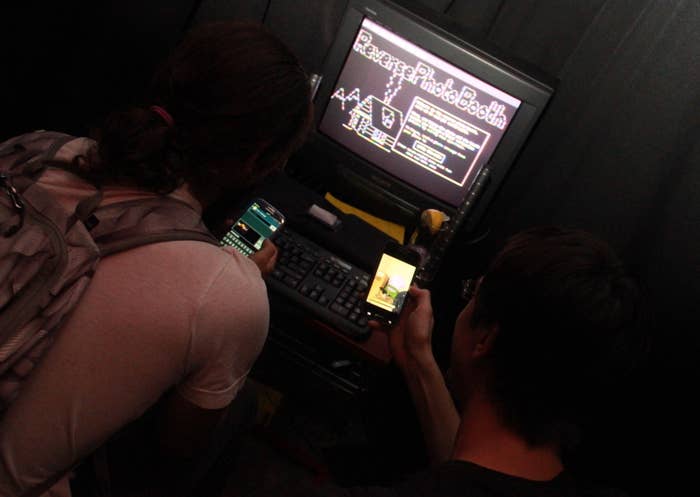

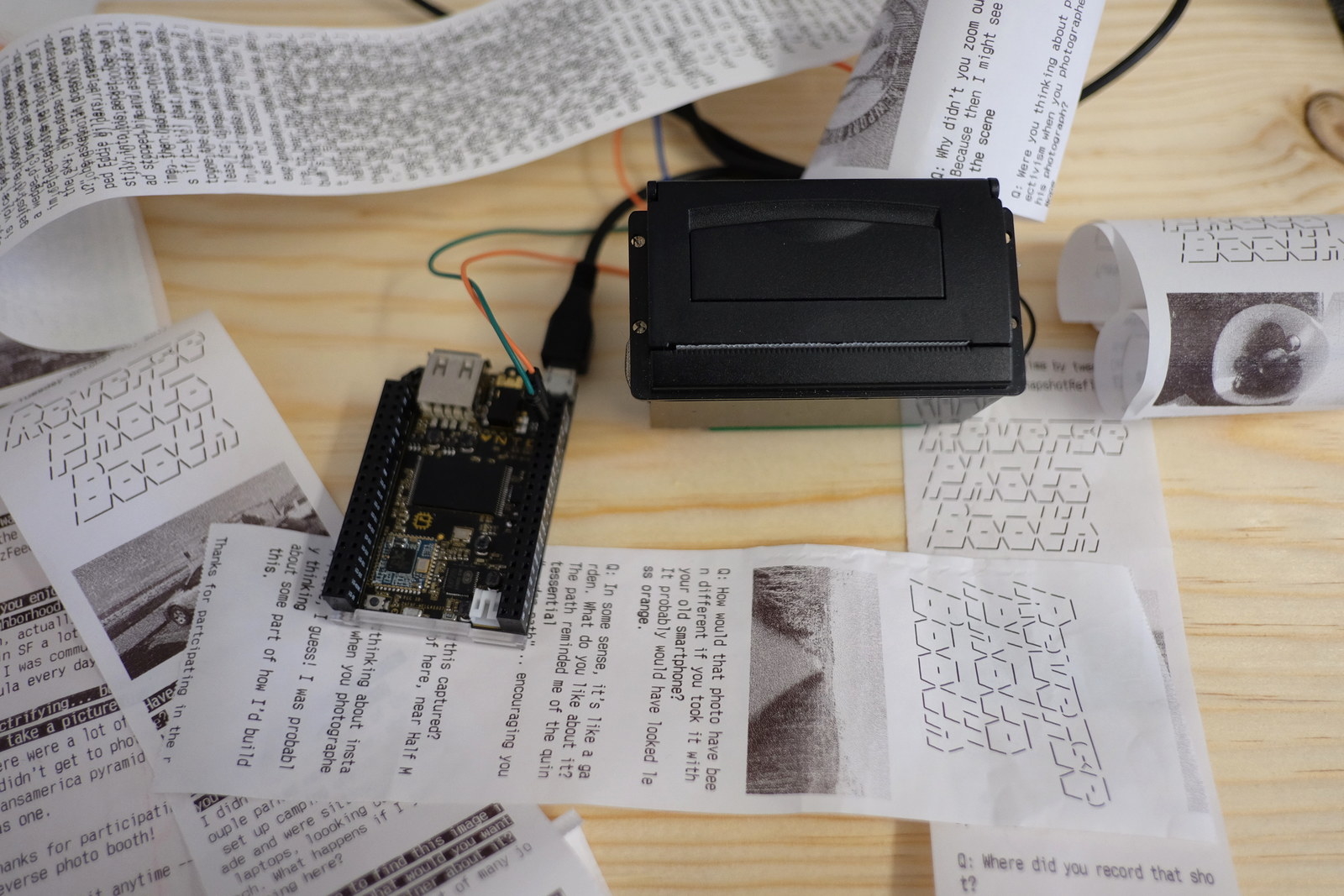

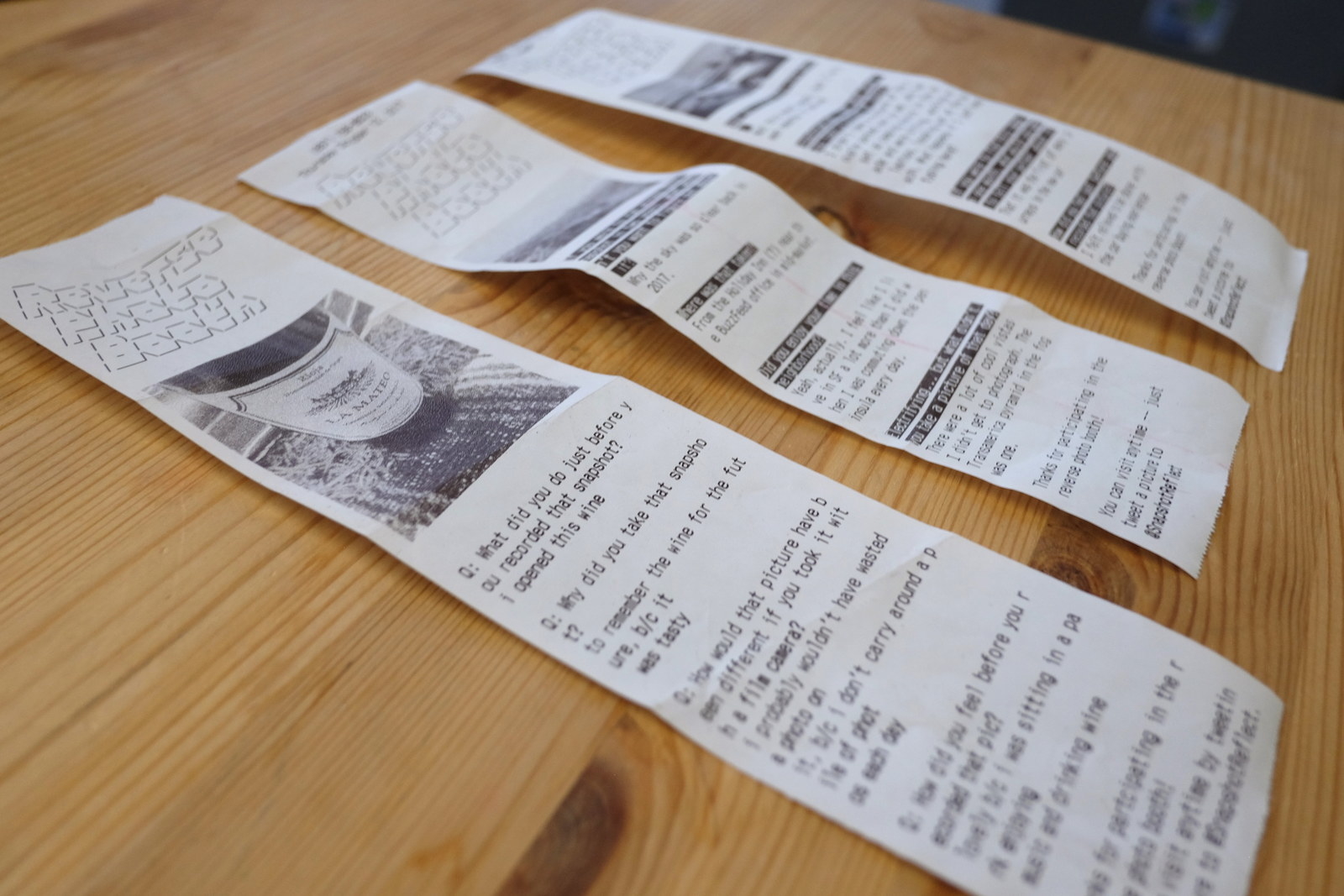

The Reverse Photo Booth used a CHIP to drive an ancient CRT TV with an old-school text-based interface. This was an intentional decision to play up the deficiencies of the question generator and the stilted, bot-like character of the interaction. The Reverse Photo Booth also printed out a copy of the photo and a log of the conversation on a little thermal printer.

The promise of a tangible gift encouraged many people to try out the chatbot. While the questions were not always perfectly chosen or phrased (send a photo to @SnapshotReflect to see what I mean), getting to take something home made the experience more lasting and valuable.

If you want to make some version of this yourself, or just want to learn more about Tracery and Twitter bots, the full source code for this project is on GitHub.

And if you want to talk to a bot about a photo, @SnapshotReflect takes DMs or straight @s.