Part of my research project for the Open Lab has been studying the alt-right through digital ethnography, and that means going to where they are: on Twitter, on Voat, on Gab.ai, and on Reddit. I created accounts on each of those sites to see what they are seeing. Starting in December, I maintained an egg account on Twitter that followed Donald Trump, members of his staff, Bannon, Breitbart, Breitbart writers, and almost every account Richard Spencer follows, excluding the mainstream or liberal journalists he follows. The feed is about what you’d imagine: Cernovich calling people “cucks”, Baked Alaska making jokes, Spencer talking about being punched, and a lot of wild Pepes.

What would a new political supporter of the alt-right see, and what content would be served up to them organically from browsing and following on Twitter? I took a few weeks to slowly follow different accounts. I wanted to see what would organically appear, so I could sift through who was mentioning whom and follow the patterns and networks these accounts were part of. While the experiment was to see what was popular among alt-right accounts, I also needed to follow the major accounts they were mentioning, such as elected officials (like POTUS) and the major news outlets or blogs they referenced. I started by following Richard Spencer, The Blaze, Kellyanne Conway, Stephen Bannon, Donald Trump and Breitbart News. From there, I searched through hashtags like #altright and #maga, which led to other hashtags like #whitegenocide, #tradlife, #whitepill, #whitegirlsaremagic and #huwhite. Then I slowly started to follow more and more accounts from those hashtags. The goal was to see whose accounts were retweeted and engaged with from the hashtags and accounts I was following. Then I folded in everyone that Spencer was following.

One of the ways to understand a community is to see, day to day, what kind of information, in-jokes and discussions it creates, from following hashtags to seeing what is retweeted. Some tweets were retweeted by multiple accounts, so I would see them repeatedly in my timeline. What was most fascinating were the accounts Twitter started to suggest I follow next.

Twitter has a pretty "okay" recommendation engine. It has moments of error, and it's easy to see where the edges of the recommendation algorithm are exposed, but on the whole it does a good job of finding things you might like based on what you already like. It is good at replicating patterns: once you follow enough users, the reco-algo starts to get more accurate. Here's what I noticed as my egg account followed Trump, his staff, general Republicans and then white supremacists and white nationalists on Twitter.


@lukingsoft had just two tweets and 18 followers. Drudge Report is obviously a thing and so is Team Trump. Twitter suggests really popular accounts to new users, so Team Trump isn't a crazy suggestion, and following a news outlet like the Blaze or Breitbart presumably triggers the algorithm to suggest similar outlets like the Drudge Report. That kind of recommendation makes sense. If you're following the Blaze, you probably don't want to follow a news outlet like BuzzFeed. Recommendation systems aren't that hard in a space like Twitter once there's enough data to establish the overlapping networks a user exists in, aka who you follow and who follows you. Out of all the above suggestions, I only ended up following Team Trump because of its large follower count and the content it was retweeting: basically, a lot of regular users with the #MAGA hashtag.
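
To make the "overlapping networks" idea concrete, here is a minimal sketch in Python. It is not Twitter's actual algorithm, which this post can only observe from the outside. It assumes the simplest possible version of the idea: score each account you don't yet follow by how many of the accounts you already follow also follow it. The function name `recommend` and every account name in the example graph are hypothetical placeholders.

```python
from collections import Counter


def recommend(follows, user, top_n=3):
    """Toy follow-overlap recommender (an illustration, not Twitter's method).

    `follows` maps an account name to the set of accounts it follows.
    Candidates are ranked by how many of `user`'s followed accounts
    also follow them.
    """
    already = follows.get(user, set())
    scores = Counter()
    for followed in already:
        for candidate in follows.get(followed, set()):
            # Skip the user themselves and anyone they already follow.
            if candidate != user and candidate not in already:
                scores[candidate] += 1
    # Highest overlap first; break ties alphabetically so output is stable.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [name for name, _ in ranked[:top_n]]


# Hypothetical follow graph; these names are placeholders, not real accounts.
follows = {
    "new_user": {"outlet_a", "outlet_b"},
    "outlet_a": {"outlet_c", "fan_account"},
    "outlet_b": {"outlet_c"},
}

print(recommend(follows, "new_user"))  # ['outlet_c', 'fan_account']
```

Even in this toy version, the behavior described in this post falls out naturally: the more accounts from one cluster a new user follows, the more that cluster's neighbors dominate the suggestions.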

The second recommendation email is all Team Trump-specific fan accounts.

So...I followed none of the above.

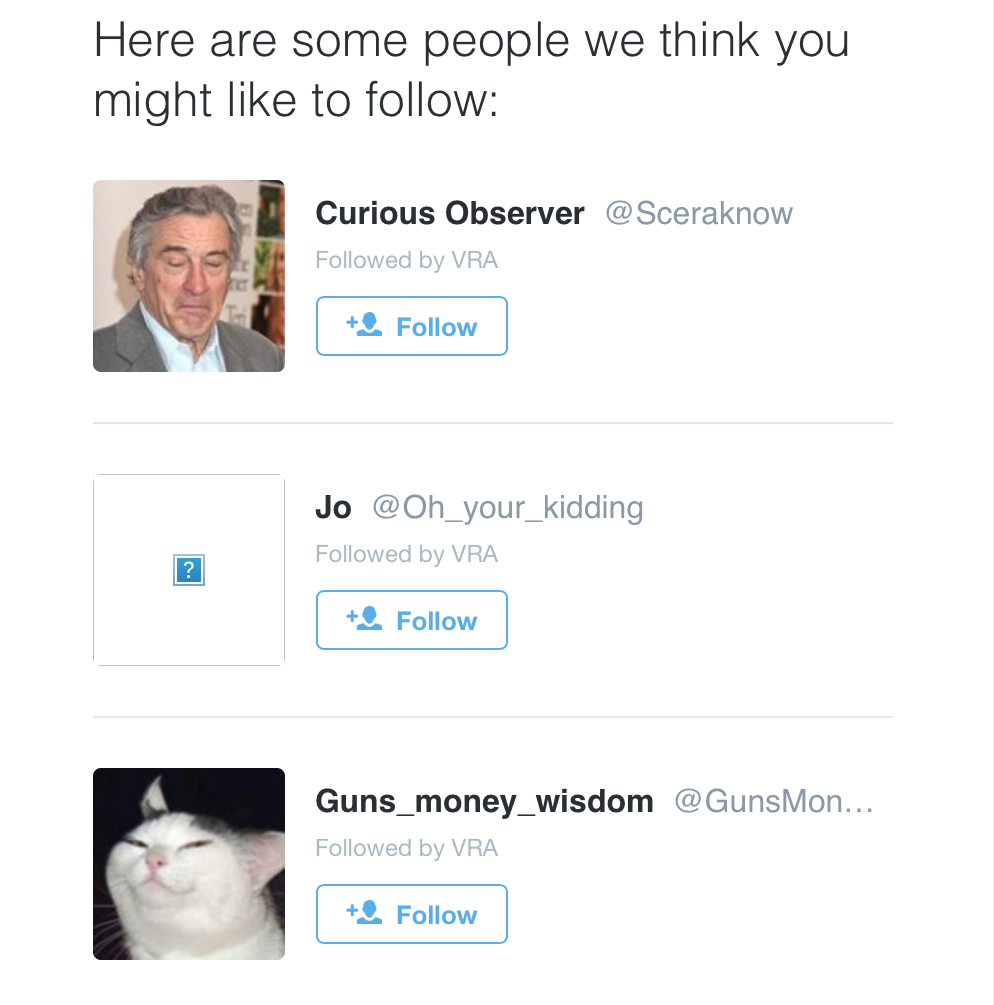

The third recommendation email I received

Also, I followed none of these.

@VRA, which stands for the Virginia Republican Alliance, is one of the first accounts I followed. I’d found them by searching for #TCOT, an acronym for Top Conservatives On Twitter, a hashtag used by similar users who also tweet with the #altright hashtag. The algorithm’s third round of suggestions didn’t include any power users or bots; the suggested accounts just...aren't used that often. @Sceraknow is a fairly standard account: obliquely racist. The full name (“Curious Observer”) feels libertarian, but the user routinely posts anti-Muslim memes. @Oh_your_kidding's bio states, "#Christian #IStandwithIsrael There is nothing in the world better than living in the country with a big front porch! RT are not endorsements." @GunsMoneyWisdom retweets majestic-looking Trump memes.

More recommendations offered on 12/30/16, so three days after my first recommendation email.

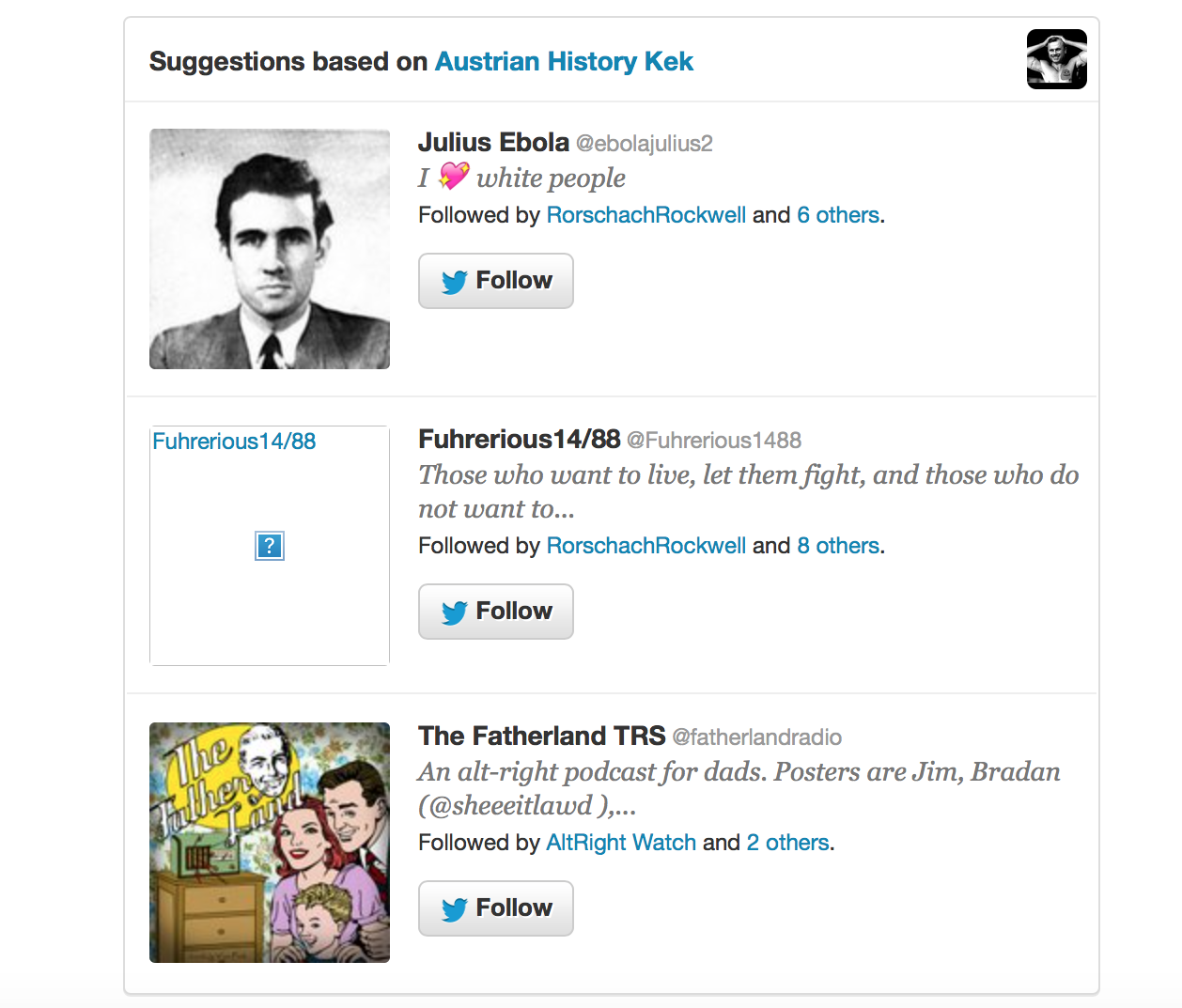

Okay, so these I did follow, because they had larger follower counts and were very obviously alt-right, either by stating so or by retweeting a lot of alt-right content.

Okay, so now the recommendations are starting to get alt-right specific, and white nationalistic. By the time Twitter recommended Julius Ebola, Fuhrerious14/88 and The Fatherland TRS, I had followed about 50 accounts that are explicitly alt-right. But this is just three days into my new Twitter account, and the suggestions are definitely less 'traditional conservatism' and more KEK-flavored. @ebolajulius2's and @fuhrerious1488's accounts have already been suspended, but @Fatherlandradio is still going strong.

This is what Twitter thinks my account is interested in: a blatant neo-nazi. The “14/88” in Fuhrerious14/88 is a bit of neo-nazi code -- H is the 8th letter of the alphabet, so “88” is a discreet abbreviation for Heil Hitler, while “14” refers to a 14-word slogan, "We must secure the existence of our people and a future for white children." The ADL calls it the "most popular white supremacist slogan in the world."

A few more suggestions from Twitter. You get the point.

My big takeaway? If you’re really excited about the alt-right, Twitter’s recommendation algorithm is really good at helping you find more of the same.

A main part of my research is ethnography: seeing how different groups are chatting within social networks and how their extended networks overlap. Because I'm studying neo-nazi accounts, Twitter's recommendation engine is doing its job and agnostically recommending accounts based on the patterns of who I follow. And from the above “here are accounts you may be interested in” emails, it seems to be doing that pretty well.

Open Lab for Journalism, Technology, and the Arts is a workshop in BuzzFeed’s San Francisco bureau. We offer fellowships to artists and programmers and storytellers to spend a year making new work in a collaborative environment.