Last month Google published "Inceptionism", an article about its latest experiments with a computer program that attempts to detect and re-create patterns in images, using techniques similar to those the human brain uses.

In the same way our brain identifies shapes and forms in clouds in the sky, Google's artificial neural network has been trained to recognise common features such as doors, dogs, and bicycles. The result is a new image, enhanced with information from what the network thinks it has seen.

Each layer of the program deals with features at a different level of abstraction. As the article explains, the first layer looks for information such as edges, surfaces, and orientation. Intermediate layers interpret the basic features to look for overall shapes or components, and the final layers recognise high-level features like the human body, creating complete interpretations.
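The core trick in the deepdream code Google later released is gradient ascent. Instead of adjusting the network to fit an image, it adjusts the image so that a chosen layer's activations get stronger, exaggerating whatever that layer already "sees". The toy sketch below illustrates only that idea, under heavy simplifying assumptions. The "layer" is a single hand-written edge detector (a 1D filter with kernel [-1, 1]), standing in for the kind of feature the first layers look for, not a trained Inception layer. The objective is half the sum of squared activations, which, as far as we know, is the default L2 objective in Google's notebook. All function names here are hypothetical.

```python
def layer(x, kernel=(-1.0, 1.0)):
    """Toy 'edge detector' layer: valid 1D cross-correlation of x with kernel.
    (An assumption for illustration; real deepdream uses a trained CNN layer.)"""
    k = len(kernel)
    return [sum(kernel[j] * x[i + j] for j in range(k)) for i in range(len(x) - k + 1)]


def objective(x, kernel=(-1.0, 1.0)):
    """L2 objective: half the sum of squared activations of the toy layer."""
    return 0.5 * sum(a * a for a in layer(x, kernel))


def input_gradient(x, acts, kernel=(-1.0, 1.0)):
    """Gradient of 0.5 * sum(acts**2) with respect to the input x.
    d(objective)/d(acts[i]) is acts[i], and d(acts[i])/d(x[i + j]) is kernel[j]."""
    grad = [0.0] * len(x)
    for i, a in enumerate(acts):
        for j, w in enumerate(kernel):
            grad[i + j] += a * w
    return grad


def dream_step(x, step_size=0.1, kernel=(-1.0, 1.0)):
    """One gradient-ascent step on the *input*, with the step normalised by the
    mean absolute gradient (the toy step_size is chosen for this small signal)."""
    acts = layer(x, kernel)
    grad = input_gradient(x, acts, kernel)
    mean_abs = sum(abs(g) for g in grad) / len(grad)
    if mean_abs == 0:
        return list(x)  # the layer detects nothing, so there is nothing to amplify
    return [xi + step_size / mean_abs * g for xi, g in zip(x, grad)]
```

Starting from a signal with a faint edge, such as `[0.0, 0.0, 0.1, 0.1]`, and calling `dream_step` a few times makes the edge grow steadily more pronounced, and `objective` rises with every step. Deepdream does the same thing with millions of pixels and far richer detectors, which is why dogs and eyes "emerge" from the image.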

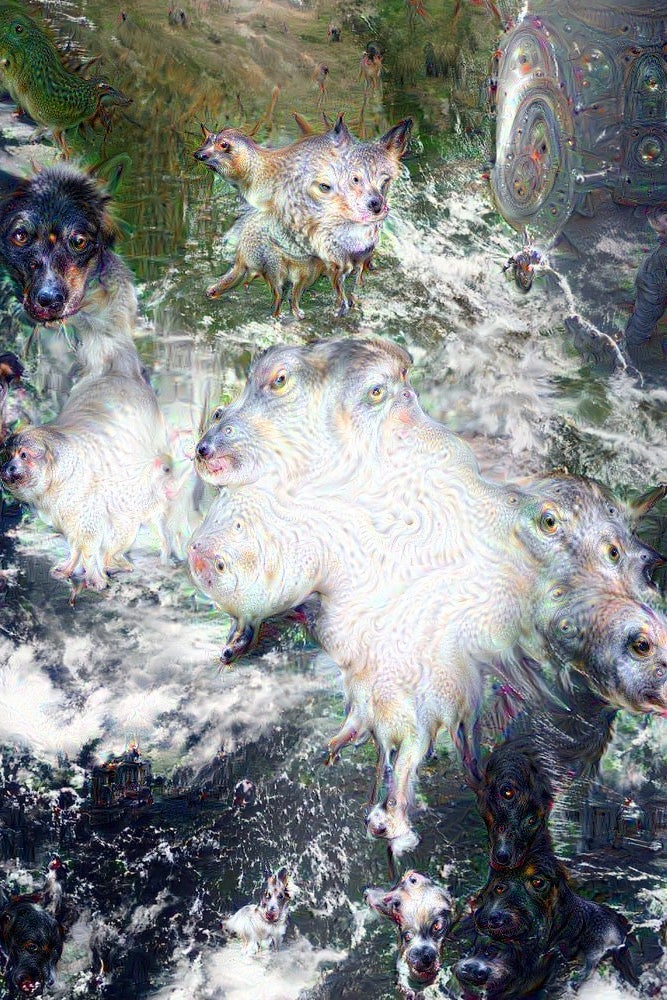

Google then open-sourced the code under the name "deepdream", and the internet went on an acid trip, producing a myriad of beautiful, sometimes unsettling, and always strangely compelling images, GIFs, and videos.

Keen developers and web services quickly put up portals to let people explore deepdream themselves; however, it is a computationally intensive process, and many of these services were soon overwhelmed. We wanted a thorough insight into what deepdream was capable of, so Paul, a BuzzFeed developer, set it up the hard way.

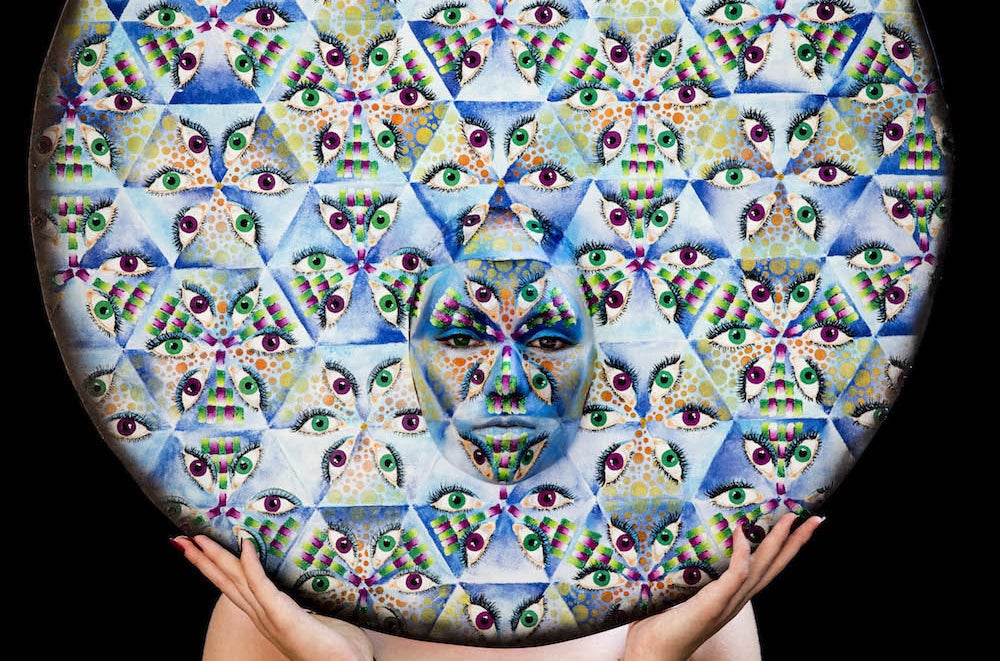

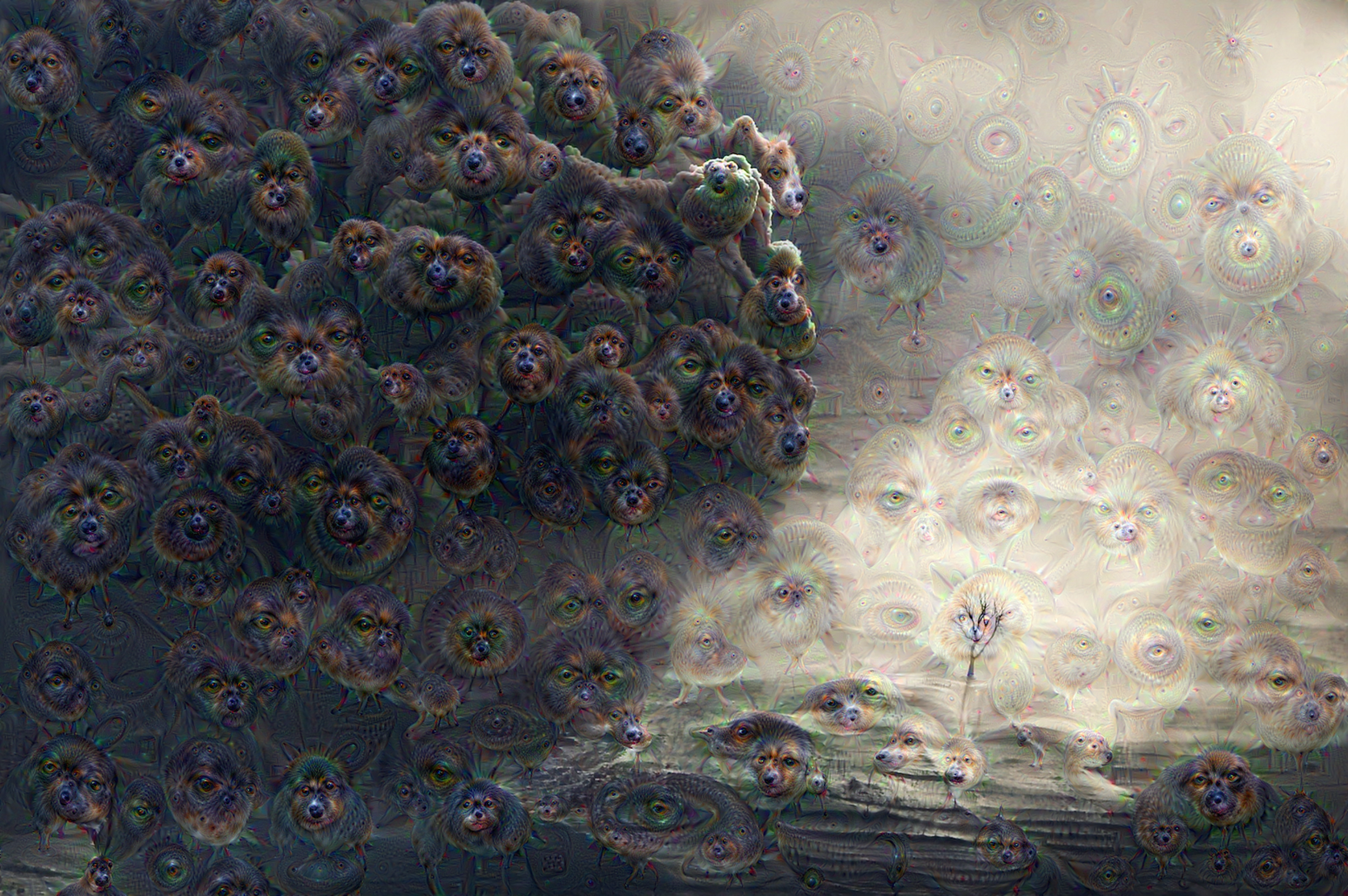

Here are some examples of people's #deepdream creations on Twitter:

A rare photography of The Puppyslug Nebula from the Hubble Telescope. #deepdream

walking in sand #deepdream

#deepdream

#deepdream manifested a Darwinian menagerie on the shore, boats on an ocean, and a cosmic web of life in the sky.

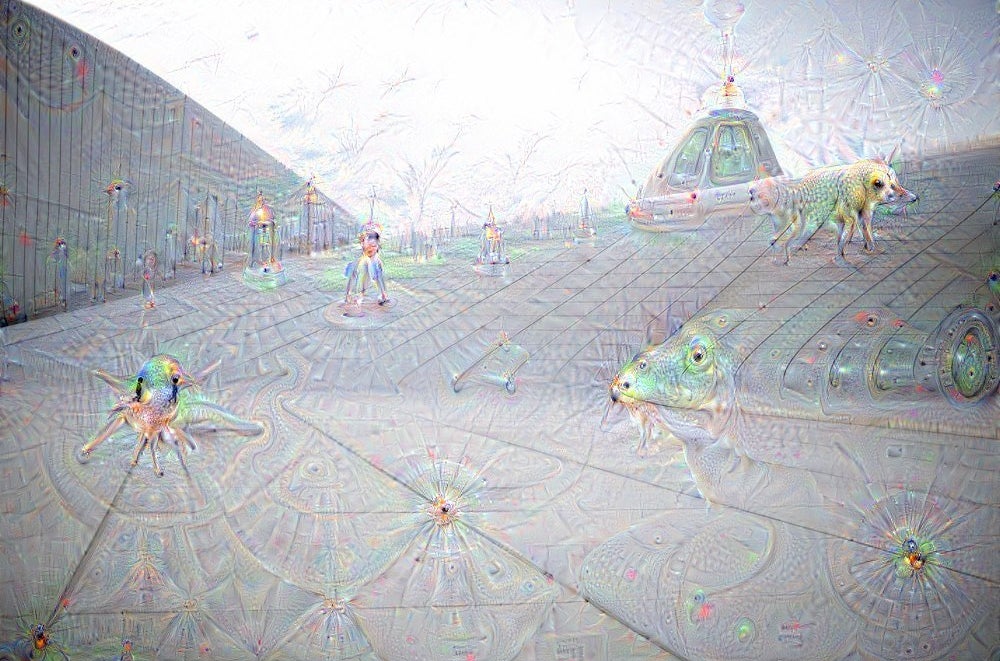

First, we wanted to get a feel for how deepdream responded to a variety of different images, using the default filter: "inception_4c/output". This would show us which kinds of image could be made more beautiful, which induced the most vivid and abstract "dreams", and whether any were left essentially unchanged.

Images with lots of fine detail change very little.

However, deepdream excels on large surfaces or shapes of a similar colour with little detail.

Now that we had a good idea of how deepdream responded to varying levels of detail, we ran a single image through all 54 filters available to us. This would tell us which filters produced the most interesting results.

The variety of interpretations the network produced was stunning. Combining what we had learned about which images were likely to work well with the 13 filters that produced the most interesting results, we ran a selection of images through deepdream at high resolution. Our machine took a 48-hour processing nap; here are the dreams it had:

This is our final selection of images, each run through four of our favourite filters.

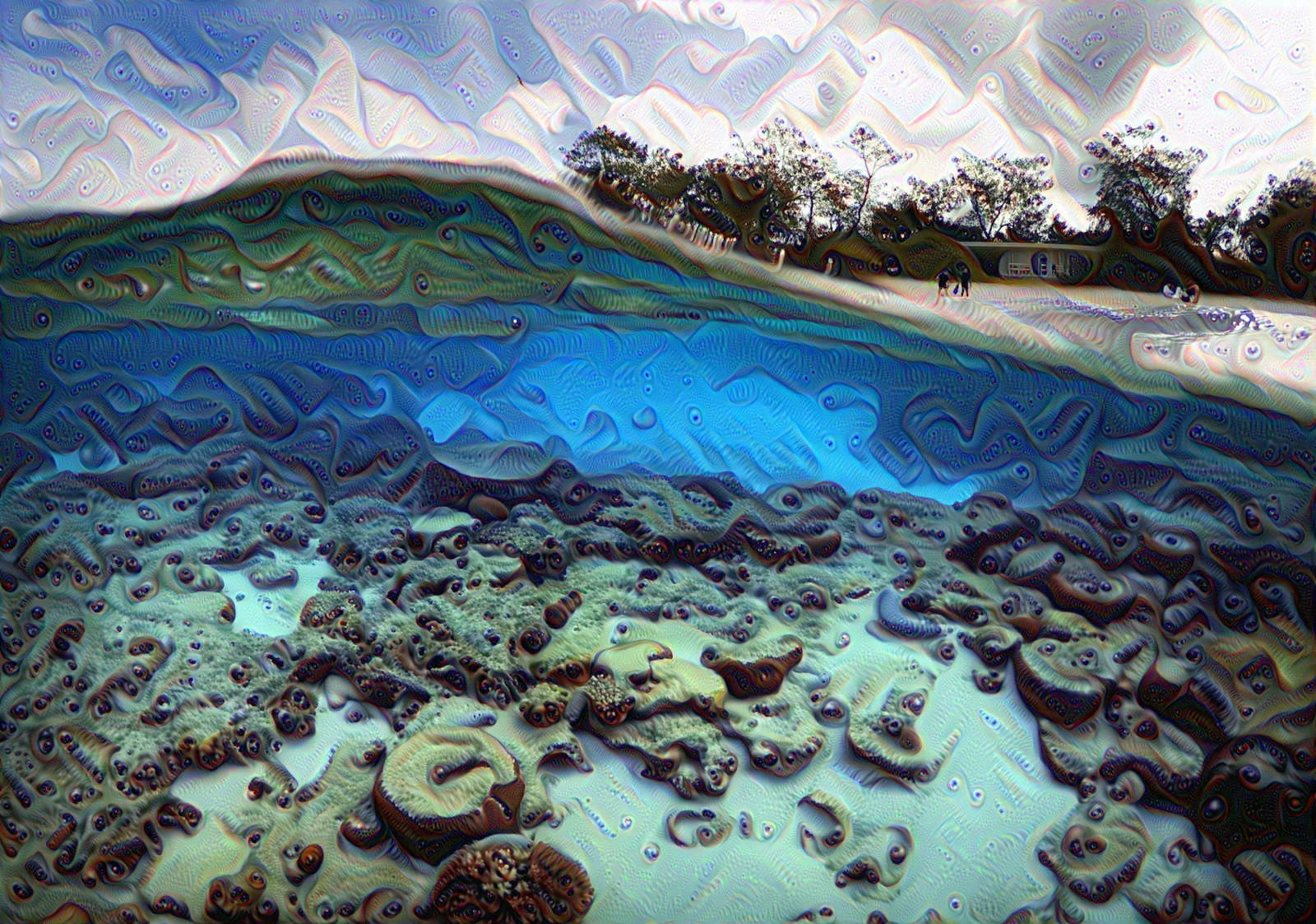

Here is a photo by David Gray of a turtle digging for food among coral in Lady Elliot Island's lagoon in Australia.

Here it is run through the conv2/3x3 filter.

inception_3a/3x3

inception_3b/output

inception_4d/pool

Here is a photo by Ali Al Qarni of Muslims praying at the Grand Mosque in the holy city of Mecca during Ramadan.

Here it is run through the conv2/3x3 filter.

inception_3b/output

inception_4b/pool

inception_4d/pool_proj

Here is a photo by Beawiharta of ash rising during an eruption from Mount Sinabung volcano in Indonesia.

Here it is run through the inception_3a/3x3 filter.

inception_3b/output

inception_4b/pool

inception_5a/pool_proj

Here is a photo by Warren Little of Pandelela Rinong of Malaysia diving during the semifinal at the diving world series in Dubai, United Arab Emirates.

Here it is run through the inception_3a/pool filter.

inception_3b/output

inception_4b/pool

inception_5a/pool_proj

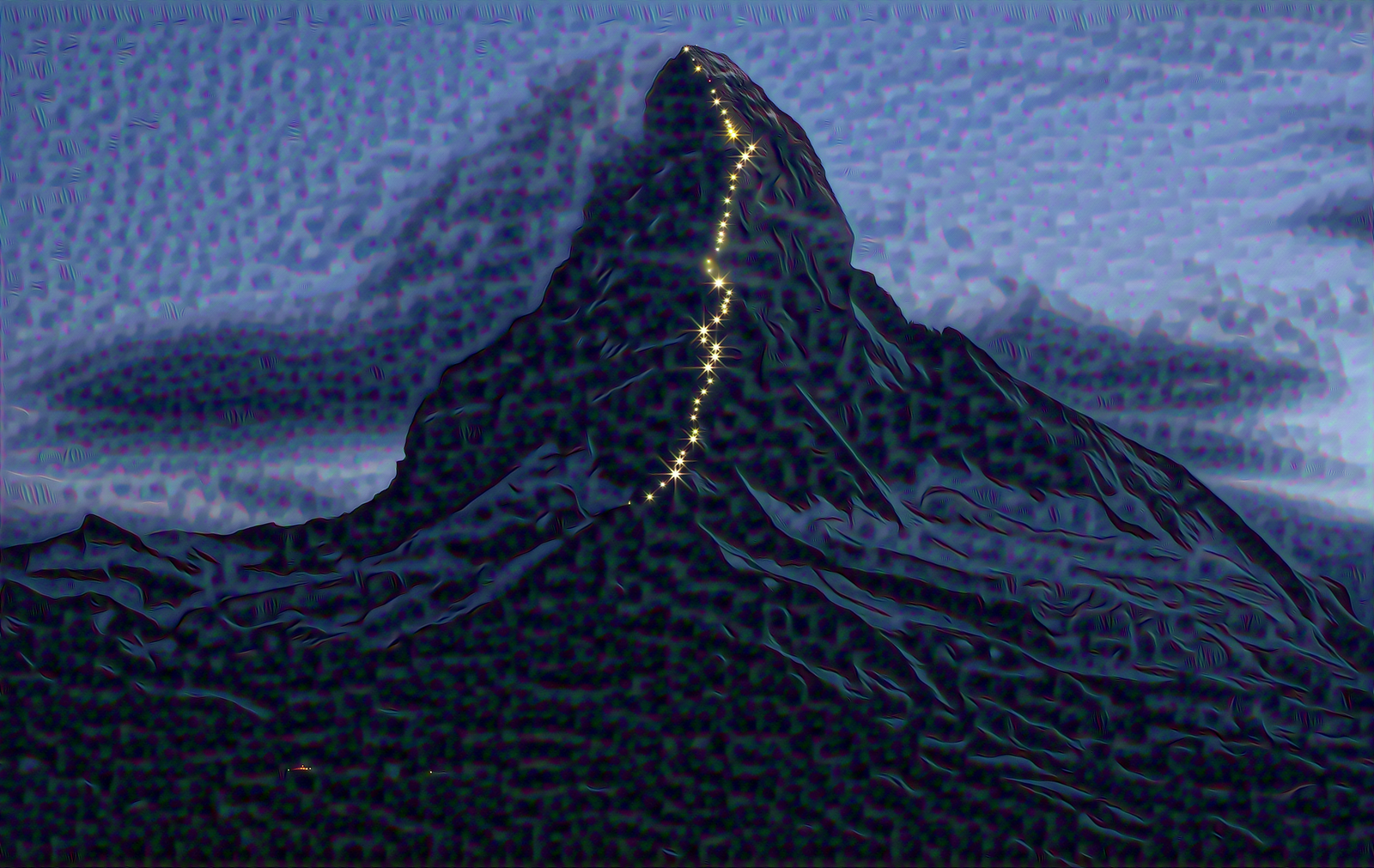

Here is a photo by Denis Balibouse of solar-powered lights along the Hoernli ridge on the Matterhorn in Zermatt, Switzerland.

Here it is run through the conv2/3x3 filter.

pool3/3x3_s2

inception_4b/pool

inception_5a/pool_proj

Here is a photo by Marcelo Del Pozo of a man riding through the flames during the "Luminarias", a religious tradition that dates back 500 years, in Alosno, Spain.

Here it is run through the inception_3a/3x3 filter.

pool3/3x3_s2

inception_4b/pool

inception_4d/pool_proj

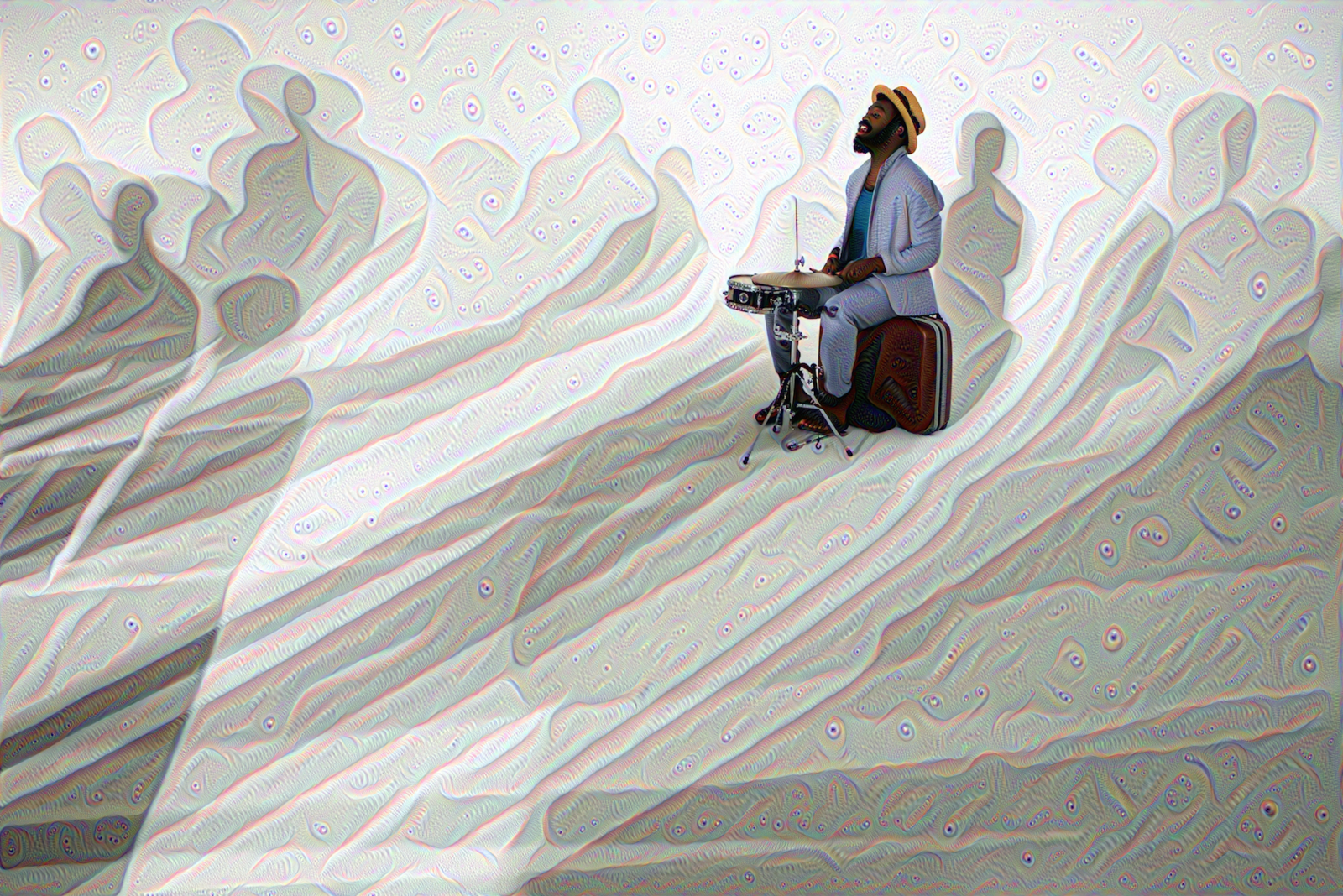

Here is a photo by Lucas Jackson of a drummer performing among the shadows of models during Men's Fashion Week in New York.

Here it is run through the conv2/3x3 filter.

inception_3a/3x3

inception_3b/output

inception_4d/pool

Here is a photo by Olivier Morin of Gianni Fontanesi, project coordinator of Nemo's Garden, checking submerged Biospheres in Noli, Italy.

Here it is run through the inception_3b/output filter.

inception_4b/pool

inception_4c/pool_proj

inception_5a/pool_proj

Here is an image by Lucas Jackson of lightning streaking across the sky as lava flows from the Eyjafjallajokull volcano in Iceland.

Here it is run through the inception_3a/3x3 filter.

inception_3b/output

inception_4b/pool

inception_4c/pool_proj

Here's how we did it:

You'll need to be comfortable on the Linux or OS X command line to set this up. We set up deepdream using image-dreamer, then modified its Vagrantfile so the virtual machine could use all the CPU and RAM the host could give it, because we found that the size of our input images drastically affected the processing power required.
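To see why input size matters so much, here is a rough back-of-the-envelope sketch. It assumes the defaults we believe Google's released deepdream notebook uses (4 octaves, each 1.4 times smaller than the last, 10 iterations per octave), and it treats the cost of one iteration as proportional to the number of pixels processed. That is a simplification: real cost also depends on the chosen layer, memory use and rounding of octave sizes. The function name `relative_work` is hypothetical.

```python
def relative_work(width, height, octave_n=4, octave_scale=1.4, iter_n=10):
    """Estimate 'pixel-iterations' for one deepdream run, assuming the cost of
    each iteration scales with the pixel count of the current octave."""
    total = 0.0
    for k in range(octave_n):
        scale = octave_scale ** k          # octave k is this many times smaller
        w, h = width / scale, height / scale
        total += iter_n * w * h            # iter_n gradient steps at this size
    return total


# Doubling both the width and the height quadruples the estimated work.
ratio = relative_work(2000, 1500) / relative_work(1000, 750)
```

Here `ratio` comes out at 4.0: because every octave shrinks both dimensions by the same factor, the total work still grows with the pixel count of the original image, so a high-resolution photo costs far more than a web-sized one.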

The shell scripts we used to test which images "dreamed" most effectively (one filter, many images) and which filters in the set were the most interesting (one image, many filters) proved very useful. You're welcome to use them as you wish.
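Our scripts were written in shell, but the idea behind both experiments is just a sweep over every image/filter combination. The Python sketch below shows that plan under our own assumptions: the function name `sweep`, the example filenames and the output naming scheme are hypothetical, and each planned run would still need to be handed to your deepdream setup.

```python
from itertools import product
from pathlib import PurePosixPath


def sweep(images, layers):
    """Yield (image, layer, output_name) for every image/layer combination.
    Layer names such as 'inception_4c/output' contain '/', so it is replaced
    with '-' to give each result a flat filename."""
    for image, layer in product(images, layers):
        stem = PurePosixPath(image).stem
        yield image, layer, f"{stem}__{layer.replace('/', '-')}.jpg"


# Experiment 1: one filter, many images.
one_filter = list(sweep(["turtle.jpg", "volcano.jpg"], ["inception_4c/output"]))

# Experiment 2: one image, many filters.
one_image = list(sweep(["turtle.jpg"],
                       ["conv2/3x3", "inception_3b/output", "inception_4d/pool"]))
```

The number of runs is the number of images times the number of filters, so a sweep over many high-resolution images and 13 filters grows quickly.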

This user on Imgur provides a useful reference to the available filters if you'd like to save time!

You can see the full gallery Google used to introduce deepdream to the world here.