If you’ve ever wanted to find out where heat is leaking from your house, or locate the warmest seat on the train (for some reason), you’ll need to use a thermal imaging camera. You could spend hundreds of dollars on a commercial one, but making your own is more affordable and more fun.

A lesser-known capability of very expensive professional thermal cameras is their ability to detect and visualize chemical leaks. At the Open Lab, I recently set out to see if I could build a natural gas sensing camera using a new module from a small company in Germany. If their performance promises held up, and my back-of-the-envelope calculations were anywhere close to correct, it just might work. Unfortunately, their sensor was noisier than I had hoped, and the camera is not quite up to the task of gas sensing. Instead, I am releasing what I have built as Thermografree, an open source forward-looking infrared (FLIR) thermal camera, and one of the first open source, affordable thermographic cameras with usably high resolution. If you want to jump straight to building your own, you can find documentation on GitHub.

Like all infrared cameras, Thermografree can measure the temperature of distant objects, which is useful for applications ranging from building efficiency analysis to search and rescue. An open source thermal camera is new, but the capability itself isn’t especially novel. (Though perhaps Richard Stallman can finally check his house for air leaks.) However, unlike the sensors in most consumer infrared imagers, the sensor in Thermografree responds to a much wider range of infrared wavelengths. This could enable a variety of specialized applications, including gas sensing.

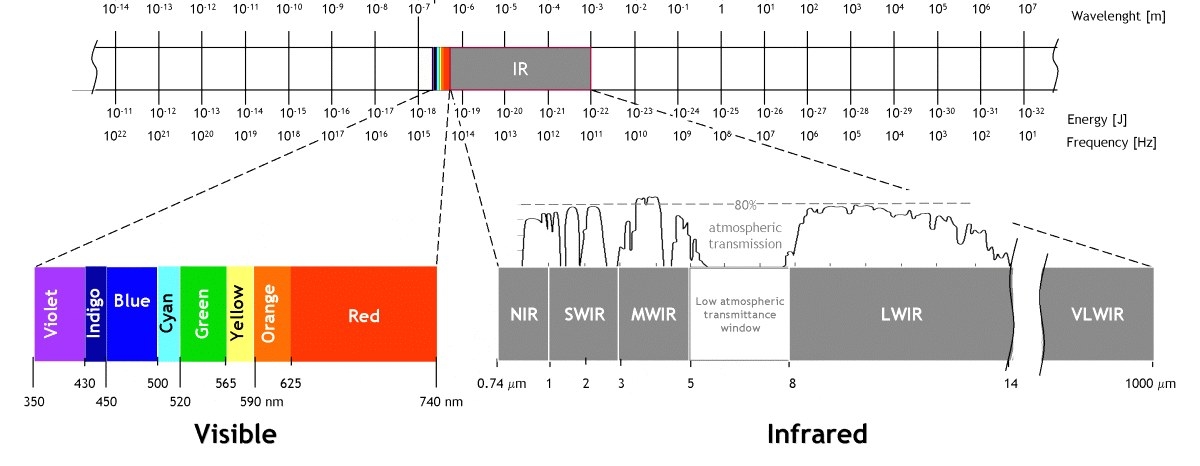

To understand how this works, a refresher on light and color might be helpful. Light includes not only the colors of visible light we know and love, but also near-infrared light (NIR), which is invisible to the human eye but behaves like visible light, and long wave infrared light (LWIR), which we experience as heat.

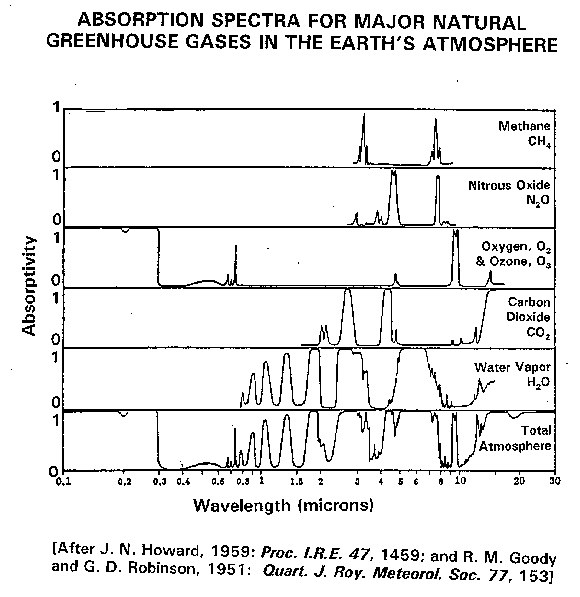

And about halfway between near-infrared and long wave infrared sits the mid wave infrared (MWIR) spectrum. Methane, carbon dioxide, benzene and many other important gases absorb specific frequencies of light within this range.

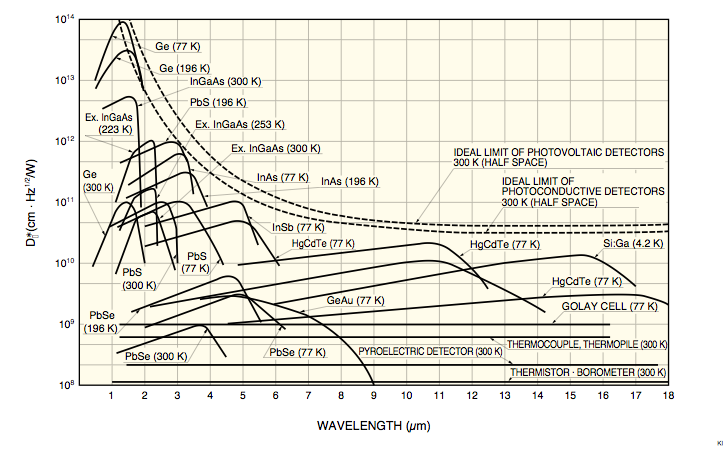

Most commercially available thermal imaging cameras detect only long wave infrared (wavelengths between 8 and 14 microns), so they aren’t affected by these gases. But an infrared camera sensitive only to narrow bands of mid wave infrared, between 3 and 5 microns, would show otherwise invisible gas plumes as dark clouds. Commercial products that do exactly this are available, but they cost tens of thousands of dollars, putting them out of reach for many journalists and community organizations that might want to document atmospheric leaks.
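Why do everyday thermal cameras work in long wave infrared? Wien’s displacement law gives the wavelength at which a blackbody emits most strongly, λ_max = b / T, with b ≈ 2898 µm·K. The short Python sketch below is not part of the Thermografree code, and the example temperatures are illustrative choices; it shows that room-temperature objects peak near 10 microns, inside the 8 to 14 micron band, while an object has to be several hundred degrees Celsius before its peak moves into the 3 to 5 micron MWIR band.

```python
# Wien's displacement law: peak emission wavelength of a blackbody.
WIEN_B_UM_K = 2897.771955  # Wien's displacement constant, in micrometre-kelvin


def peak_wavelength_um(temp_k: float) -> float:
    """Wavelength (microns) at which a blackbody at temp_k emits most strongly."""
    if temp_k <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return WIEN_B_UM_K / temp_k


def band_name(wavelength_um: float) -> str:
    """Rough band label using the ranges quoted in the article."""
    if 3.0 <= wavelength_um <= 5.0:
        return "MWIR (3-5 um)"
    if 8.0 <= wavelength_um <= 14.0:
        return "LWIR (8-14 um)"
    return "outside both bands"


# Illustrative temperatures, in degrees Celsius.
for label, temp_c in [("room-temperature wall", 20.0),
                      ("human skin", 33.0),
                      ("object at 400 C", 400.0)]:
    temp_k = temp_c + 273.15
    lam = peak_wavelength_um(temp_k)
    print(f"{label:>22}: {temp_k:6.1f} K -> peak {lam:5.2f} um, {band_name(lam)}")
```

Because the emission peak of ordinary scenes sits in LWIR, an MWIR-only camera looking at room-temperature objects has far less light to work with.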

Why methane?

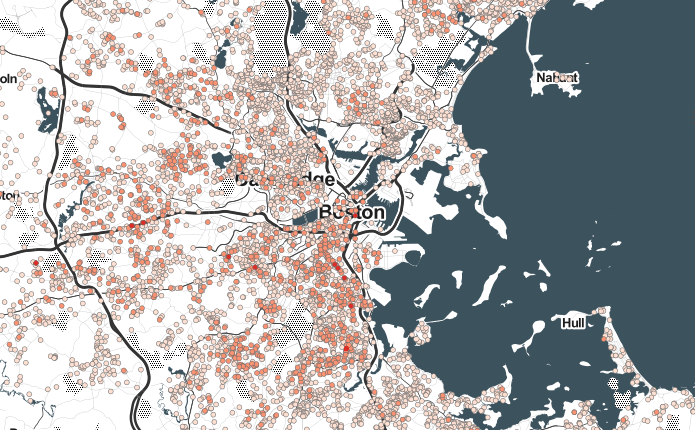

In Massachusetts, where I moved from, there are over 20,000 natural gas leaks, a result of aging infrastructure, weather damage and construction. While most leaks are pretty small, some, called “super-emitters,” can be very significant. This matters because natural gas, which is mostly methane, is a potent greenhouse gas, trapping up to 100 times more heat in the atmosphere than carbon dioxide.

Knowing a leak exists isn’t enough to get it repaired — activists in Boston recently held a birthday party for a leak that has been documented for 30 years — but it is a start. We tend not to think about what we can’t see, even if it has an impact on our infrastructure, health, and environment. Invisible gas leaks are easy to ignore.

A methane-sensitive camera could also detect certain volatile organic compounds, including benzene and other chemicals that enter the atmosphere during the production, transport and storage of fossil fuels. Many of these gases are known carcinogens.

A gas sensing camera can document other hydrocarbons as well, such as vapor leaking from this oil well (video on YouTube).

By visualizing and documenting these safety and environmental impacts more directly, journalists could help audiences grasp the scale and impact of the issue on an emotional level as well as an intellectual one. But to do that, we’d need access to cheaper cameras.

Building a cheaper camera

Last fall, I saw an opportunity to make a camera that might work for gas detection at a fraction of the price of professional IR cameras. A small company in Germany, Heimann Sensor, announced a new line of thermopile arrays. Unlike many other infrared sensors, thermopiles respond to heat absorbed across a broad range of wavelengths rather than being tied to a specific spectral band. And because they do not require cooling, they’re much easier to use in a DIY project. However, thermopile arrays are typically much less sensitive than more conventional cooled detectors. Perhaps, though, that tradeoff could be accommodated by narrowing the design and application space of a low-cost device: a camera for documenting known gas leaks rather than discovering new ones, for example.

With Public Lab, I discussed and shared my thoughts and calculations about whether this device could be used for gas sensing. My signal-to-noise analysis, based on information provided by Heimann Sensor, suggested that their thermopile array would sit right on the edge between workable and impossible (plus or minus a few orders of magnitude).

I decided that the best way to test my conclusions would be to build a camera with the thermopile array and experiment with it in the real world.

I connected the IR module to a Raspberry Pi, and as soon as I had written a driver to let the two devices communicate, I saw the first hints that the project might not work. The thermal image was significantly noisier than I had hoped, and the calibration data supplied by the factory did not seem consistent with the data coming from the sensor. Furthermore, to restrict the camera’s sensitivity to the part of the infrared spectrum absorbed by methane, the thermopile requires a bandpass filter. This filter blocks almost all of the light that would otherwise reach the sensor, further reducing the signal-to-noise ratio. Indeed, after installing the filter, I could not resolve an image of room-temperature objects, and gas remained invisible.
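To get a feel for how much light a methane filter removes, one can integrate Planck’s law over the sensor’s quoted 2 to 16 micron range and over a narrow methane band. The sketch below assumes a 3.2 to 3.4 micron passband (near methane’s strong C–H absorption around 3.3 microns; the actual filter may differ), a 295 K scene, and an ideal blackbody. It ignores optics, emissivity and the detector’s spectral response, so treat the result as an order-of-magnitude estimate only.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_radiance(wavelength_m: float, temp_k: float) -> float:
    """Blackbody spectral radiance, W / (m^2 * sr * m)."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return (2 * H * C**2 / wavelength_m**5) / math.expm1(x)


def band_radiance(lo_um: float, hi_um: float, temp_k: float, steps: int = 2000) -> float:
    """Integrate spectral radiance over [lo_um, hi_um] with the trapezoidal rule."""
    lo, hi = lo_um * 1e-6, hi_um * 1e-6
    dx = (hi - lo) / steps
    total = 0.5 * (planck_radiance(lo, temp_k) + planck_radiance(hi, temp_k))
    for i in range(1, steps):
        total += planck_radiance(lo + i * dx, temp_k)
    return total * dx


T_SCENE = 295.0                                 # ASSUMED scene temperature, K
broadband = band_radiance(2.0, 16.0, T_SCENE)   # sensor's quoted 2-16 um range
methane = band_radiance(3.2, 3.4, T_SCENE)      # ASSUMED methane filter passband
ratio = methane / broadband
print(f"filtered / broadband radiance: {ratio:.2e}")
```

Under these assumptions the filtered band carries well under a tenth of a percent of the broadband radiance, which is why a filtered thermopile struggles with room-temperature scenes.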

While this iteration didn’t yield a working gas camera, there are several avenues of investigation that could yet recover some of the device’s promise:

- Could Thermografree detect gas in very warm environments, or in places with lots of incident MWIR light?

- Could the sensor be cooled to reduce the noise?

- Could longer integration times, filtering, or image processing on the output recover a meaningful image?

- Could the broadband nature of the infrared sensor enable a different kind of application?
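The third question rests on a standard property of random noise: averaging N frames of uncorrelated (white) noise reduces its standard deviation by roughly √N. The simulation below is a hypothetical stand-in for the real sensor; `capture_frame`, the flat 22 °C scene with a faint feature, and the 2 °C per-frame noise level are assumptions, not measured Thermografree values. Averaging does nothing for fixed-pattern noise, such as per-pixel calibration offsets, which has to be corrected separately.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

ROWS, COLS = 32, 32                   # Thermografree's infrared resolution
scene = np.full((ROWS, COLS), 22.0)   # HYPOTHETICAL flat scene at 22 C
scene[10:20, 10:20] += 0.5            # HYPOTHETICAL faint 0.5 C feature
NOISE_STD = 2.0                       # ASSUMED per-frame noise, degrees C


def capture_frame() -> np.ndarray:
    """Hypothetical stand-in for one sensor read: true scene plus white noise."""
    return scene + rng.normal(0.0, NOISE_STD, size=scene.shape)


def averaged_frame(n_frames: int) -> np.ndarray:
    """Average n_frames captures; uncorrelated noise shrinks by about sqrt(n)."""
    return np.mean([capture_frame() for _ in range(n_frames)], axis=0)


for n in (1, 16, 256):
    residual = averaged_frame(n) - scene
    print(f"{n:4d} frames: noise std ~ {residual.std():.3f} C "
          f"(expected {NOISE_STD / np.sqrt(n):.3f})")
```

The cost is time: the scene, and any gas plume in it, has to stay put while the frames are collected.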

I will be investigating these options further, and you are welcome to follow along and help out by tracking the project and contributing to it on GitHub.

Technical details

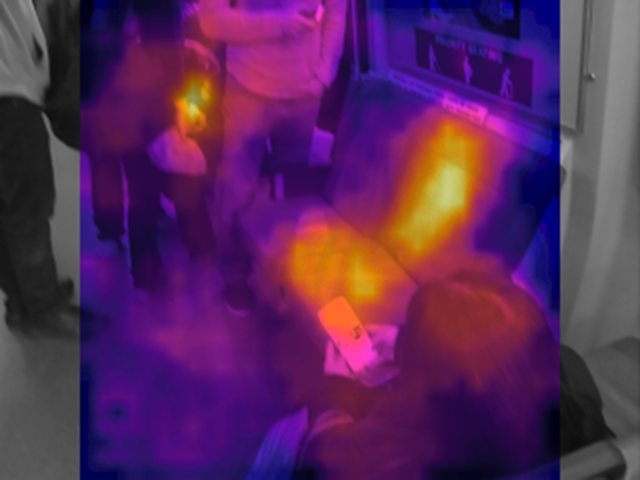

The Heimann infrared imaging module inside Thermografree is an unusual, very broadband sensor. While most consumer thermal cameras detect infrared light only in the long wave range (roughly 8 to 14 microns), this sensor detects light from 2 to 16 microns, which could allow filtering for specialized applications. The device has an infrared resolution of 32x32 pixels, lower than the FLIR™ Lepton’s 80x60 pixels, but the module costs only around $50, far less than the $250 price tag of the FLIR™ Lepton module. Thermografree also contains a conventional visible light camera with the same field of view as the infrared imager, and enhances the perceived resolution by superimposing the thermal data on the visible image.
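Because the visible camera shares the infrared imager’s field of view, superimposing the two can be as simple as stretching the 32x32 thermal grid to the visible image’s size and blending. The NumPy sketch below is illustrative only and is not Thermografree’s GUI code: it assumes the two images are already aligned (ignoring parallax between the lenses), uses nearest-neighbour upscaling, and substitutes a simple blue-to-red tint for a real color map. The function names and the 640x480 visible frame are hypothetical.

```python
import numpy as np


def upscale_nearest(thermal: np.ndarray, out_shape: tuple) -> np.ndarray:
    """Nearest-neighbour upscale of a low-res thermal array to out_shape (rows, cols)."""
    rows = np.arange(out_shape[0]) * thermal.shape[0] // out_shape[0]
    cols = np.arange(out_shape[1]) * thermal.shape[1] // out_shape[1]
    return thermal[rows[:, None], cols[None, :]]


def overlay(visible_gray: np.ndarray, thermal: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """Blend a normalised thermal map onto a grayscale visible image.

    visible_gray: 2-D float array with values in [0, 1]
    thermal: 2-D array of temperatures (any units), e.g. 32x32
    Returns an RGB float array in [0, 1]; cold pixels tint blue, warm pixels red.
    """
    t = upscale_nearest(thermal, visible_gray.shape).astype(float)
    span = t.max() - t.min()
    t_norm = (t - t.min()) / span if span > 0 else np.zeros_like(t)
    heat_rgb = np.stack([t_norm, np.zeros_like(t_norm), 1.0 - t_norm], axis=-1)
    base_rgb = np.repeat(visible_gray[..., None], 3, axis=-1)
    return (1.0 - alpha) * base_rgb + alpha * heat_rgb


# Usage with synthetic data: a random 32x32 "thermal" frame over a flat gray image.
thermal = np.random.default_rng(1).normal(22.0, 1.0, size=(32, 32))
visible = np.full((480, 640), 0.5)
img = overlay(visible, thermal)
print(img.shape)  # (480, 640, 3)
```

Nearest-neighbour scaling keeps each thermal pixel as a visible block, which is honest about the sensor’s true resolution; a smoother interpolation would look nicer but imply detail the sensor never measured.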

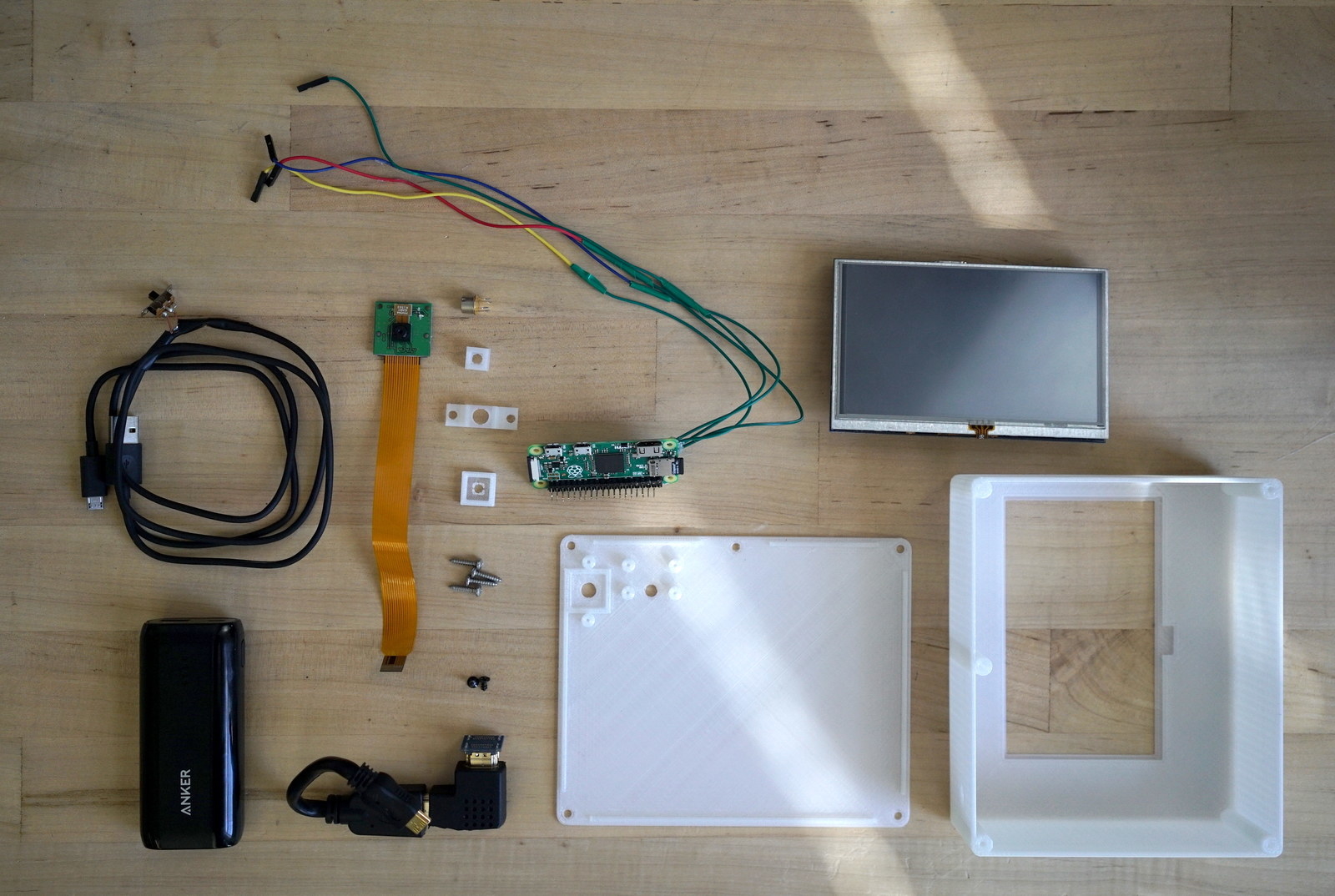

You can build a Thermografree camera yourself using a Raspberry Pi, the infrared sensor, a camera, and a few additional components. Build details, including STL files for 3D printing an enclosure and the source code for the driver and GUI application, are documented in the project’s GitHub repository.

Update, 2/6/2016, 11:26AM PST: Clarified sources for several images. Changed device name.

Open Lab for Journalism, Technology, and the Arts is a workshop in BuzzFeed’s San Francisco bureau. We offer fellowships to artists and programmers and storytellers to spend a year making new work in a collaborative environment. Read more about the lab.