Following FreeSpeech: Behind The Scenes In The Creation Of A New App For Language Learning

"In American culture, the currents of fear and compassion run side by side. It's easy for people to look over the boundary from "neurotypical" into "neurodiverse" - to come from a position of ability and peer into disability - and to feel sorry, to feel sad. It seems to be a lot harder to really enter into the world in which I work voluntarily [...] That means we have a lot of brilliant, educated, wealthy entrepreneurs who work in Silicon Valley and donate to education causes, but not a ton of them quit their jobs in Silicon Valley to move back to India and develop AAC because they feel compelled to serve."

Lucas Steuber and Ajit Narayanan in Portland, OR; June 6, 2015

It’s March 14, 2015, and I’m running late. That in itself isn’t a huge surprise; I’m usually at least a few minutes behind schedule. This is a special kind of late, though - the kind experienced by Silicon Valley first-timers who think it’s a good idea to arrange a meeting at Starbucks. The only thing more common than a Starbucks in the Bay Area is a software engineer, and finding a software engineer at a Bay Area Starbucks is a little like finding a needle in a pile of needles.

Ajit Narayanan, though, was easy to spot. In the field of Augmentative and Alternative Communication (think Stephen Hawking and his iconic robot voice), Ajit is something of a celebrity – an engineer who cracked a problem that linguists and speech pathologists like myself have been working on for ages: how to give access to language – real, compositional, natural language – to children who lack the symbolic understanding necessary to pair words with meaning. His efforts were met with a TED talk, an award presented to him by the President of India, recognition by MIT as one of the top worldwide innovators under 35, and the sort of “nerd cred” that transcends disciplines so thoroughly that hearing he was in the United States warranted a last-minute plane ticket and a slightly-too-fast use of a rental car.

We spoke for a while about some projects I wanted to work on together, discussed the existing products from his company (Avaz), and then he asked me if he could show me something interesting.

That was the first time I saw FreeSpeech.

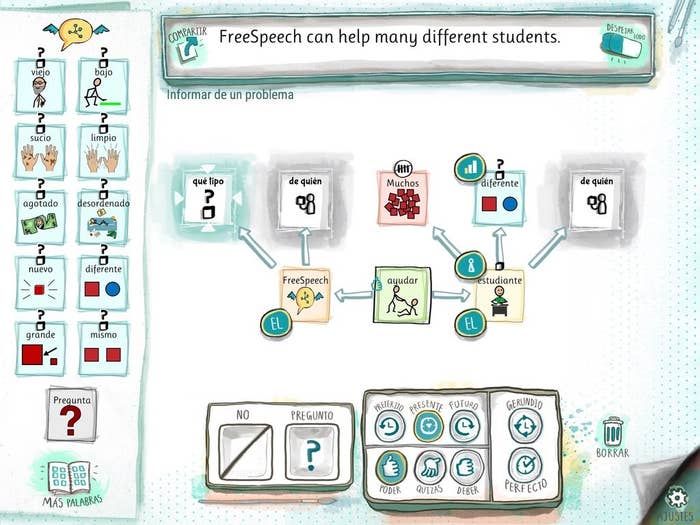

The FreeSpeech app as it exists today, composing from Spanish to English

To understand why this meeting was so important, let’s back up a bit. The field of Augmentative and Alternative Communication (AAC) isn’t a new one by any means. In fact, writing itself is a form of "alternative" communication in that it conveys meaning via a medium other than oral speech. That’s not the way that AAC is normally conceptualized, though; the image that comes to mind for most is sign language, or the device used by Stephen Hawking, or perhaps pictures exchanged to make requests in special education classrooms. AAC has positively impacted the lives of many people with disabilities, but one problem that has traditionally plagued it is that whatever we build is essentially both static and prescriptive in terms of what it allows people to say.

As an example: Say that a child with Autism enters kindergarten with no oral language. He or she may start out by exchanging pictures with their teacher to make requests or comments, and at some point move up to a “high tech” AAC device – these days usually in the form of an iPad with an app that uses a synthesized voice to speak preprogrammed words. A device may have the words “I,” “want,” and “snack,” but the student will learn to say “snack want.” That’s not a problem in terms of comprehension; we understand what they want, and often we give it to them. However, it is a problem for developmental literacy. Every time a child hears that phrase, it reinforces a misunderstanding of the structure of language that can interfere with their eventual ability to read and write - not to mention their ability to be understood.

Over the past 30 years, many different Speech-Language Pathologists and other educators have taken a stab at ways to address this. Most colleagues of mine acknowledge that it's a problem, but say that fixing it may be out of reach. I've been told many times that the structure of language is “too complex” to really quantify in a way accessible through AAC – which is really just code for “we don’t know how.” As the semi-rare combination of Speech-Language Pathologist and Applied Linguist, I see this problem as a big deal. I believe that both the beauty and strength of language lie in what Noam Chomsky called its “infinite generability”: the ability to say an unlimited number of things with a limited number of words. Without access to that generability, we're limiting children who use AAC in a subtle but insidious manner: We're allowing them to communicate, but only giving them access to what we want them to say. We've inadvertently hamstrung their power of creative self-expression.

Ajit Narayanan at TED, 2013

Back to the Starbucks. In 2013, Ajit delivered a TED talk on the concept behind what would become FreeSpeech. I had seen the talk before we met, but it had been almost two years since he gave it, and honestly I wasn't sure the idea would become reality. I was deep in clinical work day in and day out and heartbroken by the limitations I saw placed on the kids I served. I flew down to meet Ajit because I thought I had some ideas of how we could start doing better as an industry in the United States, and the radically different approach being taken in India - where Avaz was the only AAC developer for a billion people - held promise. I was looking for a tool that I could wield to forge change.

When we started working together, the field of AAC in the United States was simultaneously revolutionary and stagnant. Entrenched manufacturers of AAC - those who had been doing it since the 1970s or earlier - had missed the opportunity presented by the launch of the iPad, instead sticking to their dedicated, insurance-funded devices until shaken into motion by the rise of an upstart competitor called Proloquo2Go. Meanwhile, the rising ubiquity of iOS brought many new players into the field, but they often produced products that lacked any clinical evidence to support the decisions they were making. What was needed was the rare combination of tech savant and special educator, and if one were to draw a Venn diagram of the groups "app developer" and "research linguist," the overlap would be small indeed.

In American culture, the currents of fear and compassion run side by side. It's easy for people to look over the boundary from "neurotypical" into "neurodiverse" - to come from a position of ability and peer into disability - and to feel sorry, to feel sad. It seems to be a lot harder to really enter into the world in which I work voluntarily; almost all of my colleagues in Speech-Language Pathology are here for a reason (typically, past experience with a language disorder in a family member or friend). That means we have a lot of brilliant, educated, wealthy entrepreneurs who work in Silicon Valley and donate to education causes, but not a ton of them quit their jobs in Silicon Valley to move back to India and develop AAC because they feel compelled to serve.

I saw FreeSpeech in that Starbucks in March, and a few months later Ajit called and asked if I'd help to make it a reality - not just in promise, but in clinical practice. I got to help forge the tool and the change.

FreeSpeech forming a question from a group of picture tiles

FreeSpeech is different. It's unlike anything else that exists right now, and my first and most important responsibility along this journey has been to make sure that it works. Not "works" in terms of the engineering or other core science - fortunately, Ajit and his team have that down. I mean "works" in terms of actual intervention with kids.

There are hundreds of hours of video of me in the world now using this tool in therapy sessions, and of course the obvious truth is that not everything we built did work. A lot of brilliant ideas were left on the cutting room floor, and some things that I would never have expected ended up being the most important features. I wrote late-night emails discussing the precise shape and location of the garbage can. Some really thoughtful disagreements occurred around whether the app icon should have wings. The color and texture of the background? Very deliberate. Oh and - in case this is a surprise to anyone - Indian English and American English don't always match up. Who says they will "revert" to an email?

The core promise, though, has held true: To give children the ability to build meaning from their thoughts; to build grammatical sentences automatically from a jumble of picture tiles; to create a universal translator of sorts that constructs comprehensible language from the building blocks of raw intent. It works. I still can't always believe it, but it does - and we're nowhere near finished making it better.

It's March 3, 2016, and I'm running late. Again, I'm usually behind schedule - it's not a huge surprise. This is a special kind of late, though: The kind experienced by someone so happily busy, so happily overwhelmed that there couldn't have ever been adequate preparation. Yesterday Apple featured FreeSpeech in first place in "Best New Apps." Not the best of education, or of special education, or even of language learning, but as the best overall. That's a position never before occupied by any application intended for special education, and it's been a whirlwind since we first launched the app less than a month ago. I woke up this morning feeling fulfilled but somehow suspicious - wondering, as I did before, if I was doing enough, if we could do better.

Today I met with a student, a seven-year-old boy with Autism who has no oral language. For several years he's been using an AAC device, and I introduced him to FreeSpeech as we developed it to gather data on whether it was effective for him (it's really not intended as an AAC app on its own - rather a complement to language acquisition for any child). As we worked he used his device and found the word "airport" but then became frustrated, seemingly unable to find the words he needed. He reached over and took my iPad instead and used FreeSpeech to write "the airport doesn't have water" and then bounded away laughing happily. I thought he was just playing with the app up until they were leaving and I asked his mother. They had been to the airport. The water actually was broken in the restroom. He wanted to tell me that - he couldn't at first because he was limited by the tool he had, but a new tool freed him.

We can do better, and we will do better - keep watching. I promise it. But today I found out that the airport doesn't have water, and I'll take that as a win.

__________

Lucas Steuber, MA-T MS CCC/SLP, is the Director of Clinical R&D for Avaz, Inc. and the CEO of LanguageCraft, a private practice and consulting company in Portland, OR. He helped to create the new iOS app FreeSpeech, which can be found on the App Store here.
