YouTube has allowed videos promoting misinformation about the coronavirus pandemic to be viewed by millions of people, it can be revealed, leading campaigners to demand emergency legislation to remove “morally unacceptable” conspiracy theories from the platform.

Videos falsely claiming that coronavirus symptoms are actually caused by 5G phone signals or that it can be healed by prayer — as well as claims that the UK government is lying about the danger posed by the virus — have been viewed 7 million times, according to an analysis by the Center for Countering Digital Hate that has been shared with BuzzFeed News.

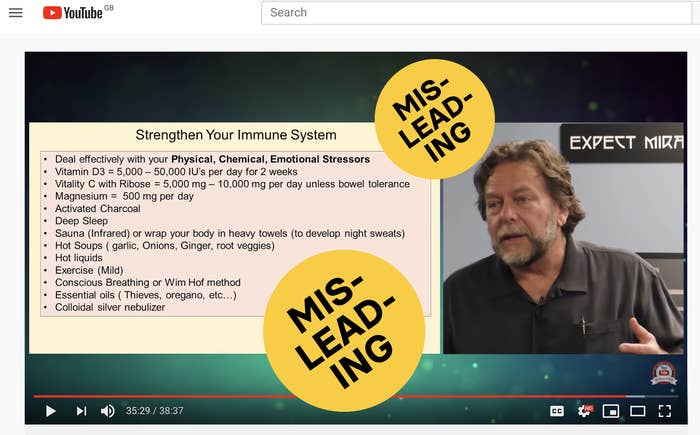

A YouTube account belonging to an American chiropractor called John Bergman has produced a series of videos on COVID-19 amassing more than a million views.

Bergman advocates using “essential oils” and vitamin C to treat the disease, against medical advice.

In another video, he falsely claims that hand sanitiser causes “hormone disorders, cancer, heart disease, and diabetes … weakens the immune system, plus it doesn’t work”.

This directly contradicts the principal medical advice from the UK's National Health Service and the US Centers for Disease Control and Prevention, which urge people to wash their hands regularly and use hand sanitiser if soap and water is not available.

Bergman did not respond to a request for comment from BuzzFeed News.

Three videos promoting the conspiracy theory that the symptoms of COVID-19, the disease caused by the coronavirus, are caused by 5G mobile signals have been viewed over 420,000 times.

One video, titled “Coronavirus Caused By 5G?”, was produced by an anti-vaccination group active on YouTube since 2006. It has been viewed nearly 300,000 times.

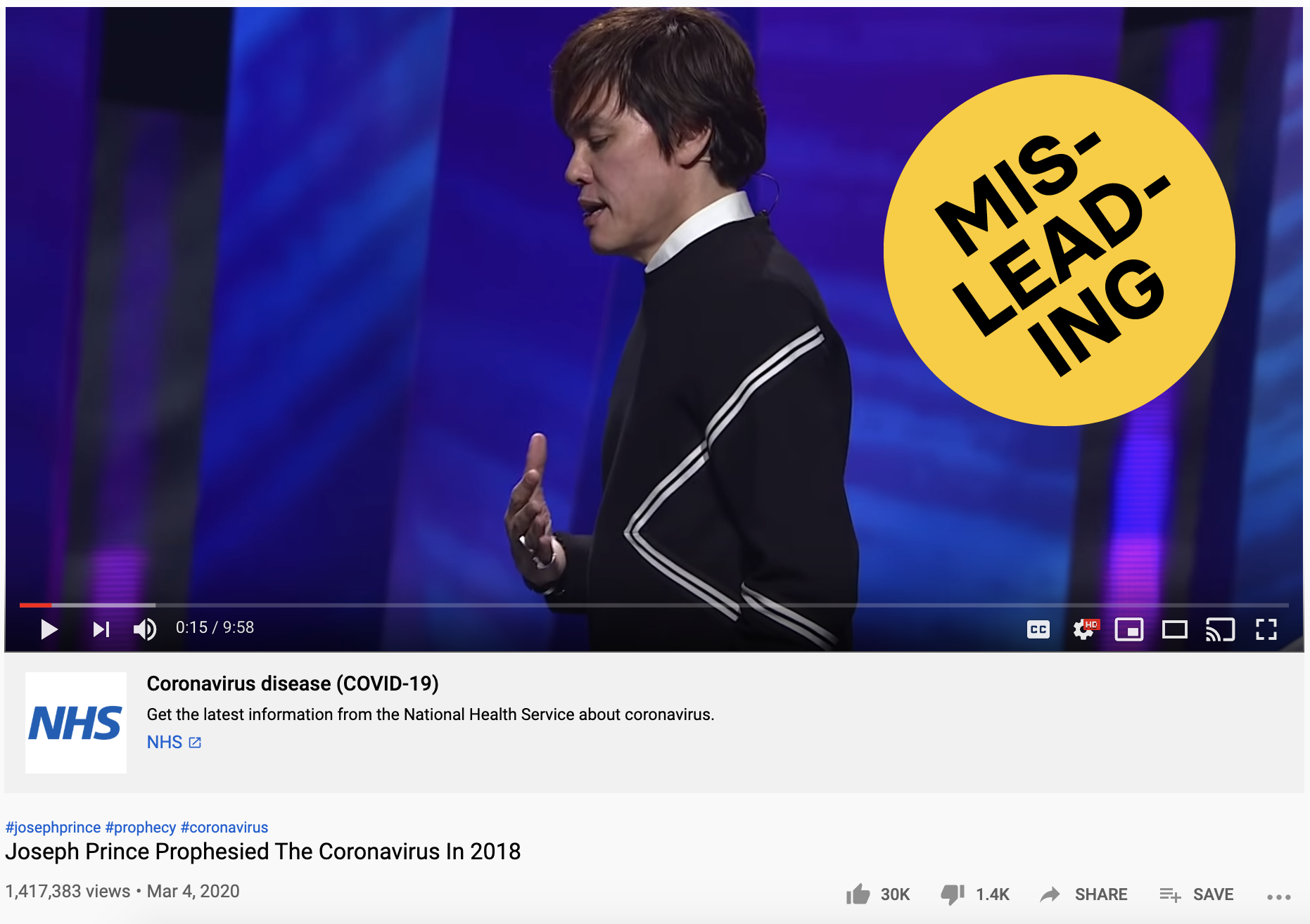

Fundamentalist religious YouTube channels are another major source of misinformation on the platform.

One of YouTube’s most popular videos about coronavirus, produced by a Christian preacher called Joseph Prince, claims the virus can be defeated by the power of prayer.

It includes a story suggesting the devil is encouraging people to quarantine, but that people won’t contract the virus if they break self-isolation to attend religious ceremonies.

The video has been viewed 1.4 million times. Prince did not respond to a request for comment.

YouTube videos produced by the notorious conspiracy theorist David Icke claim that COVID-19 was artificially created as part of a conspiracy to install an “Orwellian” government. His coronavirus videos have been viewed a total of 686,000 times.

Icke has also inserted adverts for a firm called “NoHypeInvest” in all of his videos on COVID-19, circumventing measures intended to prevent YouTube channels from profiting from the spread of COVID-19 misinformation.

Imran Ahmed, CEO of the Center for Countering Digital Hate, told BuzzFeed News: “It is morally unacceptable for YouTube to continue hosting content with dangerous false claims about COVID-19.

“When it comes to health misinformation, inaction has a cost in lives. If YouTube will not show the will to act on their own claims to be dealing with the routine use of their service to spread misinformation, the government needs to consider immediate action, including emergency legislation to allow them to prosecute executives, levy fines, and force them to fund counter-misinformation work.”

There is often a lot more misleading content on YouTube than on traditional social networks like Facebook and Twitter, said William Dance, a linguistics and disinformation expert at Lancaster University.

“One reason for this is because YouTube links can be shared and amplified on other platforms, making the reach they have much wider,” he told BuzzFeed News. “When it comes to health-related conspiracies and misinformation, YouTube is arguably the worst-affected platform.”

With so much coronavirus content being posted online and both our understanding of the virus and the situation on the ground changing so quickly, social media companies will be making extensive use of artificial intelligence rather than human moderation, according to Chloe Colliver of the Institute for Strategic Dialogue.

“We are sure to see more mistakes made that allow dangerous hoaxes and hateful conspiracy theories [to] slip through the net, or where legitimate reporting or health advice is removed,” she said.

Research shows that a large proportion of viewing time on YouTube is driven by recommendations rather than by users explicitly searching for videos like these.

Dance said this can mean “users find themselves in an echo chamber propped up by an algorithm that confirms and validates their conspiratorial beliefs”.

“Platforms are not only enabling the spread of false and dangerously misleading information, but enabling bad actors to profit from such content and activity,” said Colliver.

“It is extremely difficult for researchers to understand the potential danger and impact of disinformation on YouTube, due to the lack of transparency and data access afforded by the platform.”

A YouTube spokesperson told BuzzFeed News: “At this critical time, we’re working closely with health authorities to ensure users see the most authoritative information from WHO and NHS through information panels before they see anything else.

“We understand information is one of the most powerful tools in combating coronavirus and we are working to raise trustworthy information and combat misinformation.

“We also have clear policies that prohibit videos promoting medically unsubstantiated methods to prevent the coronavirus in place of seeking medical treatment, and we quickly remove videos violating these policies when we become aware of them.”