Facebook, Twitter, and other social media sites should be fined if they fail to remove illegal content within a given timeframe, a committee of MPs has said.

In a highly critical report, the home affairs committee said social media companies were "shamefully far" from tackling dangerous and hateful content.

The committee is calling on the government to bring in new laws to penalise companies that fail to act, and wants the sites to contribute financially towards the cost of policing their platforms, comparing the situation to a football club that pays for policing at its stadium on match days.

In their report, MPs on the committee said it was "completely irresponsible" of some of the biggest social media companies not to be more proactive in tackling hate speech and other dangerous or illegal content online, given their size, resources, and technology.

MPs found repeated evidence of illegal material not being removed after it had been reported. This included terror recruitment videos on Facebook from jihadi and neo-Nazi groups (even after the committee itself had reported them), anti-Semitic attacks made against MPs, material encouraging child abuse, and sexual images of children.

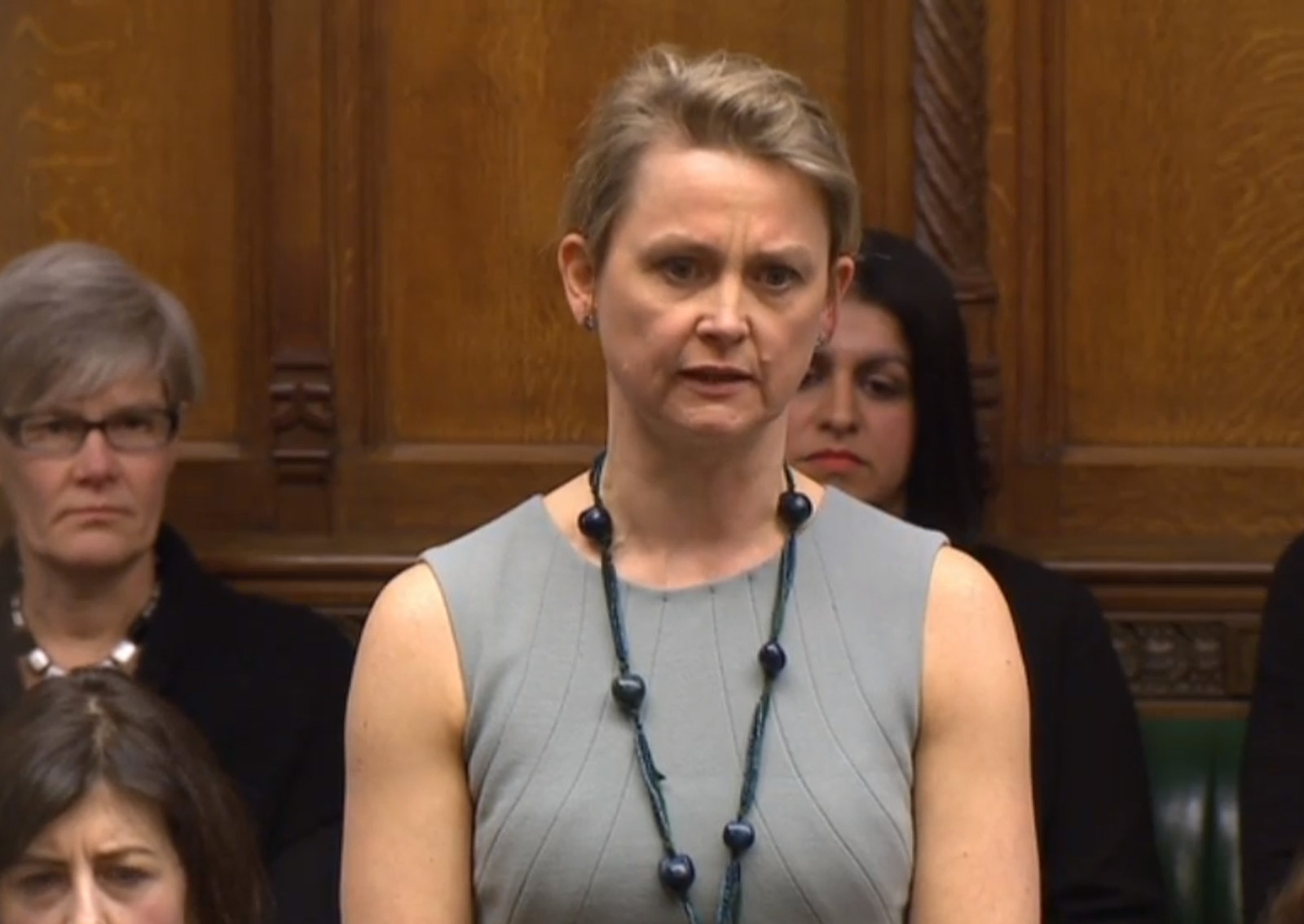

Committee chair Yvette Cooper, the former shadow home secretary, said the failure of sites such as Facebook and Twitter to deal with dangerous material online was a "disgrace".

“They have been asked repeatedly to come up with better systems to remove illegal material such as terrorist recruitment or online child abuse,” she said. “Yet repeatedly they have failed to do so. It is shameful. These are among the biggest, richest and cleverest companies in the world, and their services have become a crucial part of people's lives. This isn't beyond them to solve, yet they are failing to do so.”

Cooper said social media sites were operating as "platforms for hatred and extremism", but were not even taking "basic steps" to quickly stop illegal material being posted or shared.

She added: “The government should also review the law and its enforcement to ensure it is fit for purpose for the 21st century. No longer can we afford to turn a blind eye.”

The original scope of the home affairs committee's inquiry covered wider issues of hate crime, not only those online, but the committee was unable to complete its full remit because the general election had been called and parliament was about to be dissolved. The committee said it hoped its successor would return to the topic in the next parliament.

When contacted for comment, a spokesperson for Twitter pointed to the company's latest transparency report that said 74% of terrorist accounts were removed through Twitter's own technology, while 2% of accounts were reported by police.

Between the beginning of August 2015 and the end of 2016, Twitter said it had suspended 636,248 accounts for promoting terrorism.

Simon Milner, Facebook's director of policy, said in a statement: “Nothing is more important to us than people's safety on Facebook. That is why we have quick and easy ways for people to report content, so that we can review, and if necessary remove, it from our platform.

“We agree with the committee that there is more we can do to disrupt people wanting to spread hate and extremism online. That’s why we are working closely with partners, including experts at King's College London and at the Institute for Strategic Dialogue, to help us improve the effectiveness of our approach. We look forward to engaging with the new government and parliament on these important issues after the election.”