The Smarter Lunchrooms Movement, a $22 million federally funded program that pushes healthy-eating strategies in almost 30,000 schools, is partly based on studies that contained flawed — or even missing — data.

The main scientist behind the work, Cornell University professor Brian Wansink, has made headlines for his research into the psychology of eating. His experiments have found, for example, that women who put cereal on their kitchen counters weigh more than those who don’t, and that people will pour more wine if they’re holding the glass than if it's sitting on a table. Over the past two decades he’s written two popular books and more than 100 research papers, and enjoyed widespread media coverage (including on BuzzFeed).

Yet over the past year, Wansink and his “Food and Brand Lab” have come under fire from scientists and statisticians who’ve spotted all sorts of red flags — including data inconsistencies, mathematical impossibilities, errors, duplications, exaggerations, eyebrow-raising interpretations, and instances of self-plagiarism — in 50 of his studies.

Journals have so far retracted three of these papers and corrected at least seven. Now, emails obtained by BuzzFeed News through public information requests reveal for the first time that Wansink and his Cornell colleague David Just are also in the process of correcting yet another study, “Attractive names sustain increased vegetable intake in schools,” published in Preventive Medicine in 2012.

The most recent retraction — a rare move typically seen as a black mark on a scientist’s reputation — happened last Thursday, when JAMA Pediatrics pulled a similar study, also from 2012, titled “Can branding improve school lunches?”

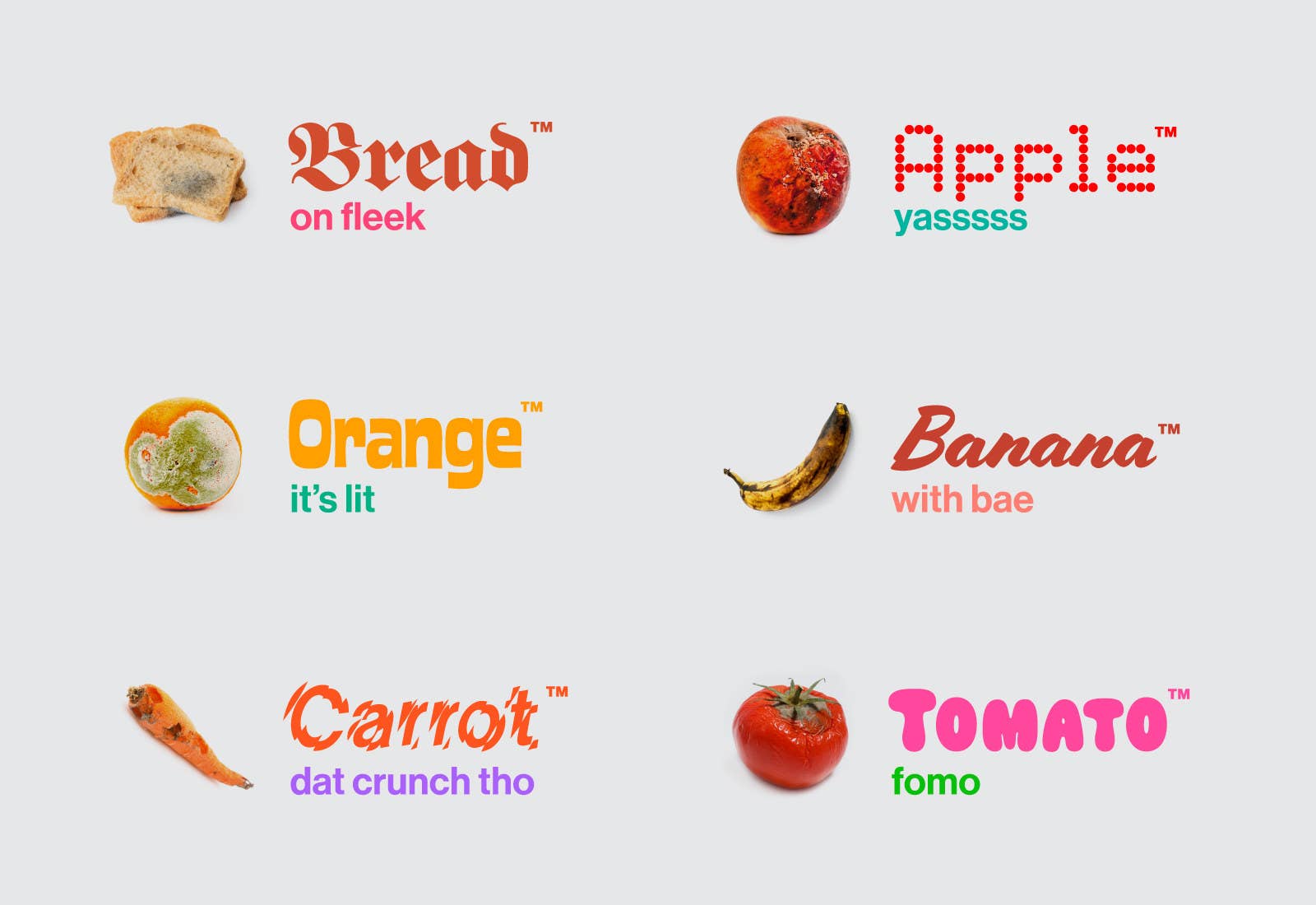

Both studies claimed that children are more likely to choose fruits and vegetables when they’re jazzed up, such as when carrots are called “X-Ray Vision Carrots” and when apples have Sesame Street stickers. The underlying theory is that fun, descriptive branding will not only make an eater more aware of the food, but will “also raise one’s taste expectations,” as the scientists explained in one of the papers.

The studies have been cited more than 75 times by others, according to Web of Science, and were funded by a grant of nearly $99,000 from the Robert Wood Johnson Foundation’s Healthy Eating Research program. The foundation told BuzzFeed News it hasn’t awarded him any grants since then.

The two studies have also been touted as evidence for the Smarter Lunchrooms Movement, cofounded by Wansink and Just in 2010. It promotes “simple evidence-based strategies” to encourage students to make healthy choices, participate in federally subsidized lunch programs, and waste less food. The USDA has funded $8.4 million in research grants related to the program to date, according to an agency spokesperson. Since 2014, it’s also awarded nearly $14 million in training grants. Almost 30,000 schools have adopted those techniques, and the government pays each one up to $2,000 for doing so. (The program says it’s also funded by Target and Wansink’s Cornell lab.)

One of the program’s recommendations is that school cafeterias feature a fruit or vegetable of the day and label it with “a creative, descriptive name.” Suggestions include “orange squeezers,” “monkey phones (bananas),” “snappy apples,” “cool-as-a-cucumber slices,” and “sweetie pie sweet potatoes.” Branding food in this way “can increase consumption by over 30%,” according to the program’s website. As proof, the Smarter Lunchrooms Movement cites the JAMA Pediatrics and Preventive Medicine studies, among others.

The USDA told BuzzFeed News that it has been talking to Cornell’s Center for Behavioral Economics in Child Nutrition Programs (the BEN Center), which administers the Smarter Lunchrooms Movement, about some of the allegedly flawed research. The agency “believes that scientific integrity is important, and that program and policy decisions should be based on strong evidence,” wrote USDA spokesperson Amanda Heitkamp.

“We have discussed these concerns with the BEN Center, and they have plans to address them in consultation with the Cornell University Office of Research Integrity and Assurance,” Heitkamp said. But the evidence behind the Smarter Lunchrooms Movement, she noted, comes from other work as well. “Smarter Lunchroom strategies are based upon widely researched principles of behavioral economics, as well as a strong body of practice that supports their ongoing use.”

A spokesperson for the Smarter Lunchrooms Movement echoed this sentiment, pointing to studies done by non-Cornell researchers that support the program’s strategies.

Cornell and Wansink did not return requests for comment, and Just declined to speak with BuzzFeed News. In previous public comments, Wansink dismissed some of the errors as minor and inconsequential to the studies’ overall conclusions. He also claimed that his studies have been replicated by other researchers. “One reason some of these findings are cited so much is because other researchers find the same types of results,” he told Retraction Watch in February. In March, he told the Chronicle of Higher Education that field studies should be taken with a grain of salt, as opposed to research done in a controlled setting like a laboratory. “Science is messy in a lot of ways,” he said.

But his critics take these problems very seriously, pointing out how rapidly his research has been adopted into the real world.

“It’s not sufficient evidence to roll out interventions in thousands of schools, in my opinion,” said Eric Robinson, a behavioral scientist at the University of Liverpool, who has found that several of Wansink’s studies cited by the Smarter Lunchrooms Movement made the strategies sound more effective than the data showed.

Others are disappointed that Wansink has, by and large, failed to adequately address most of the alleged mistakes — particularly when the entire field of psychological research is being dissected for studies that fail to hold up in repeat experiments.

Tim van der Zee, a graduate student at Leiden University in the Netherlands and one of the first researchers to spot errors in Wansink’s work, said that aside from correcting and sharing data for a handful of the challenged papers, the professor, his coauthors, and Cornell “remain inexplicably hidden in silence.”

“One of the fundamental principles of the scientific method is transparency — to conduct research in a way that can be assessed, verified, and reproduced,” he told BuzzFeed News. “This is not optional — it is imperative.”

Wansink began drawing scrutiny last November when, in a now-deleted blog post, he praised a grad student for taking the data from a “failed study” of an all-you-can-eat Italian lunch buffet and reanalyzing it multiple times until she came up with interesting results. These findings — that, for example, men overeat when women are around — eventually resulted in a series of published studies about pizza consumption.

To outside scientists, it reeked of statistical manipulation — that the data had been sliced and diced so much that the results were just false positives. It’s a problem that has cropped up again and again in social science research, and that a growing number of scientists are trying to address by replicating studies and calling out errors on social media.

Over the winter, van der Zee saw that Wansink’s blog post was accruing dozens of disapproving comments. He teamed up with two other scientists who were similarly intrigued: Nicholas Brown, also a graduate student in the Netherlands, and Jordan Anaya, a computational biologist in Virginia. At first they exchanged emails with Wansink about apparent errors in four of the pizza papers, van der Zee said. But when Wansink stopped replying to them, they decided to go public with the 150 errors they’d found in the four papers. Then Andrew Gelman, a statistician at Columbia University, accused Wansink’s lab of manipulating the data — or using “junk science,” in his words — to dress up their conclusions.

These critiques soon captured journalists’ attention. In early February, Retraction Watch interviewed Wansink about his disputed work, and New York magazine declared that “A Popular Diet-Science Lab Has Been Publishing Really Shoddy Research.”

The next day, Wansink wrote an email to more than 40 friends and collaborators with the subject line “Moving forward after Pizza Gate.” He called the barrage of criticism “cyber-bullying,” and seemed to dismiss the errors, explaining that most stemmed from “missing data, rounding errors, and [some numbers] being off by 1 or 2.”

He told the other scientists that the mistakes didn’t change the conclusions of the four papers, and sought to reassure them that they were on the right side of history.

“For a group of people who are so innovative, so hard-working, and who try so tirelessly to make the world healthier, this could be disheartening,” he wrote. “Fortunately, we have too many other great ideas and solutions that keep our eyes fixed on the horizon in front of us.”

The horizon, as it turned out, was darker than he anticipated, as shown in dozens of emails between Wansink and collaborators who work at public universities, obtained via records requests by BuzzFeed News.

After helping to dissect the pizza papers, Brown turned his sights on the now-retracted “Can branding improve school lunches?”

The study claimed that elementary school students were more likely to choose an apple instead of a cookie if the apple had an Elmo sticker on it. The takeaway: Popular brands and cartoons could successfully promote healthy fare over junk food.

In a blog post, Brown expressed concern about how the data had been crunched, and confusion about how exactly the experiment had worked. He noted that a bar graph looked much different in an earlier version. And, he pointed out, the scientists had said their findings could help “preliterate” children — which seemed odd, since the children in the study were ages 8 to 11.

In yet more scathing blog posts, Anaya and data scientist James Heathers pointed out mistakes and inconsistencies in the Preventive Medicine study, “Attractive names sustain increased vegetable intake in schools,” which claimed that elementary school students ate more carrots when the vegetables were dubbed “X-Ray Vision Carrots.”

Both papers were written by Wansink and Just, as well as Collin Payne, an associate professor of marketing at New Mexico State University. (Payne declined to comment.)

Wansink wrote to his coauthors and a few others who had helped with the papers on Feb. 21: “Back here in Ithaca we’re busy with a bunch of crazy stuff.”

“One of the things we’re facing is people challenging some of our old papers,” Wansink wrote. “What our critics want to do is to show there [sic] are bogus so they can challenge all of the Smarter Lunchrooms policies.”

But there was a problem: He couldn’t find the data for either study. “We can’t seem to find them,” he wrote. “Any chance you have them in any files.”

The following week, Brown blogged about several papers in which Wansink appeared to have plagiarized from his previous work, and New York magazine wrote about it. Wansink wrote an apologetic email to several deans at Cornell, trying to explain the “newest saga.” He admitted that there were duplications, but believed them all to be justified, saying at one point that certain paragraphs were “important enough to be repeated.”

“For someone who’s been a noncontroversial person for 56 years, this has been an upsetting month, and I’m ashamed of the difficulties it has given you, and our great Dyson School, College, and University,” Wansink wrote.

The drama was only beginning. On March 11, Robinson, the University of Liverpool scientist who has done similar research, wrote to Wansink with shockingly simple questions about some data. The number of children in the carrots paper, he pointed out, varied throughout the paper: Sometimes it was 147, other times 113 or 115. Plus, the number of carrots that the children put on their plates (17.1) didn’t equal the number they ate (11.3) plus the number they didn’t eat (6.7), as it should have; those two figures add up to 18.0.

Wansink responded: “As you know with your study about elementary school kids and carrots, not all end up in mouths or on the tray. Preschoolers are even worse. They spill them on the floor or they stick them in pockets. All the numbers seem to be within one baby carrot of each other. Still, if we can track this data down, we’ll be able to see if this was due to recording, rounding, or measurement.”

Robinson wasn’t satisfied. “The point the blog and then news coverage is getting at is that this looks very suspect,” he replied.

By early March, the accusations were starting to have tangible effects on Wansink’s career. He had been co-organizing the Transformative Consumer Research Conference, scheduled for June, about how consumer behaviors affect social justice issues. But on March 7, his co-organizer Brennan Davis, an associate marketing professor at California Polytechnic State University, had some bad news. (Davis declined to comment.)

“TCR leadership has asked me to ask you how you would feel about stepping away from the TCR conference while you are dealing with everything,” he explained over text. “I’m very sorry to have to put this question to you.”

“Sure,” Wansink texted back. “I totally understand.”

In an email to Davis a week later, after Davis again apologized for “the way things have gone down with the TCR conference,” Wansink thanked him and mentioned that the crisis had made him reflect on his life’s purpose.

“For about the past 20 years,” he wrote, “my morning mediation [sic] begins with ‘Help me make millions of people healthier, happier, and closer to God.’ That’s going to continue, but it might take a different path.”

Davis was sympathetic. “I think the field needs some ‘shelter’ from this criticism,” he responded that day. “Instead of using their skills for good, to help people do better work, rather many of [sic] used their skills to tear people down. And that is senseless to me.”

On March 21, van der Zee decided it was time to formally keep track of all the issues that he and others were finding. “The Wansink Dossier” mushroomed into a list of dozens of allegedly faulty papers.

Soon the editor of JAMA Pediatrics, Frederick Rivara, emailed Wansink with a link to Brown’s critique of the Elmo apples study. “Upon reflection, we share several of the concerns outlined in the blog post,” Rivara wrote. In a later email, he wrote, “There is substantial missing data.” (Rivara declined to comment.)

Wansink consulted a few colleagues, including a public relations director at Cornell, to figure out how to respond. “I think I should send him a note,” he wrote. “This is data that we can’t seem to find.”

So began a mad scramble, with the researchers combing computers and old emails and contacting ex-colleagues in search of the original data.

Meanwhile, the matter of the “pizza papers” remained unresolved. On April 5, Cornell University said that an internal investigation had found errors, but not misconduct, in the four papers.

In a subsequent statement of his own, Wansink pledged to reform research practices in his lab. He had developed procedures to prevent errors from happening and a system to catalog anonymized data so it could be shared with outsiders, he explained. And he apologized for the pizza papers, saying that he’d submitted corrections to the journals in which they’d appeared. He’d also discovered that portions of some of his older papers had been republished elsewhere, he said, and had informed six journals of these duplications.

As for the Elmo apples study, Wansink and his colleagues eventually chalked the problems up to some missing data and coding errors. They exchanged a few rounds of explanations and reanalyses with the JAMA Pediatrics editor, but it wasn’t enough.

“The errors in your article are pervasive,” Rivara, the editor, wrote on May 30. “Thus, we are proceeding with a plan to retract and replace your original publication with a corrected version.”

The next week, Wansink published a correction to yet another study — also referenced by the Smarter Lunchrooms Movement — that suggested that, in a restaurant setting, changing a dish’s name could affect how people thought it tasted.

And the carrots study, too, was in jeopardy. The editor of Preventive Medicine, Eduardo Franco, had contacted Wansink to say that “a reader” had written to the journal with concerns about the paper, linking to both Anaya’s and Heathers’ blog posts. (Franco did not respond to a request for comment.)

On June 13, Wansink sent Franco a correction, cc’ing Just and Payne, among other collaborators. It was, once again, the result of weeks of hustling to track down and reanalyze the original data. Numbers had been missing and statistical calculations off, Wansink wrote, but most importantly, “The reanalysis shows the results are strong and make the same conclusion.” (He would later note that Stanford University researchers arrived at a similar finding in a study of their own.)

Hours later, Payne privately replied to Wansink and Just. “This is very impressive--and a relief!”

A month later, Franco replied to inform them that the correction would appear in a future issue of the journal.

In a tumultuous year, it was relatively good news.

“I am thankful this morning that this one is not a retraction and replacement,” Payne wrote to Wansink. “You and your team do good work Brian.”

The team appears to now be looking proactively for past missteps. On June 30, Wansink emailed several collaborators to say that they should take a second look at a batch of papers about World War II veterans. One of those studies found that those who’d experienced heavy trauma, rather than light trauma, seemed to be less loyal to brands and more drawn to low prices while shopping. After receiving criticisms about the underlying data — that, for instance, “many of the people seemed to be born way after the war,” as Wansink wrote — he said he’d realized that some of the data entries for other papers were duplicates or “mismatched.” He suggested that they contact the journals to tell them they were aware of the problems and were going to reanalyze everything. Ideally, he told the group, they’d write a correction and “avoid the Veteran version of PizzaGate.”

In retracting their JAMA Pediatrics apples study last week, Wansink and his coauthors admitted to having incorrectly described the experiment’s design and number of students involved, used an “inadequate” data analysis method, and mislabeled the graph. But, they said, their conclusions remained intact.

Brown, however, has already spotted problems in the revised paper that went up in its place.