Reddit is purging Nazi, white supremacist, and other hate-based groups from its site as part of a new policy change announced Wednesday that targets and bans certain violent material.

The popular discussion site, which has struggled with how to monitor and remove vitriolic and offensive content in the past, said in a statement that it had decided to retool certain rules and regulations that were "too vague" and ban material that “encourages, glorifies, incites, or calls for violence or physical harm against an individual or a group of people.”

"We did this to alleviate user and moderator confusion about allowable content on the site. We also are making this update so that Reddit’s content policy better reflects our values as a company," Reddit told its nearly 542 million monthly users. "In particular, we found that the policy regarding 'inciting' violence was too vague, and so we have made an effort to adjust it to be more clear and comprehensive."

Here's the rest of the statement:

Going forward, we will take action against any content that encourages, glorifies, incites, or calls for violence or physical harm against an individual or a group of people; likewise, we will also take action against content that glorifies or encourages the abuse of animals. This applies to ALL content on Reddit, including memes, CSS/community styling, flair, subreddit names, and usernames.

We understand that enforcing this policy may often require subjective judgment, so all of the usual caveats apply with regard to content that is newsworthy, artistic, educational, satirical, etc, as mentioned in the policy. Context is key. The policy is posted in the help center here.

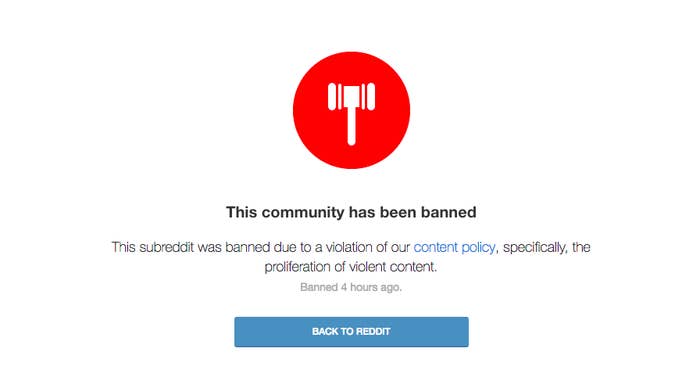

After the changes were published, users quickly pointed out that at least 10 subreddits pertaining to the alt-right and Nazism had been removed. Others, like r/racoonsareniggers and r/whitesarecriminals, were also deleted.

In a discussion with administrators, some users expressed confusion about the updated policy, and pushed moderators on why certain groups were removed and not others, such as the subreddit titled "watch people die" and comments in r/latestagecapitalism or r/fullcommunism "calling for sending a certain kind of people to a gulag or death."

Reddit administrator landoflobsters noted that many posts are borderline violent or hateful, and that "context is key." Hunting and BDSM communities, as well as news about violence and death, will "not be impacted by this policy," Reddit said.

When asked why the site, which gets 8 billion pageviews per month, decided to introduce this new policy now, a spokesperson told BuzzFeed News that "we strive to be a welcoming, open platform for all by trusting our users to maintain an environment that cultivates genuine conversation" and referred back to the company's original statement.

Policing and banning content is a controversial move for Reddit, which was created in 2005 as a platform for people to openly discuss and share whatever they wanted to without interference from site moderators. However, as its popularity exploded, so did subreddits that spewed violent, anti-Semitic, racist, and incendiary content.

Before he resigned in 2014, former Reddit CEO Yishan Wong vowed not to "ban questionable subreddits": "You choose what to post," he said. "You choose what to read. You choose what kind of subreddit to create."

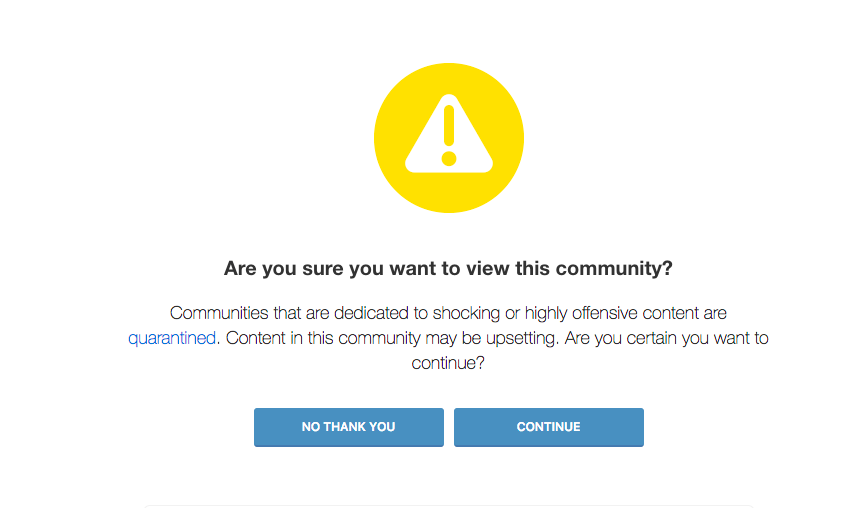

But shortly after Ellen Pao took over, the company cracked down on its toxic material and added new rules that would remove or quarantine subreddits deemed too inappropriate for the average user. During her tenure, the company was embroiled in controversy over free speech and the firing of one of its most popular employees. Pao, who was attacked, harassed, and threatened by users, left the company after just eight months.

As to why Reddit again increased its efforts to patrol its content after hate speech on the site dropped more than 80% following the 2015 regulations, Steve Huffman, one of the site's original founders and its current CEO, will be answering questions about the move on the site's announcements board next week.