By now it’s clear: Political actors trying to sway the 2016 elections very likely tried to influence people, in part, by using fake, deceptive bot accounts on social media, including Twitter.

The company recently handed the House and Senate intelligence committees data on 200 accounts that Russian political actors may have used to meddle with people’s perceptions of divisive issues during the presidential campaign. Twitter also released a statement on bots and manipulation networks on its platform, and its leaders will likely appear before a congressional hearing. Polarizing or fake information shared on US social platforms, sometimes with the help of bots, is becoming part of the fabric of online life as the companies struggle to address it.

So what is a bot on Twitter? In the strictest sense of the term, it’s any account that’s automated, meaning it is controlled by a computer script rather than a person. Many bots are benign or even useful: think of poetry bot accounts that will tweet you haikus or bots that alert people about earthquakes. But they can be problematic when a person or a group uses a large number of them to influence political conversations or to spread misinformation.

“[Bots] have the potential to seriously distort any debate,” said Atlantic Council fellow Ben Nimmo, who has researched bot armies for years and who wrote a guide to spotting bots for the Digital Forensics Research Lab (DFRL). “They can make a group of six people look like a group of 46,000 people.”

There’s no surefire way to spot whether an account is a bot. But there are a number of different ways to look at Twitter accounts — mostly to determine whether their behavior resembles that of a human — that may help us gain a better understanding of the universe of automated user accounts. In short, bots tend to tweet more and in bursts, have automated or copied features in their profiles, and retweet a lot.

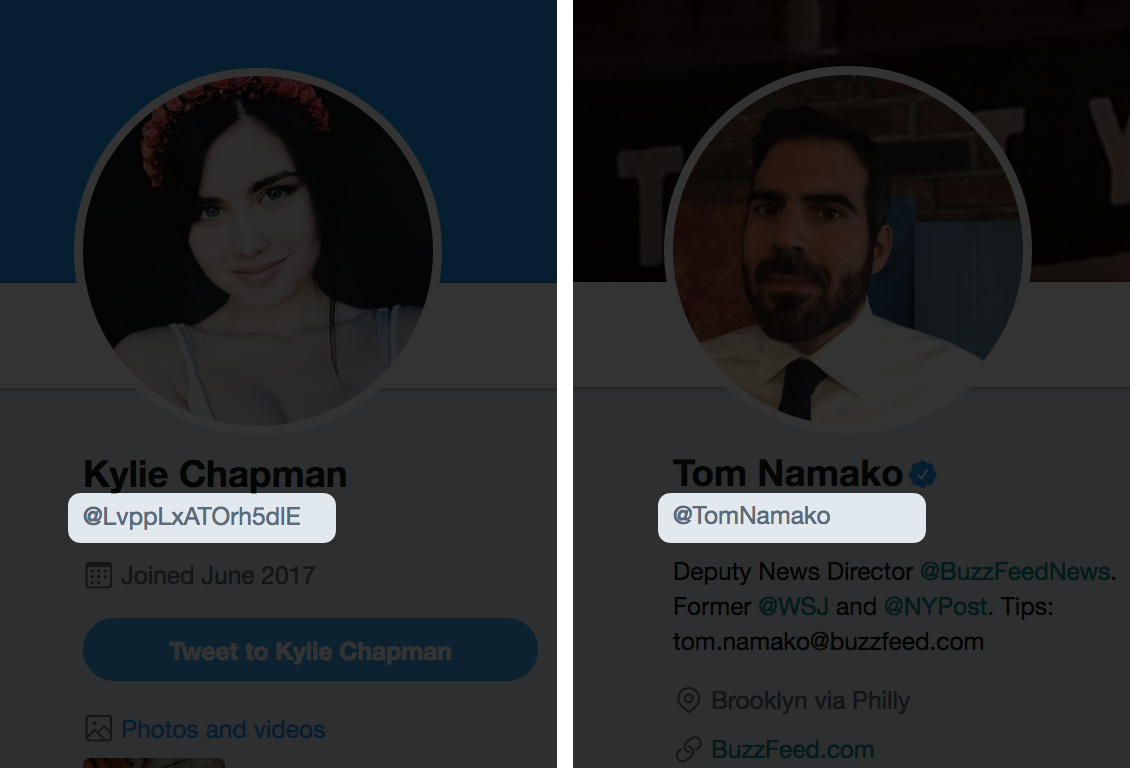

BuzzFeed News compared the Twitter data of one of its own human editors with that of several accounts showing bot-like activity to highlight the differences in their personas and behavior.

BuzzFeed News looked at the Twitter data from Tom Namako, an editor on the Breaking News team with a very high — near bot-level! (scary, Tom) — level of activity and data from Twitter handles identified as automated accounts by the DFRL. (We also chose Tom to avoid using accounts that may seem overtly political, since the conversation around bots is, now, just that.)

Bots act in weird, erratic ways, but one telling indicator is the number of tweets an account posts.

According to the DFRL, tweeting 72 times a day is suspicious, and more than 144 tweets per day seems very suspicious.

The Twitter account @sunneversets100, which the DFRL identified as a bot, has been on Twitter since Nov. 11, 2016, and tweeted 202,763 times. This averages out to 630 tweets per day.
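The DFRL rule of thumb and the average above can be sketched in a few lines of Python. This is only an illustration, not the DFRL's or BuzzFeed News' actual tooling: the function names are hypothetical, and the as-of date of Sept. 29, 2017 is an assumption, since the article does not say on which day the tweet count was taken.

```python
from datetime import date

# Thresholds quoted from the DFRL guidance above.
SUSPICIOUS_PER_DAY = 72
VERY_SUSPICIOUS_PER_DAY = 144


def average_tweets_per_day(total_tweets: int, joined: date, as_of: date) -> float:
    """Average daily tweet count over the account's lifetime."""
    days_active = (as_of - joined).days
    if days_active <= 0:
        raise ValueError("as_of must be later than joined")
    return total_tweets / days_active


def suspicion_level(tweets_per_day: float) -> str:
    """Map a daily tweet rate onto the DFRL rule of thumb."""
    if tweets_per_day > VERY_SUSPICIOUS_PER_DAY:
        return "very suspicious"
    if tweets_per_day >= SUSPICIOUS_PER_DAY:
        return "suspicious"
    return "unremarkable"


# Tweet total and join date come from the article.
# as_of is an ASSUMED date, not one the article gives.
rate = average_tweets_per_day(
    202_763, joined=date(2016, 11, 11), as_of=date(2017, 9, 29)
)
print(round(rate), suspicion_level(rate))
```

With that assumed date the account is 322 days old, which gives a rate of about 630 tweets per day, far above the 144-per-day mark. A lifetime average hides bursts and quiet spells, so it is best read alongside the day-by-day picture.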

A BuzzFeed News analysis of the account’s last 2,955 tweets — the maximum tweets made available through Twitter — shows that the last time the account was active it was routinely blasting out a suspicious number of tweets, hitting 584 in one day. Then, it seems to have stopped in May of this year:

Note: Chart represents the last 2,955 tweets over 76 days.

Source: Twitter

@tomnamako’s last 2,955 tweets look more like this:

Note: Chart represents the last 2,955 tweets over 76 days.

Source: Twitter

Both charts cover the same number of tweets, but the bot’s tweets are concentrated in short bursts of time.

On April 14, when the bot tweeted more than 580 times, it posted 242 times over the course of an hour, which averages to a tweet roughly every 15 seconds. On his busiest day, Namako tweeted 90 times.
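For readers who want to look for this kind of burst in their own data, here is a minimal sketch. It assumes you already have each tweet's timestamp as a Python `datetime`; the function names and the sample data are made up for illustration, and the code groups tweets by clock hour, which is a simplification of "over the course of an hour."

```python
from collections import Counter
from datetime import datetime, timedelta


def busiest_hour(timestamps: list[datetime]) -> tuple[datetime, int]:
    """Return the start of the clock hour with the most tweets, and that count."""
    if not timestamps:
        raise ValueError("no timestamps given")
    per_hour = Counter(
        ts.replace(minute=0, second=0, microsecond=0) for ts in timestamps
    )
    hour, count = per_hour.most_common(1)[0]
    return hour, count


def seconds_per_tweet(count: int, window_seconds: int = 3600) -> float:
    """Average gap between tweets when `count` tweets fall in one window."""
    return window_seconds / count


# Made-up sample: one tweet every 30 seconds for an hour, plus two stragglers.
start = datetime(2017, 1, 1, 12, 0, 0)
sample = [start + timedelta(seconds=30 * i) for i in range(120)]
sample += [datetime(2017, 1, 1, 15, 5), datetime(2017, 1, 1, 18, 40)]

hour, count = busiest_hour(sample)
print(hour, count, seconds_per_tweet(count))
```

A sliding 60-minute window would catch bursts that straddle two clock hours, at the cost of slightly more code.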

Here’s what that looks and sounds like for the bot, @sunneversets100, on its busiest hour on its busiest day. The size of the circle represents the number of tweets during each point in time. The majority of circles represent one tweet. There were several instances, however, when @sunneversets100 tweeted twice in one second:

Source: Twitter

Here’s the @tomnamako version of his busiest hour of his busiest day:

Source: Twitter

Because a bot is not a person, some of its profile information may give it away.

Another good indicator of authenticity is an account's personal information: this can include anything from identifying information like a ‘real sounding’ Twitter handle...

...to profile photos (a lot of automated accounts use the silhouette, the new “egg” that Twitter assigns as the default avatar, as their profile image).

Slightly more sophisticated bots will feature photos stolen from the web like this one:

For instance, a simple Google reverse image search reveals that this image, taken from another DFRL-identified bot, has been used at least four times by other Twitter accounts.

Some bots exist to boost other people’s tweets — so look at what an account is posting.

A closer analysis of @sunneversets100 reveals that 99% of the 2,955 tweets BuzzFeed News looked at were not original, but rather retweets. For Namako, this rate is just above 40%.
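A retweet rate like this is simple to estimate once you have an account's tweets as text. The sketch below is an assumption-laden illustration, not the method BuzzFeed News used: it treats any tweet whose text starts with "RT @" as a retweet, which misses quote tweets and would miscount anyone who types that prefix by hand. The function name and sample tweets are hypothetical.

```python
def retweet_share(tweet_texts: list[str]) -> float:
    """Fraction of tweets whose text starts with the "RT @" retweet prefix."""
    if not tweet_texts:
        return 0.0
    retweets = sum(1 for text in tweet_texts if text.startswith("RT @"))
    return retweets / len(tweet_texts)


# Made-up sample tweets: three retweets and one original.
sample = [
    "RT @example_news: Headline one",
    "RT @example_news: Headline two",
    "RT @another_account: Something else",
    "An original thought",
]

share = retweet_share(sample)
print(f"{share:.0%}")
```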

@sunneversets100 mentioned more than 2,000 unique Twitter handles in its tweets, while the top 20 handles consisted largely of foreign news outlets and individual writers. The most mentioned news organizations were @sputnikint, a state-funded Russian news outlet (265 times), followed by the Chinese state-run news organization @xhnews (125 times), Turkish news outlet @yenisafaken, a hardline conservative site loyal to President Recep Tayyip Erdoğan (60 times), and news channel @alarabiya_eng (50 times):

Source: Twitter

@tomnamako has mentioned more than 650 unique handles in his tweets and largely mentions reporters. Eighteen of @tomnamako’s top 20 most mentioned handles were reporters, editors and other accounts affiliated with BuzzFeed News. The other two were a New York Times reporter and President Donald Trump:

Source: Twitter
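Tallies of mentioned handles like the ones above can be approximated with a regular expression and a counter. This is a rough sketch under stated assumptions rather than the analysis behind the charts: the pattern, the function name, and the sample tweets are all hypothetical, and handles are lowercased so that @Example_News and @example_news count as the same account.

```python
import re
from collections import Counter

# An "@" that is not preceded by a word character, so email addresses are skipped.
MENTION = re.compile(r"(?<!\w)@(\w+)")


def mention_counts(tweet_texts: list[str]) -> Counter:
    """Count how often each handle is mentioned across a list of tweets."""
    counts: Counter = Counter()
    for text in tweet_texts:
        counts.update(handle.lower() for handle in MENTION.findall(text))
    return counts


# Made-up sample tweets.
sample = [
    "RT @example_news: Headline one",
    "RT @Example_News: Headline two, via @some_writer",
    "Email me at name@example.com",
    "Thanks @some_writer and @third_account",
]

counts = mention_counts(sample)
print(len(counts), counts.most_common(2))
```

Here `len(counts)` gives the number of unique handles and `most_common(20)` would give a top-20 list of the kind described above.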

Over the years, bots have become more sophisticated. That’s partly because Twitter has found more ways to clamp down on them and partly because some of the technology behind them has improved.

“In 2010, we found a bunch of bots were promoting fake news. It was a political operative who was running” a web site and 10 bots, said Filippo Menczer, a professor at the Indiana University Network Science Institute who has studied bots for years and co-developed Botometer, a tool that measures hundreds of features to determine whether an account may be a bot or not.

The bots, he said, would tweet and link to a fake article and would mention influential users, trying to get those users to spread the articles. The bot operators “knew that Twitter had [automated] mechanisms in place to boot some bots” based on the types of links they posted. So to get around it they’d add random characters to their links to obfuscate them, he said, and “Twitter wouldn’t detect it.”

Today, he said, “if you wanna write a bot that doesn’t get caught you gotta be better than that.”

(None of the accounts identified by DFRL as bots responded to requests for comment. Twitter also didn’t respond to a request for comment.)

There are bots that are programmed to tweet below a specific frequency that Twitter may consider spam-like activity. There are bots, known as cyborgs, that are partly operated by real people to throw off algorithms trying to crack down on them. Accounts that were created years ago and have lain dormant may be taken over by automated scripts and repurposed for commercial or political message boosting.

Bots now come in all shapes and forms, may have a lifespan of years or just minutes, and act alone or in unison with as many as 100,000 others, said Nimmo from the DFRL.

“Is it legitimate to pose as someone else, to use someone else’s photo, to automate posting? At what point does it become illegitimate to automate posting?” said Nimmo. “[Social media companies] need to break down the bot question into its component parts. What they need to do is put out publicly how they answer those questions.”