Earlier this month, after a public outcry over disturbing and potentially exploitative YouTube content involving children, CEO Susan Wojcicki said the company would increase its number of human moderators to more than 10,000 in 2018, in an attempt to rein in unsavory content on the web’s biggest video platform.

But guidelines and screenshots obtained by BuzzFeed News, as well as interviews with 10 current and former “raters” — contract workers who train YouTube’s search algorithms — offer insight into the flaws in YouTube’s system. These documents and interviews reveal a confusing and sometimes contradictory set of guidelines, according to raters, that asks them to promote “high quality” videos based largely on production values, even when the content is disturbing. This not only allows thousands of potentially exploitative kids videos to remain online, but could also be algorithmically amplifying their reach.

Raters told BuzzFeed News that in the past 10 days or so, they were assigned over 100 tasks asking them to make granular assessments about whether YouTube videos aimed at children were safe. “Yesterday alone, I did 50-plus tasks on YouTube regarding kids — about seven hours’ worth,” said one rater, who requested anonymity because they were not authorized to speak with BuzzFeed News about this work.

“As a parent, what upsets me a lot is that these videos aimed at kids are not really for kids,” the rater continued. “Content creators make these cartoons with fake versions of characters kids like, such as Paw Patrol, and then as you watch, they start using foul language, making sex jokes, hurting each other, and more. As so many children watch YouTube unsupervised, that kind of thing can be really scarring.”

The URL to a video that should be considered "Not OK," according to recent task instructions for search raters evaluating videos on YouTube. "Many visually OK cartoons should be rated 'Not OK' for language, like this Peppa Pig video," the task instructions say.

The contractors who spoke to BuzzFeed News are search quality raters, who help train Google’s systems to surface the best search results for queries. Google uses a combination of algorithms and human reviewers like these raters to analyze content across its vast suite of products. “We use search raters to sample and evaluate the quality of search results on YouTube and ensure the most relevant videos are served across different search queries,” a company spokesperson said in an emailed statement to BuzzFeed News. “These raters, however, do not determine where content on YouTube is ranked in search results, whether content violates our community guidelines and is removed, age-restricted, or made ineligible for ads.”

YouTube says those content moderation responsibilities fall to other groups working across Google and YouTube. But according to Bart Selman, a Cornell University professor of artificial intelligence, although these reviewers do not directly determine what is allowed or not on YouTube, they still have considerable impact on the content users see. “Since the raters [make assessments about quality], they effectively change the ‘algorithmic reach’ of the videos,” he told BuzzFeed News.

“It is well-known that users rarely look beyond the first few search results and almost never look at the next page of search results,” Selman continued. “By giving a low rating to a video, a rater is effectively ‘blocking’ the video.”

“Think of it this way,” said Jana Eggers, CEO of the AI startup Nara Logics. “If a search result exists, but no one sees it, does it still exist? It's today's Schrödinger's cat. … [Ratings] impact the sort order, and that impacts how many people will see the video.”

YouTube did not respond to two follow-up inquiries about how a search rater’s evaluation of a video as highly relevant would affect its ranking. But according to task screenshots and a copy of the guidelines used to evaluate YouTube videos reviewed by BuzzFeed News, raters make direct assessments about the utility, quality, and appropriateness of videos. From time to time, they are also asked to determine whether videos are offensive, upsetting, or sexual in nature. And all these assessments, along with other input, build out the trove of data used by YouTube’s AI systems to do the same work, with and without human help.

Though YouTube said search raters don’t determine whether content violates its community guidelines, just this week, it assigned a task — a screenshot of which was provided to BuzzFeed News — asking raters to decide whether YouTube videos are suitable for 9- to 12-year-olds to watch on YouTube Kids unsupervised. “A video is OK if most parents of kids in the 9-12 age group would be comfortable exposing their children to it; otherwise it is NOT OK,” the instructions read. The raters are also instructed to classify why a video would be considered “Not OK”: if the video contained sexuality, violence, strong crude language, drugs, or imitation (that is, encouraging bad behavior, like dangerous pranks). The rater who provided the screenshot said they had not seen such a task before the recent wave of criticism against YouTube for child-exploitative content. They have been a rater for five years.

The rater also said the more specific examples for “OK” and “Not OK” that YouTube provided were unclear. For instance, according to the instructions reviewed by BuzzFeed News, an example of a violent video that is “Not OK” is Taylor Swift’s “Bad Blood” music video. Other examples that should be considered “Not OK” under the instructions include the cinnamon challenge (for imitation), and John Legend’s “All of Me” music video (considered “Not OK” under sexuality). But “light to moderate human or animal violence,” including contact sports, daily accidents, play fighting, moderate animal violence, and a “light display of blood or injury” are considered OK.

“I thought this rating made sense until I got into the examples,” the rater told BuzzFeed News. "I don't usually try to internalize the actual examples because they don't make sense and aren't consistent — I just decide if I want my child to see the video or not.”

Last month, one creator making money off disturbing kids videos told BuzzFeed News, “Honestly, the algorithm is the thing we had a relationship with since the beginning.” The raters’ guidelines that BuzzFeed News obtained offer insight into the data that trains YouTube’s algorithms, and how it might encourage the continued creation of such videos.

The guidelines are dated April 26, 2017, labeled version 1.2, and are 64 pages long. A rater told BuzzFeed News that they downloaded the file on Dec. 19, meaning it is in current use in spite of the months-old date on the document.

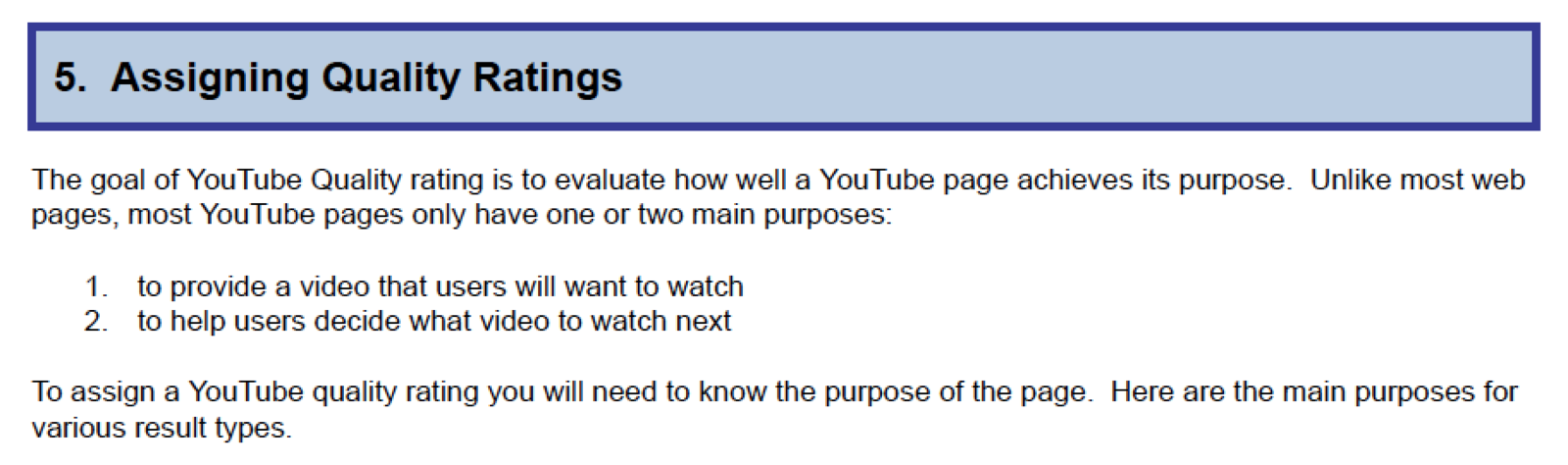

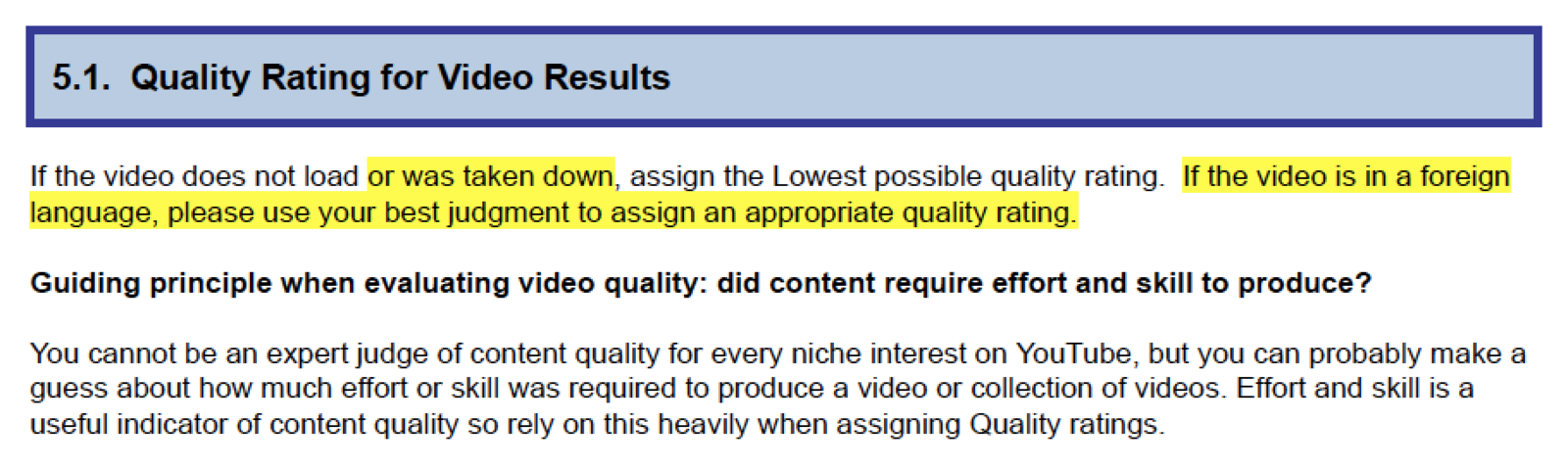

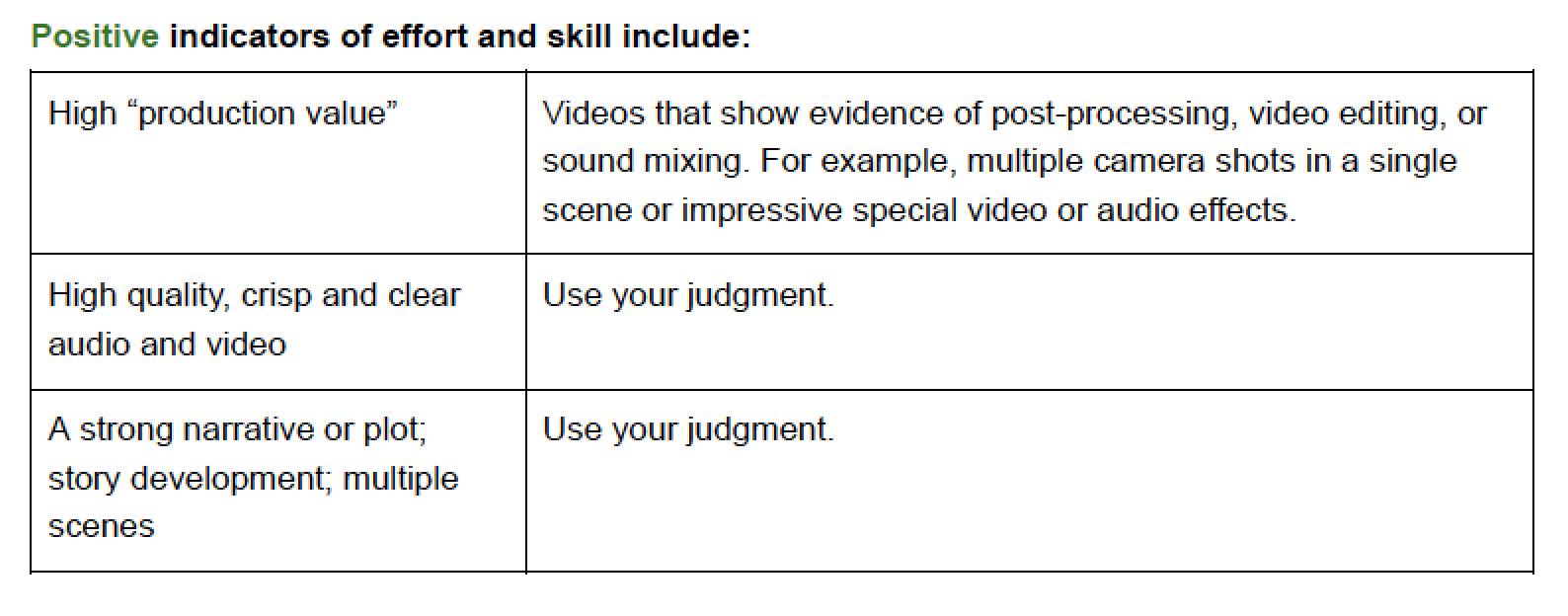

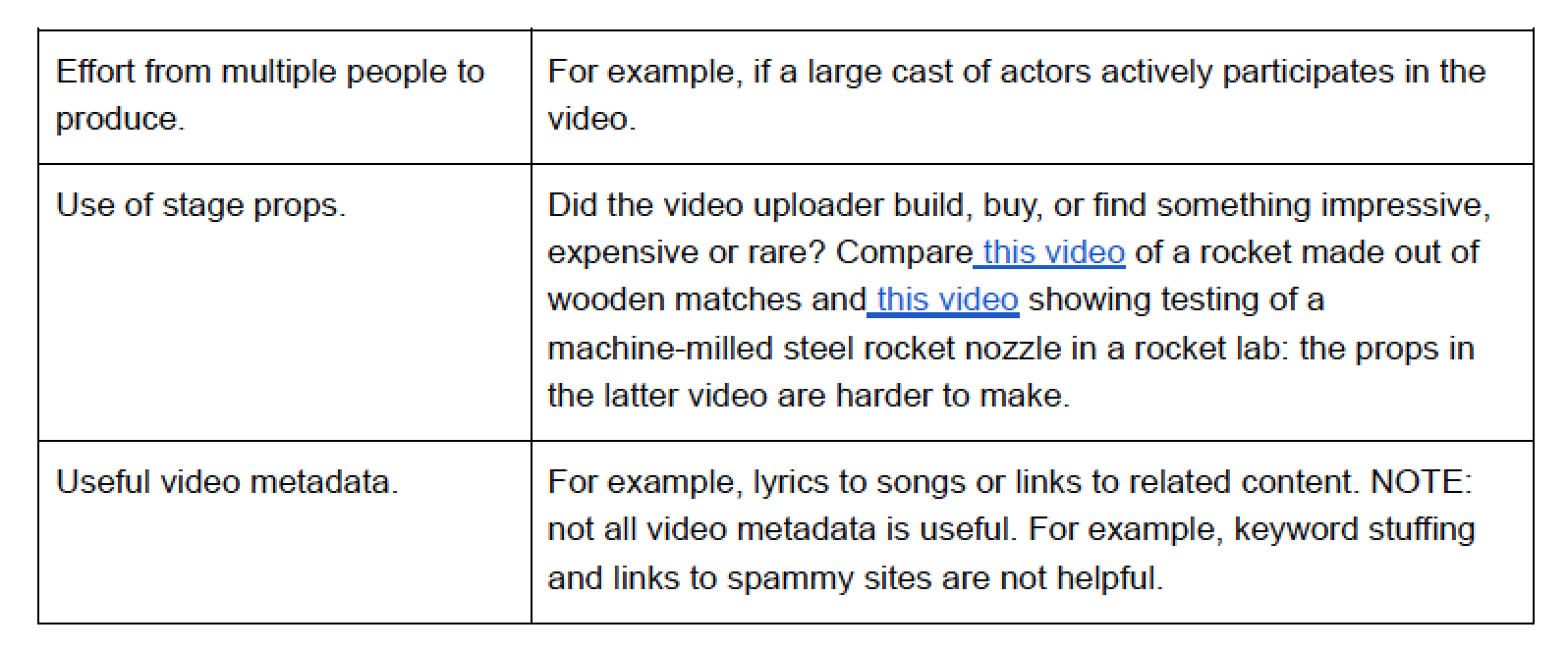

One section covers quality, or “how much effort or skill was required to produce a video or collection of videos,” according to the guidelines. When raters assign quality ratings, these are used “to provide a video that users will want to watch” and “help decide what video to watch next.”

Selman said this is how “quality ratings” could be used in an algorithmic system like YouTube’s. “The raters will have a significant impact on what users will get to see.”

The guidelines instruct raters to assign videos a high level of effort and skill if they have post-processing, video editing, or sound mixing — all familiar characteristics in the hundreds of thousands of questionable and exploitative kids videos uncovered on the platform so far. Often, creators of such “family-friendly” content, which in some cases earned tens of thousands of dollars a month, use original animation or their real kids as actors.

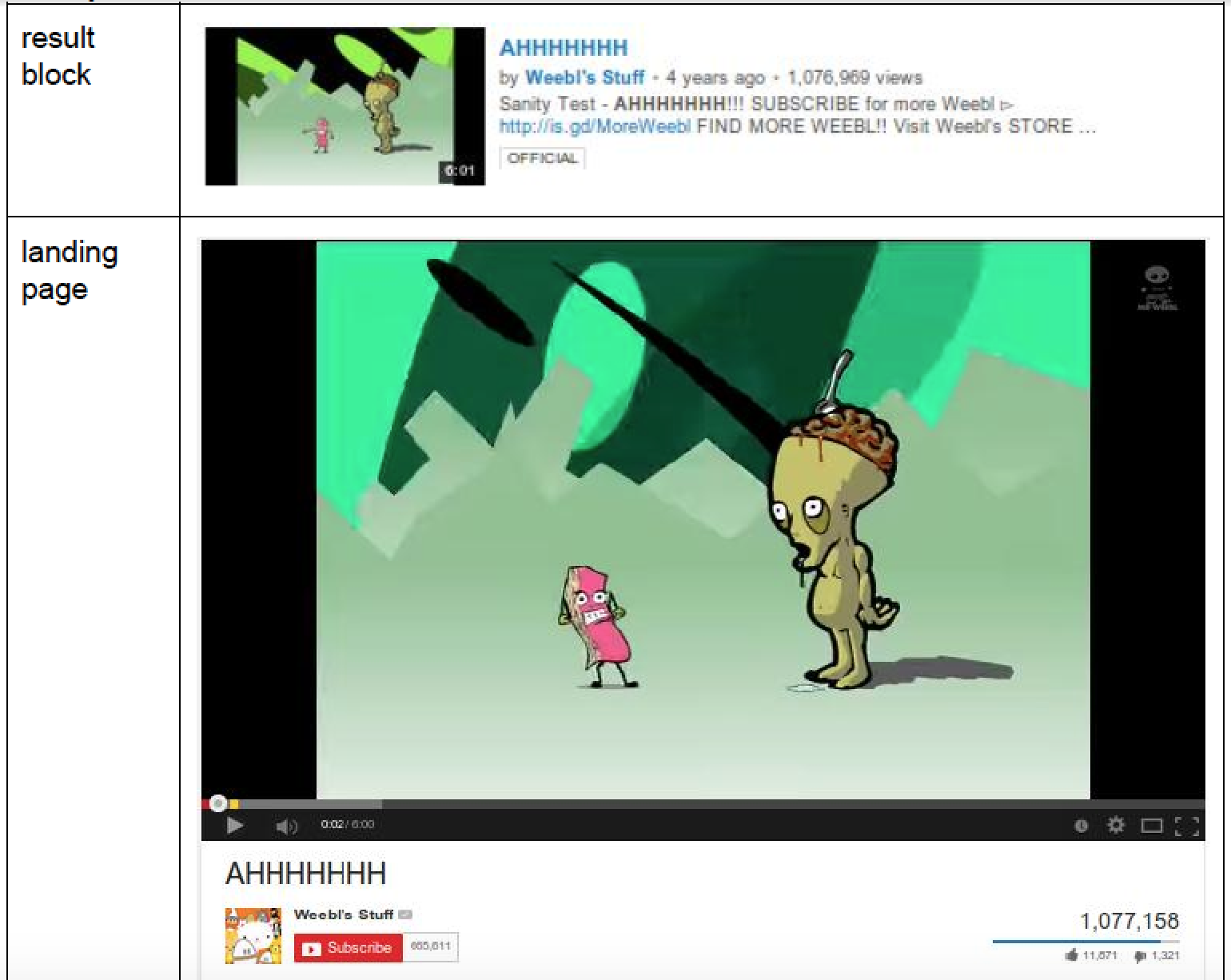

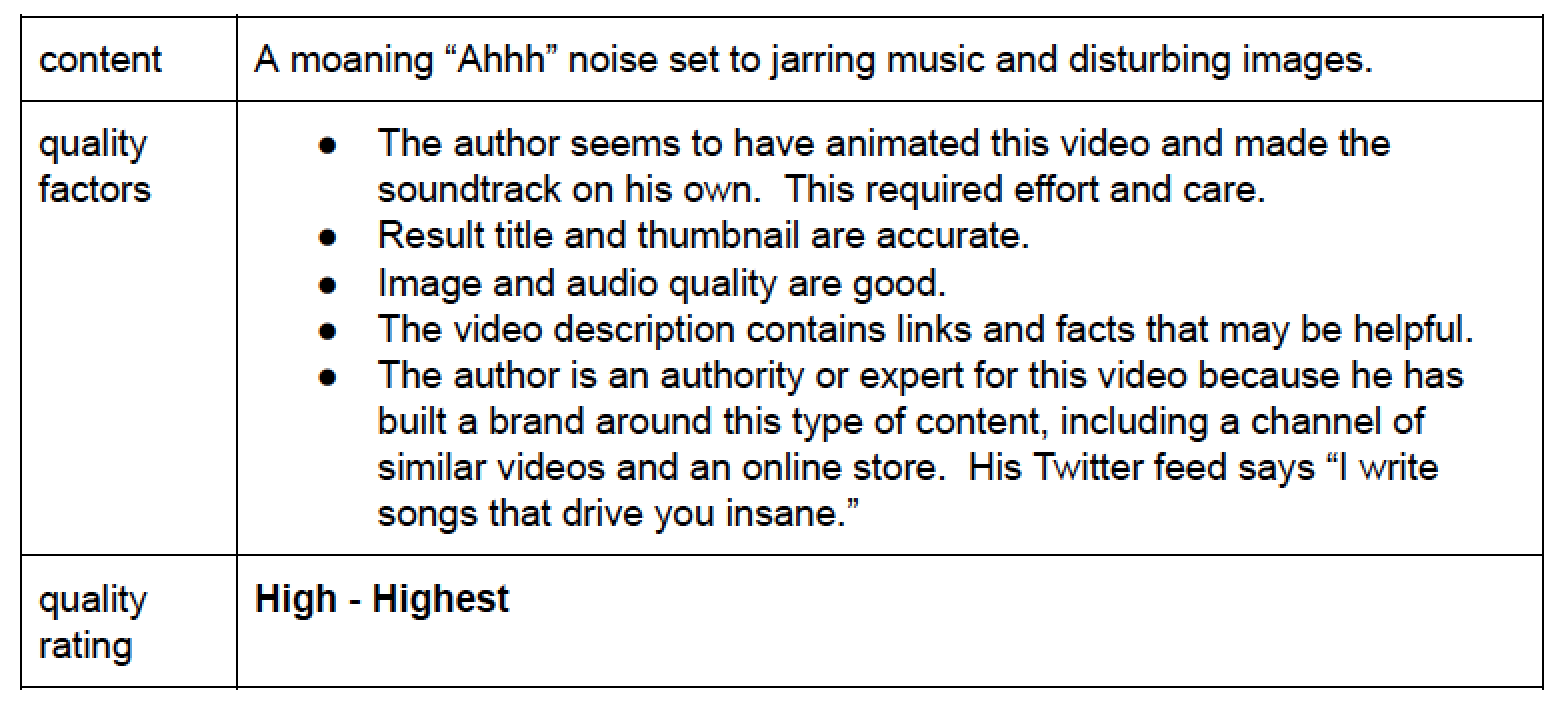

One example in YouTube’s search rating guidelines describes a video with “a moaning ‘ahhh’ noise set to jarring music and disturbing images.” The guidelines instruct raters to give the video the highest possible quality rating. “The author seems to have animated this video and made the soundtrack on his own. This required effort and care,” the guidelines state. “The author is an authority or expert for this video because he has built a brand around this type of content, including a channel of similar videos and an online store. His Twitter feed says, ‘I write songs that drive you insane.’”

These guidelines indicate how a YouTube channel like ToyFreaks, whose videos were shot and edited "with effort and care" that reflected a "brand" of content and often depicted the creator's children in potentially child-endangering situations, could have accumulated tens of millions of views and 8 million followers on the platform. YouTube shut down ToyFreaks last month during the public backlash over exploitative videos on its platform.

“It’s an example of what I call ‘value misalignment,’ which we are seeing all over the content distribution platforms,” Selman told BuzzFeed News. “It's a value misalignment in terms of what is best for the revenue of the company versus what is best for the broader social good of society. Controversial and extreme content — either video, text, or news — spreads better and therefore leads to more views, more use of the platform, and increased revenue.”

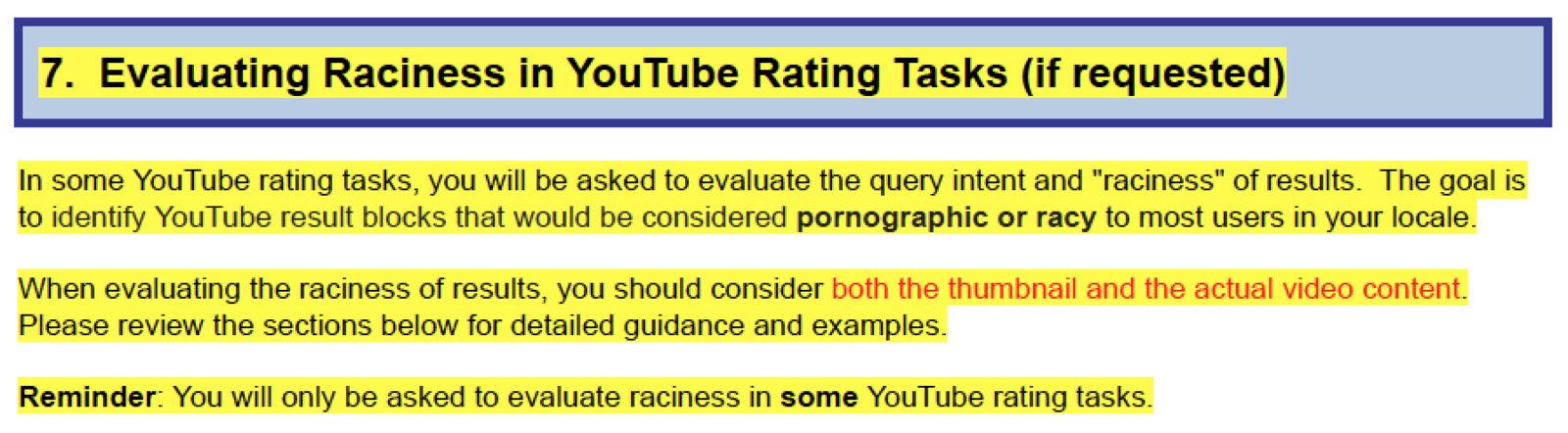

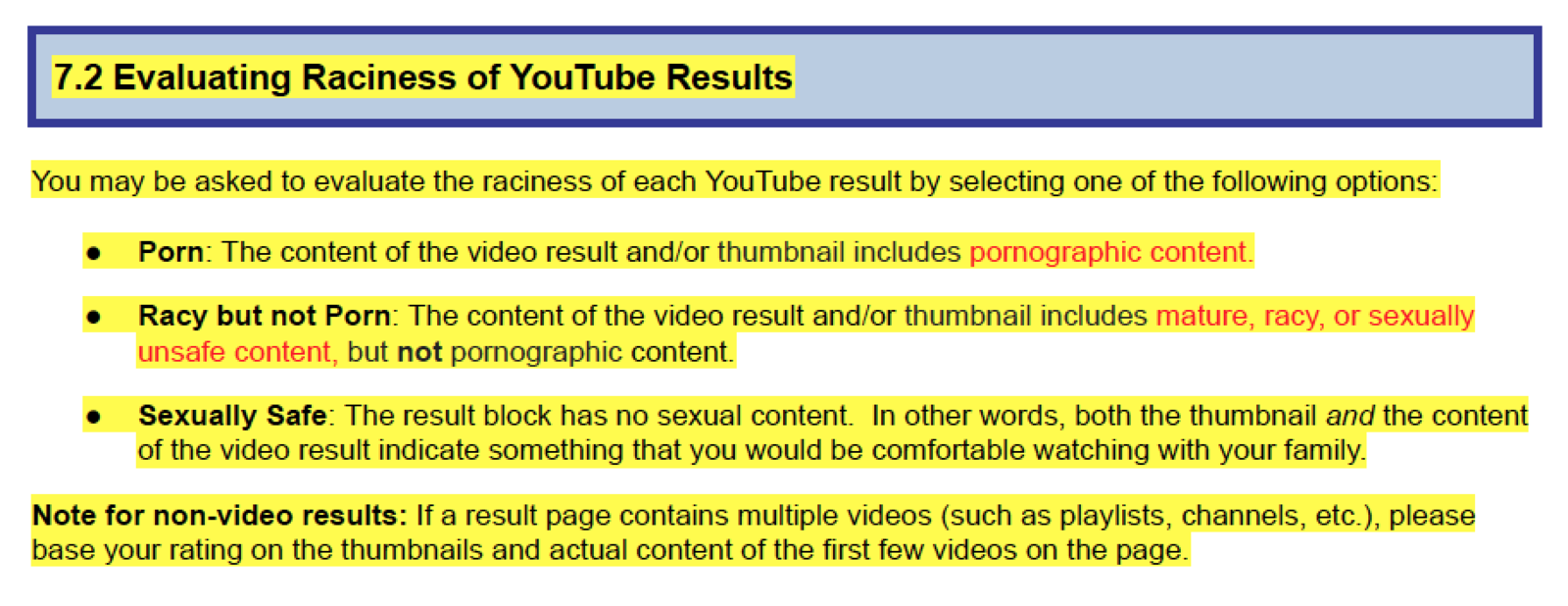

Another section in the guidelines instructs raters on “evaluating raciness of YouTube results (if requested)” and uses three categories: porn, racy but not porn, and sexually safe. In some cases, the guidelines can appear contradictory.

“The [YouTube video] has no sexual content,” the description for Sexually Safe reads. “In other words, both the thumbnail and the content of the video result indicate something that you would be comfortable watching with your family.”

One example of “sexually safe” material, according to the YouTube guidelines, would be a video called “Six Things You Need to Know Before Oral Sex,” because it “discusses a sexual act in an informative and sexually safe manner.”

Meanwhile, a video called “Blind Date Foot Fetish” was also marked “sexually safe,” with the explanation, “Content does not depict sexual behavior, and most users would not consider it racy or sexually suggestive.” It shows a zoomed-in shot of a pair of women’s feet being rubbed and tickled with a brush.

But in spite of being considered “sexually safe,” according to YouTube’s search rater guidelines, the foot fetish video today shows an interstitial notice before playing. “This video may be inappropriate for some users,” the message says, and prompts the user to click through to continue viewing the video.

Even though search raters are not the primary group tasked with flagging content for removal on YouTube, they expressed frustration at some of the strict limitations on the steps they can take. “Even if a video is disturbing or violent, we can flag it but still have to say it’s high quality [if the task calls for it],” one rater told BuzzFeed News. “Another issue is that we have a lot of tasks that ask us to rate the sexual content of videos with no corresponding tasks for violence. A lot of us feel weird marking videos as ‘sexually safe’ that are still filled with graphic violence.”

There is often no “official” way to report disturbing content on the job — apart from child pornography — if the task doesn’t explicitly call for it, two raters told BuzzFeed News. One rater said they once encountered a disturbing video and flagged it as unsafe, but they could not flag the disturbing host channel as a rater. They had to report it to YouTube as a regular user.

“I did so, and got a blanket ‘Thanks, we’ll look into it’ from YouTube,” the rater said. “I don’t know if the channel was taken down, but YouTube is like a hydra: You cut off one upsetting channel, and five more have sprung up by breakfast.”

Raters also described how YouTube puts severe time limits on tasks, which makes assessing sensitive video content more difficult. “We definitely don’t have time to go through longer videos with any detail, so some of them are closer to snap judgments,” one rater said. “I’d say one minute per video is generous.” Some videos that raters review are hours long.

If raters take too long on the tasks, the contractor company may impose penalties, according to three raters who spoke with BuzzFeed News. “Received another email telling me my rate per hour was too low,” one rater wrote in a public message board where raters gather to exchange tips and tricks. “Tonight … I just randomly assigned ratings without much thought into them. YouTube videos? Yep, not watching those — just putting down ratings.”

Raters are given the choice to skip tasks if they’re uncomfortable watching offensive content — or even opt out of disturbing content completely. They can also skip tasks if, say, the video did not load, the query is unclear, the video is in a foreign language, or the rater doesn’t have enough time. But multiple raters told BuzzFeed News they feared facing hidden penalties, such as being locked out of work, if they chose this option too often. “It’s not clear which reasons [to skip tasks] are legitimate and how many is too many released tasks,” a rater told BuzzFeed News.

Complicating matters is the inherent instability of raters’ jobs, which takes its toll on workers. Nearly all of the raters BuzzFeed News spoke to for this piece are employed part-time by the contracting company RaterLabs, which works with Google. (RaterLabs limits workers’ hours to 26 a week.) The raters do not receive raises or paid time off, and they have to sign a confidentiality agreement that continues even after their employment ends. There is no shortage of anecdotes among these workers about finding themselves dismissed suddenly via a brief email, without warning and without an explanation.

And the field is consolidating, with a few companies dominating the space and possibly driving wages down.

In late November, the rater contractor Leapforce, which owns RaterLabs, was bought out by a competing company called Appen. Appen is known among raters to have one of the lowest hourly rates in the business: as low as $10 per hour, compared with rates that go up to $17 per hour for other contractors.

“The work [Appen does] for our customers is project-based and starts and stops depending upon customer requirements,” an Appen spokesperson said in an emailed statement to BuzzFeed News. “This means that there are times when projects end with very little notice, so the work we assign people ends quickly. The variable nature of this work is similar to any part-time, contract or gig work.”

In August, three months after Wired published a report on the employment conditions at ZeroChaos, one of the major companies contracted by Google for ad quality rating work, Google abruptly ended its contract with them — even though ZeroChaos had promised some of its contractors work lasting until 2019. Possibly tens of thousands of workers lost their jobs.

“It feels like the articles are resulting in worse conditions or job loss,” a rater told BuzzFeed News. “That’s why some raters have reacted negatively to seeing [our work described] in the press.” Several workers declined to speak to BuzzFeed News for this story, citing a fear of being fired from their jobs in retaliation.

YouTube, for its part, said in a statement that it strives to work with vendors that have a strong track record of good working conditions. “When issues come to our attention, we alert these vendors about their employees' concerns and work with them to address any issues,” a company spokesperson wrote in an email to BuzzFeed News.

Since the public backlash against YouTube over unacceptable children’s content on its platform, the company has taken steps to combat the problem. It said it would soon publish a report sharing aggregated data about the actions it took to remove videos and comments that violate its policies. The company also promised to apply its “cutting-edge machine learning” that it already uses on violent extremist content to trickier areas like child safety and, of course, said that it plans to have more than 10,000 human reviewers evaluating videos on the platform in 2018. But YouTube did not comment on how it plans to revise its evaluation guidelines for its expanded workforce.