Facebook is bringing its artificial intelligence expertise to bear on suicide prevention, an issue that's been top of mind for CEO Mark Zuckerberg following a series of suicides livestreamed via the company’s Facebook Live video service in recent months.

“It's hard to be running this company and feel like, okay, well, we didn't do anything because no one reported it to us,” Zuckerberg told BuzzFeed News in an interview last month. “You want to go build the technology that enables the friends and people in the community to go reach out and help in examples like that.”

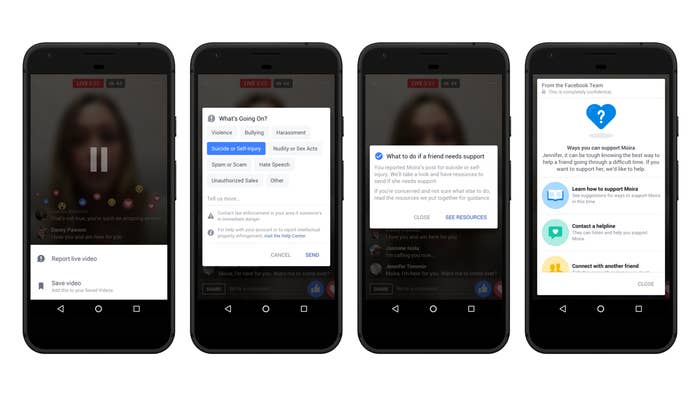

Today, Facebook is introducing an important piece of that technology: a suicide-prevention feature that uses AI to identify posts indicating suicidal or harmful thoughts. The AI scans the posts and their associated comments, compares them to others that merited intervention, and, in some cases, passes them along to its community team for review. The company plans to proactively reach out to users it believes are at risk, showing them a screen with suicide-prevention resources, including options to contact a helpline or reach out to a friend.

“The AI is actually more accurate than the reports that we get from people that are flagged as suicide and self injury,” Facebook Product Manager Vanessa Callison-Burch told BuzzFeed News in an interview. “The people who have posted that content [that AI reports] are more likely to be sent resources of support versus people reporting to us.”

Facebook’s AI will directly alert members of the company’s community team only in situations that are “very likely to be urgent,” Callison-Burch said. Facebook says a more typical scenario is one in which the AI works in the background, making a self-harm–reporting option more prominent to friends of a person in need.

While suicide prevention is a new and unproven application for artificial intelligence, Dr. John Draper, project director for the National Suicide Prevention Lifeline, a Facebook partner, says it's promising. “If a person is in the process of hurting themselves and this is a way to get to them faster, all the better,” he told BuzzFeed News. “In suicide prevention, sometimes timing is everything.”

In addition to AI monitoring for indications of self-harm, Facebook is rolling out a few other features. It will make a number of suicide-prevention organizations available for chats via Messenger, and it will present suicide-prevention resources to Facebook Live broadcasters who are determined to be at risk. Those resources appear on the broadcaster's screen and can be accessed with a tap.

Facebook's decision to keep a live broadcast running after someone has been reported as at risk for self-harm is clearly fraught. But the company appears ready to risk broadcasting a suicide if doing so gives friends and family members a chance to intervene and help. “There is this opportunity here for people to reach out and provide support for that person they’re seeing, and for that person who is using live to receive this support from their family and friends who may be watching,” said Facebook researcher Jennifer Guadagno. “In this way, Live becomes a lifeline.”

The debut of Facebook's new suicide-prevention tools comes amid growing concerns about the company's influence, and could raise questions about digital privacy. But for Facebook, which has been working hard to take a more direct role in stopping suicide, AI could be a big step forward in evaluating potentially suicidal content and making it easier for people to help friends in need. As Zuckerberg wrote in a Feb. 16 letter, “Looking ahead, one of our greatest opportunities to keep people safe is building artificial intelligence to understand more quickly and accurately what is happening across our community.”