One of the marks of a major American cultural-commercial event, as long as winners and losers are being declared, is that large numbers of people will be putting money on it. Where TV money flows — toward the Super Bowl, toward March Madness — gambling money tends to follow. Sometimes it even feels like betting is the only thing propping up otherwise-dying practices. How else do you explain Floyd Mayweather, competing decades past his sport's glory days, still pulling in record-setting purses and showing up on highest-paid athletes lists? Or how the Kentucky Derby leads SportsCenter all while the crowd at Churchill Downs seems like it's from the same era as when Secretariat ran? An event that can attract a good wager, more often than not, finds a way to stick around.

So perhaps it is no coincidence that the Academy Awards, with their attendant office Oscar pools, draw audiences like no other non-football event.

Yet compared to forecasting something like the Final Four, the Academy Awards, particularly in the major categories that most people care about, are rather predictable. Unlike sports, our other favorite gambling outlet, the Oscars are not the result of any future — and uncertain — competition being put on that night. They are more like guessing the results of an election that has already taken place.

So even if a small group of voters are making a collection of highly subjective judgments, the results of those judgments are predictable if there are some external indications of the way they are leaning. In politics, these indications are polls. For the Oscars, the indications are other award shows.

My method in generating the following forecasts was fairly straightforward. I gathered data going back to 1996 on the winners of each pre-Oscar award to determine its predictive power. For each category, I considered a separate set of relevant precursor awards, weighted by their historical performance in that category.

The most predictive awards in each category varied. For example, the top award at the Directors Guild Awards has been the strongest signal of success in the Best Picture category, matching the Oscar winner 78% of the time. For Best Director, the Critics Choice Movie Awards (CCMAs) were the most predictive at 78% as well. Each award was given a weighting according to this percentage, with one exception that I'll discuss later.
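As a rough illustration of how such a percentage could be computed, here is a minimal sketch. The function name `hit_rates`, the row layout, and the toy rows are all hypothetical placeholders, not the actual data set or code behind these forecasts.

```python
from collections import defaultdict

def hit_rates(history):
    """Share of years in which each precursor award matched the Oscar winner.

    history: rows of (year, award, award_winner, oscar_winner) for ONE category.
    """
    hits = defaultdict(int)
    total = defaultdict(int)
    for _year, award, award_winner, oscar_winner in history:
        total[award] += 1
        if award_winner == oscar_winner:
            hits[award] += 1
    return {award: hits[award] / total[award] for award in total}

# Made-up rows for illustration only (not real award results).
toy_history = [
    (2001, "Guild X", "Film A", "Film A"),
    (2002, "Guild X", "Film B", "Film C"),
    (2003, "Guild X", "Film D", "Film D"),
    (2004, "Guild X", "Film E", "Film E"),
    (2001, "Critics Y", "Film Z", "Film A"),
    (2002, "Critics Y", "Film C", "Film C"),
]

rates = hit_rates(toy_history)
print(rates)  # {'Guild X': 0.75, 'Critics Y': 0.5}
```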

I generated forecasts based on how many of these precursor awards each nominee won and how reliable those awards have been at predicting the Oscar winner. Finally, each sum was balanced by how predictable the category was overall. This means that, all else equal, I was more confident in my results in a category whose precursor awards were 60% accurate on average than in one whose awards were 50% accurate.
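The steps in this paragraph can be sketched roughly as follows. The text does not spell out how each sum is "balanced" by category predictability, so the version here makes an assumption: it blends each nominee's share of the weighted wins with an equal share for every nominee, using the average accuracy of the category's awards as the blend factor. The function name `forecast`, the nominee and award names, and the weights are hypothetical.

```python
def forecast(nominees, wins, weights):
    """Turn precursor wins into rough win probabilities for one category.

    nominees: list of Oscar nominees
    wins:     {award: nominee who won that precursor award}
    weights:  {award: historical hit rate of that award in this category}
    """
    n = len(nominees)
    scores = {name: 0.0 for name in nominees}
    for award, winner in wins.items():
        if winner in scores:  # ignore precursor winners who are not Oscar nominees
            scores[winner] += weights[award]

    total = sum(scores.values())
    if total == 0:
        # No usable signal: every nominee gets an equal share.
        return {name: 1 / n for name in nominees}

    # ASSUMPTION: "category predictability" is the mean hit rate of its awards,
    # used to shrink the raw shares toward an equal split.
    predictability = sum(weights[a] for a in wins) / len(wins)
    return {
        name: predictability * scores[name] / total + (1 - predictability) / n
        for name in nominees
    }

# Placeholder inputs, not real results or real weights.
probs = forecast(
    nominees=["A", "B", "C", "D", "E"],
    wins={"Guild X": "A", "Televised Y": "A", "Critics Z": "B"},
    weights={"Guild X": 0.75, "Televised Y": 0.5, "Critics Z": 0.25},
)
print({name: round(p, 3) for name, p in probs.items()})
```

Under this assumed blend, a category whose awards are 60% accurate on average lets a sweep push the leader closer to certainty than a category whose awards are 50% accurate, which matches the behavior described above.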

The most important awards in my model were those given out by guild associations, whose voting bodies overlap the most with Academy voters. High-profile televised awards like the Golden Globes, British Academy of Film and Television Arts Awards, and the surprisingly predictive CCMAs were also given bigger shares. The impact of lower-profile awards, like those given out by critics associations, was minimal except in a few cases (the New York Film Critics Circle and Los Angeles Film Critics Association are significant in a few categories, like Best Actor).

It is possible to construct a model that adheres too closely to historical data and tells you nothing about the future; this is known as "backfitting" (or, more commonly, overfitting) a model. But because my inputs are relatively standardized and I've kept my subjective tinkering with the model to a minimum, its past success is more meaningful. And in that regard my model performs very well, correctly predicting most categories over the past few years. Using a similar model, I accurately forecast five of six awards in results published here last year, with the lone miss coming in the peculiar Ben Affleck-less Best Director category.

This method produces a number of expected results, hewing close to conventional wisdom. But forecasts are as much about having the right perspective as they are about guessing the winner. It's important to know which nominees are true favorites and which are favorites by just a hair. Most of all, forecasts are about having a more realistic sense of what's most likely to happen.

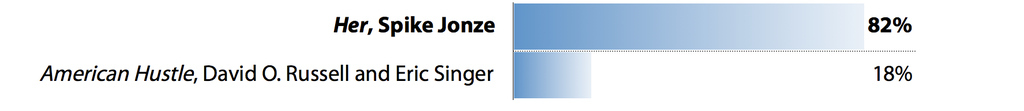

Best Original Screenplay

The first challenge in forecasting the writing categories is accounting for other awards, like the Golden Globes, that make no distinction between adapted and original screenplays. Importantly, my forecast for each type of screenplay only included data from years where the winner came from that category. Where an adapted screenplay won, for instance, my accounting for original screenplay shows no data for that year instead of a "missed" prediction. This is key because when considered all together, a win is an important signal about that script's chances. Since 1996, the Globe winner has won the Oscar in its respective category 14 of 18 times.
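The bookkeeping described here, where a year counts only toward the category the combined award's winner actually belonged to, might look something like the sketch below. The record layout, the function name `globe_hit_rate`, and the toy records are hypothetical and stand in for the real history.

```python
def globe_hit_rate(records, category=None):
    """Hit rate of a combined screenplay award against the matching Oscar.

    records:  dicts with 'globe_winner', 'globe_type' ('original' or 'adapted'),
              and 'oscar' ({'original': title, 'adapted': title}).
    category: restrict to years whose winner was of this type;
              None pools every year (winner vs. its respective category).
    """
    hits = 0
    counted = 0
    for rec in records:
        won_type = rec["globe_type"]
        if category is not None and won_type != category:
            continue  # no data for this category that year, NOT a miss
        counted += 1
        if rec["globe_winner"] == rec["oscar"][won_type]:
            hits += 1
    return hits / counted if counted else None

# Made-up records for illustration only.
toy_records = [
    {"globe_winner": "Script A", "globe_type": "original",
     "oscar": {"original": "Script A", "adapted": "Script B"}},
    {"globe_winner": "Script C", "globe_type": "adapted",
     "oscar": {"original": "Script D", "adapted": "Script C"}},
    {"globe_winner": "Script E", "globe_type": "original",
     "oscar": {"original": "Script F", "adapted": "Script G"}},
    {"globe_winner": "Script H", "globe_type": "adapted",
     "oscar": {"original": "Script I", "adapted": "Script H"}},
]

original_rate = globe_hit_rate(toy_records, "original")  # 1 hit in 2 counted years
adapted_rate = globe_hit_rate(toy_records, "adapted")    # 2 hits in 2 counted years
pooled_rate = globe_hit_rate(toy_records)                # 3 hits in 4 years
```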

What this means for this year is that we have one prohibitive favorite and one leader in a complicated race. Spike Jonze's script for Her should be expected to win in the original category after winning at the Writers Guild Awards, the CCMAs, and the Globes. Of the major awards, Jonze ceded only the BAFTAs to David O. Russell and Eric Singer's script for American Hustle.

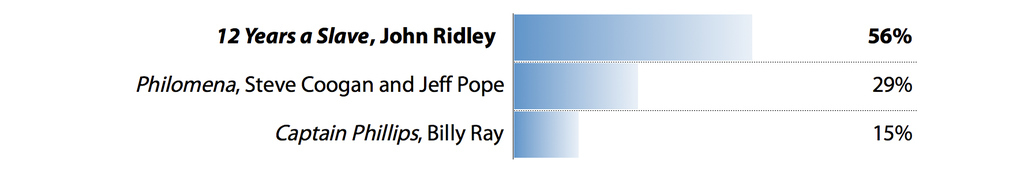

Best Adapted Screenplay

Best Adapted Screenplay is more difficult to predict because of a lack of data. The two conventional front-runners for this award, 12 Years a Slave and Philomena, were ineligible for the WGA ceremony, whose winner in this category has won the Oscar eight of the last nine years. In their absence, Billy Ray won for Captain Phillips.

Forecasting works best not when followed blindly but when accompanied by a good dose of context, and in this case, the WGA result seems beside the point. Like an opinion poll that asks a misleading question, a win in a precursor award is less significant if it does not ask the same of the voters as what they will be asked at the Oscars. I was forced to give a smaller weighting to the winner of the often reliable WGA awards, since the revealed preferences of the voters do not mean much if their votes are only revealing a preference for Captain Phillips over Before Midnight.
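The text does not say how much smaller the WGA weighting was made. One hypothetical way to discount it, shown purely as an illustration and not as the rule actually used, is to scale the award's historical hit rate by the share of Oscar nominees that were eligible for it. The function name `discounted_weight` and the scaling rule are assumptions; the eight-of-nine figure comes from the section above, and three of five assumes the usual five-nominee field with two scripts ineligible.

```python
def discounted_weight(base_weight, eligible_nominees, total_nominees):
    """Scale an award's weight by the fraction of Oscar nominees it could have picked.

    ASSUMPTION: a simple proportional discount, chosen only for illustration.
    """
    if total_nominees <= 0:
        raise ValueError("total_nominees must be positive")
    return base_weight * eligible_nominees / total_nominees

# WGA winner matched the Oscar in eight of the last nine years;
# two of five nominated scripts were ineligible this year.
wga_weight = discounted_weight(8 / 9, eligible_nominees=3, total_nominees=5)
print(round(wga_weight, 3))  # 0.533
```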

Without a huge amount of data to work with, John Ridley's script for 12 Years a Slave leads this category. Though it should not be considered a heavy favorite, its win at the CCMAs and the film's strength in other categories give it an edge.

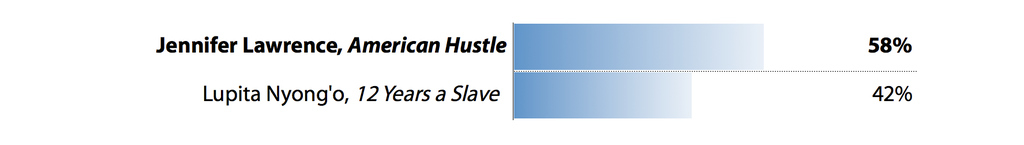

Best Supporting Actress

Lupita Nyong'o and Jennifer Lawrence have traded wins this awards season, making this the most uncertain of all the categories. In Lawrence's favor are her wins at the BAFTAs and Golden Globes, the two awards that have been most reliable at predicting the eventual Oscar winner. In Nyong'o's favor are wins at the SAG awards and the CCMAs, along with the fact that she provided, in her feature film debut no less, one of the most powerful, haunting scenes of the year.

An Oscar win for Lawrence would make her one of the few back-to-back winners in the show's history before she reaches her 24th birthday. As improbable as that sounds, the numbers here suggest she has a slight edge.

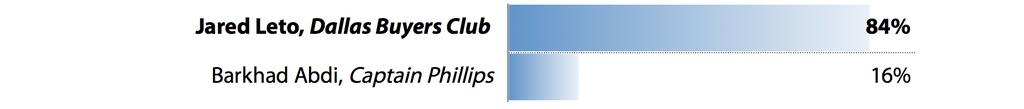

Best Supporting Actor

The Supporting Actor category lacks the drama of the Supporting Actress category. Jared Leto has swept all but one of the major awards leading up to the show.

Barkhad Abdi's recent BAFTA win notwithstanding, Leto is a huge front-runner to win over Abdi in his film debut in Captain Phillips and Michael Fassbender's manic performance in 12 Years a Slave. Abdi's chances are non-negligible, but this category has been remarkably forecastable in recent years. The last real surprise was seven years ago, when Alan Arkin won for Little Miss Sunshine after, like Abdi, winning only the BAFTA.

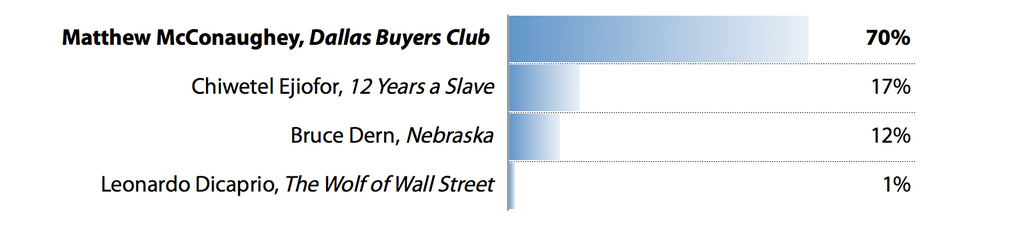

Best Actor

The way the pre-Oscar awards have shaken out, there will be more anticipation as to whether McConaughey can top his Golden Globes acceptance speech than whether he'll be the one up there making a speech at all. After a slow start through the critics awards, McConaughey has won at the SAGs, Globes, and CCMAs. If there is an upset, it would come from BAFTA winner Chiwetel Ejiofor, who competed at the British award show in a Best Actor category for which McConaughey was not even nominated.

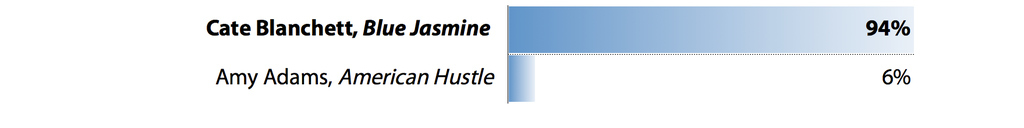

Best Actress

Best Actress also clearly favors one nominee. Five years after playing Blanche DuBois on stage, and a decade after winning her first Oscar, Cate Blanchett's rendition of the distraught DuBois-meets-Ruth Madoff socialite in Blue Jasmine should get her the statue. Since she has accepted every significant pre-Oscar award, it would be a major surprise if she did not win.

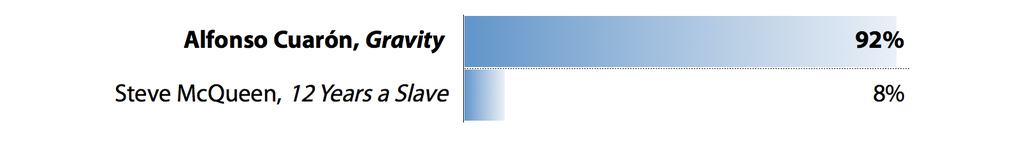

Best Director

The most surprising aspect of this category is the way that Alfonso Cuarón is such a huge favorite for a film that is an underdog to win Best Picture. Though splits can and do happen (and seem to always involve Ang Lee), they usually come as surprises — it's rare for the favorites in two categories to come from different films. Yet Cuarón has won nearly every major directing award to this point, including the top award from the Directors Guild, a signal of an imminent Oscar win nine times out of the last 10.

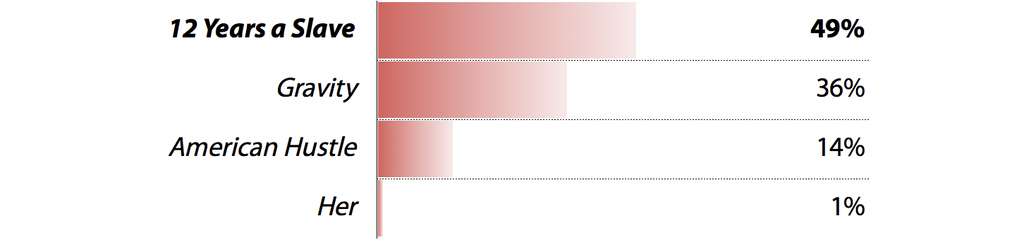

Best Picture

Steve McQueen's 12 Years a Slave is the favorite for the show's biggest award, even if, surprisingly, it is not the juggernaut some have expected it to be. Its wins at the Golden Globes, BAFTAs, and CCMAs give it just about even odds to win.

A more interesting question may be which film is most likely to be its spoiler. For as much as this race has been painted as one between the penetrating, artful, significant 12 Years a Slave and the smooth, fun, free-wheeling American Hustle, pre-Oscar signals suggest that Gravity is a more likely runner-up. Its spot at No. 2 is based on its win at the DGAs, which have been the most reliable predictor of Oscar success; its Best Direction award has been given to the director of 11 of the last 12 Best Picture winners. Gravity also shared the top award from the Producers Guild with 12 Years a Slave, the second most significant precursor award in this category.

If, in a year where questions of morality pervaded our discourse around film to an even greater degree, the historically skittish Academy chooses to honor the one film that drew the ire of just about no one (save for one meticulous astrophysicist), few would be shocked. But odds are better that by the end of the night, 12 Years a Slave will be able to lay claim to being not only the most important film of the year but also the best.

All in all...

Best Picture: 12 Years a Slave (49%)

Best Director: Alfonso Cuarón, Gravity (92%)

Best Actress: Cate Blanchett, Blue Jasmine (94%)

Best Actor: Matthew McConaughey, Dallas Buyers Club (70%)

Best Supporting Actress: Jennifer Lawrence, American Hustle (58%)

Best Supporting Actor: Jared Leto, Dallas Buyers Club (84%)

Best Original Screenplay: Her, Spike Jonze (82%)

Best Adapted Screenplay: 12 Years a Slave, John Ridley (56%)