The Periscope link was titled "live sex" and colourful hearts, indicating people were liking the video, flooded the right-hand side of the screen. "This girl is getting raped," a 20-year-old student in London tweeted.

"I beg you, watch the whole thing," she added in another tweet. "There was a point where the girl didn't want sex no more, and the guy was telling her 'one more'."

The Periscope live video, shared on Twitter on 30 March, showed three young men and one young woman engaging in sexual activity in a well-lit bedroom in London. According to some of those who watched it, the males were fully clothed, while the female was only partially dressed. When one of the men was having sex with her, the other two were seen to be watching and recording on their phones, and were often heard laughing, or telling her what she should do.

After being alerted by one of the viewers, BuzzFeed News found and interviewed 15 people who watched the video. Although they publicly tweeted the link, all were willing to recount what they saw only if their quotes were kept anonymous.

"There was big hype over the video, the stream had a good 2.8K views," one 18-year-old student from Hackney, east London, told BuzzFeed News. "People were constantly inviting their friends to watch."

It took some of the viewers a little while to realise that what they were watching live was a serious crime: rape.

While live streaming is not a new phenomenon, the aggressive push by social media companies over the last year to promote their live video platforms – such as Twitter-owned Periscope and, more recently, Facebook Live – has ignited its popularity.

But the most appealing factor of live streaming – raw content at the touch of a button – is also its biggest threat: The inability of companies to monitor live content has spawned an entirely new set of serious safety and privacy issues for users. The freedom to live-stream just about anything, anywhere in the world, has prompted a new and uncomfortable predicament for social media companies: What should they do if – or when – a crime is being live-streamed on their platform?

As the Periscope video progressed, it was flooded with comments. People disputed the participants' ages – "they look underage" and "they're in year 9" – while others who claimed to recognise the girl began to type her name, calling her a "sket" and a "skank".

But as thousands of people joined the stream, the mood in the video shifted. Viewers said that, at first, the girl shown on screen appeared to be a willing participant – then, when she saw that the others were filming, she asked them not to. Viewers said she then told them "no", as well as explicitly saying that she wanted to stop, and that "she was almost passed around".

"I felt helpless," a 19-year-old woman living in west London told BuzzFeed News. "She was going along for a bit at first, then it was clear she didn't want to be there. All I could do was report it and wait. I sat there and watched people talk about it on Twitter, while it just carried on live."

What sets live video apart from pre-recorded or photographed content – and the reason it arguably needs to be treated differently from other content – is that a viewer is intrinsically, in some way or another, involved in the moment. Its viewers are not passive: They may be sitting behind a screen in a different country, but they will also feel a sense of responsibility, even though they are powerless to stop what is happening before their eyes.

"The girl in the video said no and that she didn't want to have sex any more, but the guy was telling her to continue," said the 20-year-old student who tweeted the Periscope link describing it as rape. "A lot of people couldn't see that it was rape because the boys weren't physically grabbing her or hitting her."

"I was more angry at the fact that while they recorded they were screaming 'get this to 2K likes' and 'like up the post,'" she added.

Viewers of the live video were frustrated. They thought that by flagging it, there might be a way they could intervene while the apparent crime was in progress – maybe they could alert authorities to stop it.

But even after the stream ended, viewers who were horrified that they had been unable to step in discovered that there was also little they could do once it had finished.

Soon after streaming the incident – and after many of the Periscope comments and tweets described the video as rape – the person who filmed the footage deleted his Periscope account, as well as his Twitter account. If a user deletes a Periscope video within 24 hours of airing, the company has no copy of it. That means there is now no recording of the live stream on Periscope's servers, and so if someone did want to take the case to police, there would be no video evidence available.

Periscope told BuzzFeed News on two occasions that, for privacy and security reasons, it could not comment on individual cases, and so the company was unable to confirm whether it was aware of such a video, or whether it had been alerted to the video by users.

Instead, its parent company, Twitter, directed BuzzFeed News to the terms and conditions regarding inappropriate, graphic, or spam content, outlined on Periscope's website. "Periscope is about being in the moment, connected to a person and a place," it reads. "This immediacy encourages direct and unfiltered participation in a story as it's unfolding. There are a few guidelines intended to keep Periscope open and safe. Have fun, and be decent to one another."

The company states it gives law enforcement the option to make an "emergency disclosure request" to access videos of criminal activity, but only if there is an "exigent emergency that involves the danger of death or serious physical injury to a person that Twitter may have information necessary to prevent." Rape and sexual assault were not listed among its priorities for emergency requests.

Marina Lonina, who is accused of live-streaming the rape of her friend, and Raymond Boyd Gates.

The London live stream was not an isolated incident. Last week, Raymond Boyd Gates, 29, was in court having been charged with the rape of a 17-year-old girl in Ohio. What made the case so shocking and unusual was that one of the girl's friends, Marina Lonina, 18, was in the room at the time and live-streamed the alleged attack to an audience online.

Gates and Lonina have pleaded not guilty to rape and sexual battery, among a number of other charges. The prosecutor said Lonina became excited by turning the attack – which she broadcast on Periscope – into a public spectacle, responding to the comments left by viewers.

"She got caught up in the likes," the prosecutor told the court.

Columbus police were able to obtain a copy of the Periscope video in which they say the girl appears to struggle and can be heard screaming "no, it hurts so much" and "please stop" several times.

Police say they were notified of the alleged assault not by Periscope's monitoring team, but by a friend of Lonina's, who was watching the live stream in a different state.

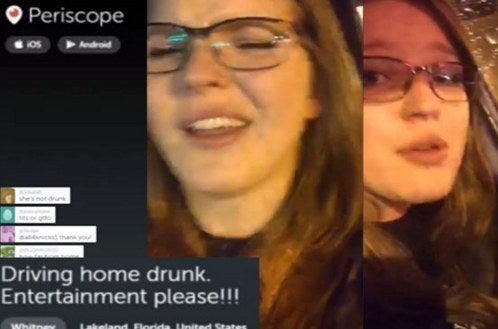

In recent months, a spate of criminal activity has been documented on live-streaming apps. Last October, a woman in Florida used Periscope to broadcast herself drink-driving. She was arrested after one of her viewers reported her to police. Then in November, a mother from the UK revealed in a harrowing interview that she was beaten and raped daily, and that her abuser aired the abuse live on the internet. At least one crime has been unintentionally live-streamed: Three weeks ago, a man in Chicago was shot while filming himself on Facebook Live. It is thought to have been the first time someone has been shot while live on Facebook.

And on Tuesday, footage of a brawl between dozens of teenagers outside a shopping centre in London was broadcast live on Periscope. Viewers of the eight-minute video, which showed people fighting and spitting on each other, shared the stream with friends on Twitter.

For the majority of live video users, the tool is harmless – a fun way to share intimate moments of their lives at the touch of a button. Apps like Periscope, Meerkat, Twitch, and Facebook Live host hours upon hours of footage showing a glimpse into users' personal lives. On Periscope, people are watching 40 years' worth of live video every day. Snapchat – which introduced its Live Stories feature last year – has 100 million daily active users, with 8 billion video views each day.

In a recent interview with BuzzFeed, Facebook CEO Mark Zuckerberg spoke about his excitement over the "raw" experience live video offers. Nothing can be planned with live video, Zuckerberg stressed, making it great for anybody to share "visceral" content, especially "young people and teens".

"Because it's live, there is no way it can be curated," he said. "And because of that it frees people up to be themselves. It's live; it can't possibly be perfectly planned out ahead of time."

Raw video's lack of curation is seen as a benefit, not a hindrance, by both Twitter and Facebook, two of the largest platforms offering the service. But with the colossal amount of content streamed every day, the question looms of whether social media giants can be expected to keep on top of possible crimes broadcast live on their platforms.

Both Twitter and Facebook have guidelines and a flagging system in place that aim to filter out graphic content such as pornography or overtly sexual content, violence, and illegal activity. However, the current moderation processes for flagged content at social media companies are notoriously slow and flawed. It can often take days, or weeks, for posts to be taken down, posing an obvious problem for live video considering the range of offensive, violent, and criminal activity that could potentially be aired to an audience of thousands in real time.

Facebook told BuzzFeed News the company is working to improve its current reporting system. On its platform alone, users flag more than 1 million items of content for review every day. (Facebook hasn't stated what percentage of that is from live video.) The company says it wants to build stronger systems to respond efficiently to live streams, so it can become less reliant on users to hit the report button. Specifically, Facebook says it is planning to hire more people to review reported content.

"We believe the vast majority of people are using Live to come together and share experiences in the moment with their friends and family, so we want the Live experience to be as immediate as possible," a spokesperson told BuzzFeed News. "We encourage anyone watching a Live video to report violations of our Community Standards while they are watching; they don't have to wait until the Live broadcast is over."

"We've been working on ways to improve the Live reporting function," the spokesperson added. "We've been aggressively growing the global team that reviews these reports and blocks violating content as quickly as possible."

It's unclear whether more bodies to monitor live video will make a significant dent in the criminal or inappropriate activity being aired live. Neither Facebook nor Twitter says it has a fast-tracked process that prioritises flagged live-streamed video over content that's already been uploaded.

Daphne Keller, the director of intermediary liability at the Stanford Center for Internet and Society, recognises that the current systems in place for flagged content are slow, and says it would be "sensible" for companies to prioritise live video over older content to some degree.

"If someone thinks they are seeing a crime in the moment, like rape, it makes sense for that content to go to the front of the queue," Keller told BuzzFeed. "But the complaint system is slow, manual, and gets a lot of spam, so if you're processing that while trying to address a real emergency in real-time, you're faced with a lot of complications."

Keller says that even when a company comes across illegal content, the problem isn't removing it – that part, she says, is easy. The issue is knowing what to do next.

"If you're one of these companies, and you know this illegal thing is going on, taking it down isn't hard – getting content off the internet is almost secondary in that situation. Instead, the high priority is to do what you can to let the local law enforcement know what's going on. If you are a user and you see a live feed of crime, the first thing you want to happen is to alert the local police of where it's happening, if the location is known."

Companies can make that easier. Keller says the reporting system could have an option for 'urgent reaction' in live video.

Companies are then hit with a second glaring problem: the type of flagged content that is chosen for removal, and whether some types of content should be prioritised over others. For example, would social media companies respond to a report of a live video of sexual assault as urgently as, say, a robbery or a murder?

Social media companies do not publish details of their internal content moderation guidelines, but in some cases, a hierarchy of what they choose to respond to more quickly already exists. For example, Facebook has a fast-track alert for child exploitation, required by law, and another for suicide. Still, as it stands, there is no clear process – on any platform, whether that be Facebook, Twitter, or Snapchat – to flag and remove videos of rapes in progress.

Soraya Chemaly, a writer whose work focuses on free speech and the role of gender in culture, told BuzzFeed News that one of the problems is that moderation is a human decision-making process, and requires cultural fluency and an assessment of context. Details of moderation practices are routinely hidden from public view, she says in a recent piece, and as a result, very little is known about how these companies set their policies.

"In theory, graphic depictions of violence, or any violence, are in violation of guidelines of most mainstream platforms," Chemaly told BuzzFeed News. "At Periscope, for example, they have rules barring pornographic and overtly sexual content, as well as explicitly graphic content or media intended to incite violent, illegal, or dangerous activities. However, user guidelines exist separate and apart from internal processes for moderating the content and the company will not comment publicly about their processes."

The live-streaming of criminal activity, and the moderation of such content, remains one of the most pressing issues faced by social media companies that host it. It also poses a number of legal questions that haven't been answered before: Could a viewer of one of these streams be called as a witness if the case ends up in court? How much responsibility, if any, do companies bear for the content of live videos? And if someone decides to live-stream criminal activity they see in public, could they be prosecuted?

Companies pushing their users to embrace live video will be forced to consider whether they need a different or more advanced way of monitoring and removing illegal footage. Keller points to a debate happening within tech circles over whether companies should be proactively searching for content that violates guidelines, or waiting for it to be flagged.

Though this new technology remains open to abuse, she is optimistic about the future of live video and its role in promoting transparency and insight into what's happening in the world.

"Criminals can live-stream an assault, but victims are also using live stream to record things like police brutality," Keller said. "Say you're recording police brutality in the US with live video, which is being streamed to an immediate audience – smashing the phone won't stop anything if the video is remotely backed up and seen by viewers already, and that protects you and makes your evidence of wrongdoing valuable. The streaming features on Twitter and Facebook are similar to those efforts – they're trying to find a way of immediately putting something on the record.

"With that, there is great potential with live video for showing or letting the world know about something – whether it's good or bad."

CORRECTION

Marina Lonina and Raymond Boyd Gates have pleaded not guilty to the charges against them and are awaiting verdicts. An earlier version of this post said they had been convicted.